Imagine building a world-class AI model, only to realize your data sources are an uncoordinated mess: different formats, questionable quality, and zero transparency about where the data came from. Welcome to one of the thorniest challenges in decentralized AI: data coordination. As the DePIN (Decentralized Physical Infrastructure Networks) movement gains momentum, projects are racing to solve this problem with verifiable datasets that can be trusted, audited, and used to power advanced AI workflows.

The Data Dilemma: Why Decentralized AI Needs Trustworthy Inputs

Decentralized AI systems promise huge advantages for privacy and scalability. But they also introduce a new set of headaches:

Top Data Coordination Challenges in Decentralized AI

-

Data Heterogeneity: In decentralized AI, data from different nodes is often non-IID (non-independent and identically distributed). This diversity can cause significant accuracy loss in collaborative machine learning models, making it tough to achieve consistent results across the network.

-

Data Quality and Standardization: Ensuring consistent data quality and formatting across decentralized sources is a major hurdle. Varying standards and input methods can lead to noisy or incompatible datasets, which degrade AI performance.

-

Regulatory Compliance: Decentralized data sharing must comply with strict regulations like GDPR and HIPAA. Coordinating data across jurisdictions complicates privacy, consent, and auditability requirements, raising legal and ethical concerns.

-

Security Risks: Decentralized systems are vulnerable to adversarial attacks such as data poisoning, where malicious actors inject false data to corrupt AI models. Ensuring robust security and integrity is a constant challenge.

-

Infrastructure Limitations: Running decentralized AI requires significant computational resources. Many nodes may lack the necessary hardware or bandwidth, limiting the adoption of advanced AI algorithms and slowing progress.

-

Verifiable Datasets: Guaranteeing data authenticity and integrity is essential. Solutions like Irys and Drynx use cryptographic proofs, on-chain storage, and smart contracts to create transparent, auditable, and standardized datasets for decentralized AI workflows.

Let’s break it down:

- Data Heterogeneity: Not all nodes speak the same language. Data can be non-IID (not independent and identically distributed), leading to accuracy drops in federated learning models. This isn’t just academic – it’s a real-world performance drag (source).

- Quality and Standardization: When everyone brings their own dataset to the party, you get a wild mix of formats and standards. This inconsistency can seriously degrade your model’s output (source).

- Regulatory Compliance: Decentralized means global – but crossing borders with sensitive data invites regulatory headaches (think GDPR/HIPAA). Tracking who accessed what becomes mission-critical.

- Security Risks: Decentralization opens the door for adversarial attacks like data poisoning. If you can’t verify your training data, your model is at risk (source).

Irys and Programmable Datachains: The DePIN Game-Changer

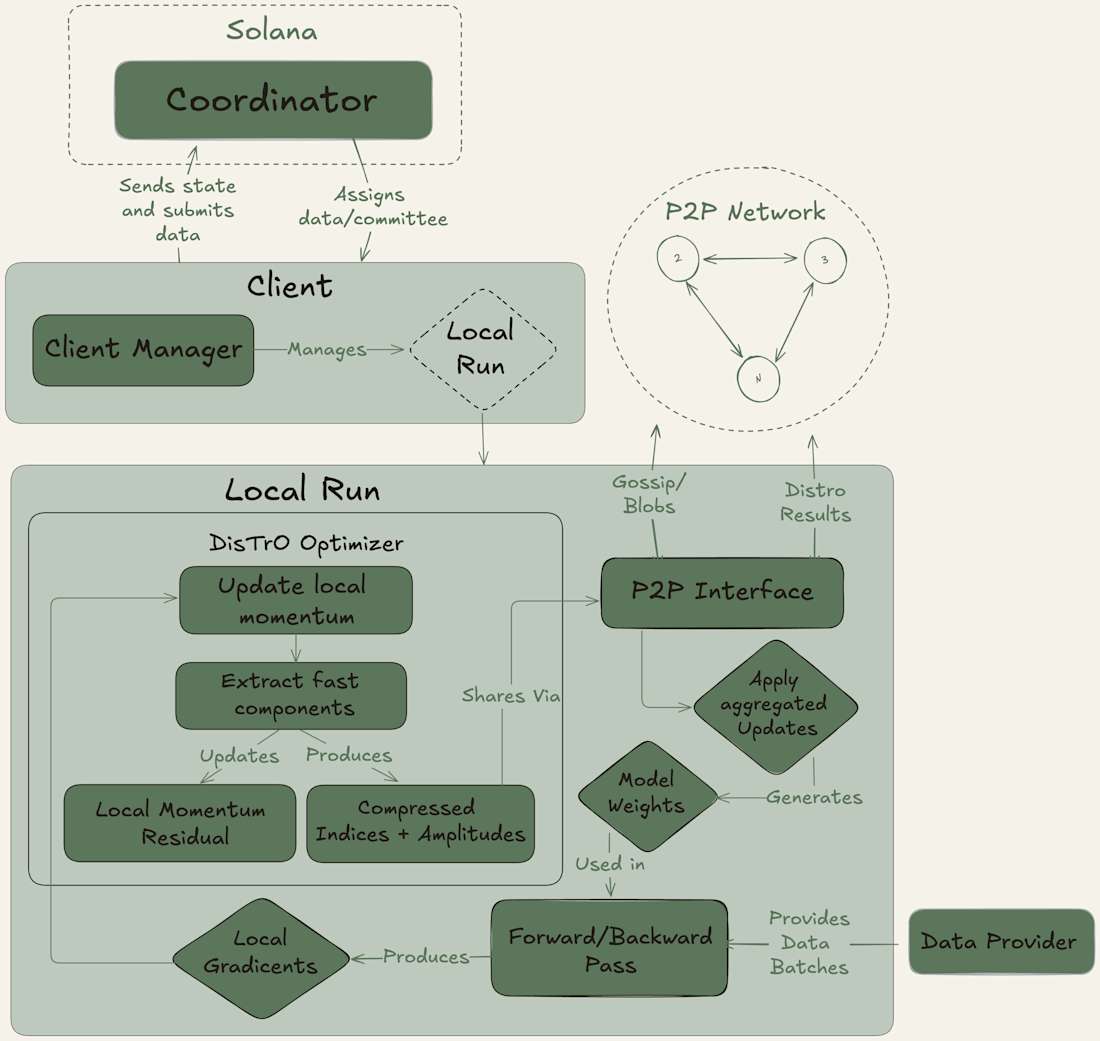

This is where programmable datachains like Irys are rewriting the rules. Think of Irys as the “AWS of onchain data infrastructure” – but instead of relying on centralized cloud servers, it offers low-cost, verifiable storage with smart contract programmability. Here’s why that matters:

- Permanently On-Chain: Data uploaded to Irys stays there, immutable and auditable.

- Programmable Verification: Smart contracts can automatically check if datasets meet certain standards before they’re used for training or inference.

- A Trigger for Workflows: As soon as verified data lands on-chain, it can kick off downstream AI processes, no human intervention needed!

This isn’t just theory. Projects are already prototyping GPT agents that use Irys as an on-chain trust anchor for their training memory (source). The result? Models that know exactly where their knowledge comes from, and users who can trust every inference made.

The Building Blocks: How Verifiable Datasets Actually Work

The magic behind verifiable datasets comes down to cryptography and automation. Here’s what’s under the hood:

- Cryptographic Hashing: Every dataset gets a unique fingerprint so any tampering is instantly detectable.

- ZK Proofs and Encryption: Advanced techniques like zero-knowledge proofs (ZKPs) let you prove something about your data without revealing the raw info itself (source). Solutions like Drynx push this even further by enabling secure statistical analysis across distributed sources.

- Smart Contracts for Auditing: Instead of manual checks, smart contracts enforce standards automatically, every access or update is logged transparently on-chain (source). No more black boxes!

The upshot? With these tools in play, anyone contributing or consuming data in a DePIN-powered AI network gets instant assurance that what they’re using is real, untampered-with, and compliant with whatever rules matter most.