Decentralized AI compute networks are rapidly transforming the landscape of artificial intelligence, shifting power away from centralized hyperscalers and toward a more distributed, community-driven model. At the heart of this transformation are edge nodes: everyday devices and specialized hardware that collectively process data, train models, and execute inference closer to where data is generated. This shift isn’t just about technology – it’s about democratizing access to AI compute, optimizing resource utilization, and enabling new classes of applications that demand speed, privacy, and scalability.

Edge Nodes: The Backbone of Decentralized AI Infrastructure

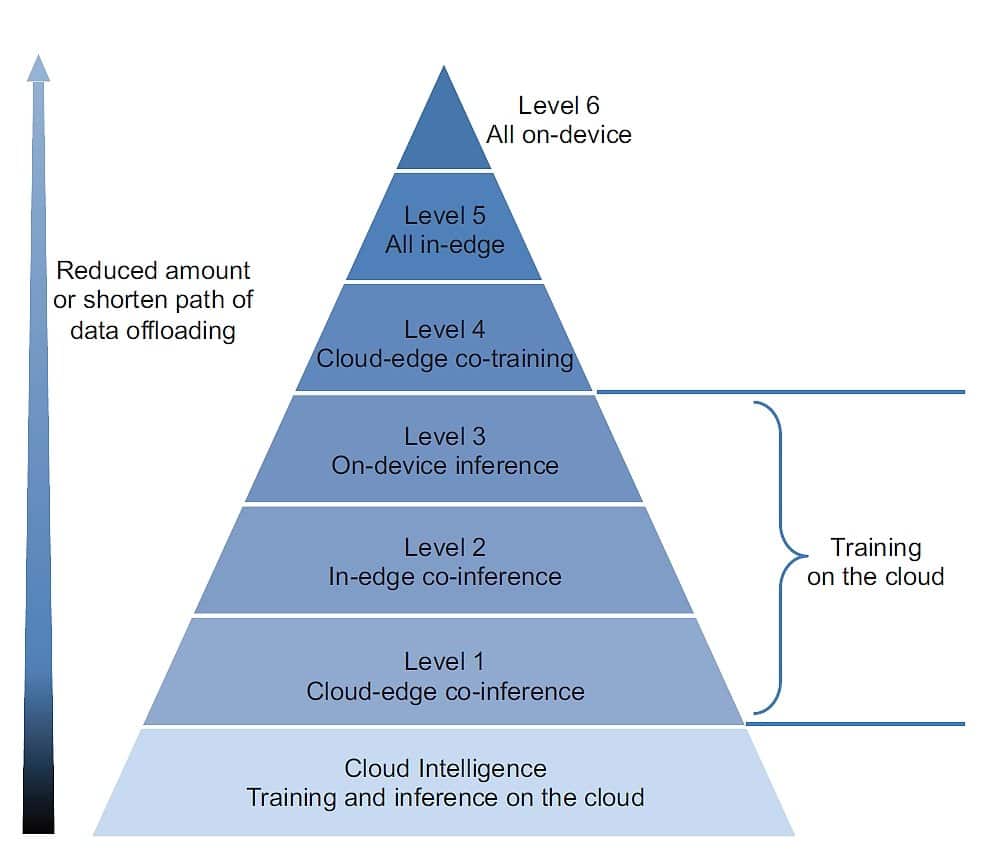

Traditional cloud computing has long relied on centralized data centers to handle intensive AI workloads. However, as the appetite for compute grows exponentially, this model faces critical limitations – bandwidth bottlenecks, latency issues, privacy concerns, and escalating costs. Enter edge nodes: distributed devices ranging from smartphones and IoT gateways to dedicated GPU servers. By harnessing their idle capacity through decentralized protocols like those pioneered by Edge Network, these nodes form a resilient mesh capable of handling real-time AI tasks at scale.

This paradigm shift is not theoretical. Networks such as EdgeAI, Society AI’s 100,000-node inference engine, and NodeGoAI are already operational or in advanced development. They leverage blockchain for transparency and smart contracts for automated rewards in cryptocurrency tokens – aligning incentives for node operators worldwide.
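To make "automated rewards" concrete, here is a minimal sketch of the kind of payout rule a smart contract might encode: an epoch's token pool is split in proportion to each operator's verified compute units. It is written in Python for readability rather than in a contract language. The function name, the integer "base unit" accounting, and the rule that rounding leftovers go to the largest contributor are illustrative assumptions, not the logic of any network named above.

```python
def distribute_rewards(pool: int, work: dict[str, int]) -> dict[str, int]:
    """Split `pool` token base units across operators in proportion to their verified work units.

    Hypothetical payout rule: integer floor division (on-chain code usually avoids floats),
    with any rounding remainder credited to the largest contributor so the pool is fully paid out.
    """
    total = sum(work.values())
    if pool < 0 or total <= 0 or any(units < 0 for units in work.values()):
        raise ValueError("pool and work units must be non-negative, with some work reported")
    payouts = {node: pool * units // total for node, units in work.items()}
    # Floor division can leave a few base units unassigned; credit them to the top contributor.
    dust = pool - sum(payouts.values())
    top = max(work, key=work.get)  # ties go to the first operator listed
    payouts[top] += dust
    return payouts


if __name__ == "__main__":
    print(distribute_rewards(1_000, {"node-a": 50, "node-b": 30, "node-c": 20}))
```

Integer arithmetic keeps the payout deterministic and guarantees the total paid equals the pool, which matters when every node has to agree on the result.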

Key Advantages: Speed, Privacy and Scalability

Key Benefits of Edge Nodes in Decentralized AI Networks

-

Reduced Latency for Real-Time Applications: Edge nodes process data closer to its source, dramatically minimizing transmission times. This is crucial for latency-sensitive use cases like autonomous vehicles and industrial automation, where milliseconds matter. (Edge Network)

-

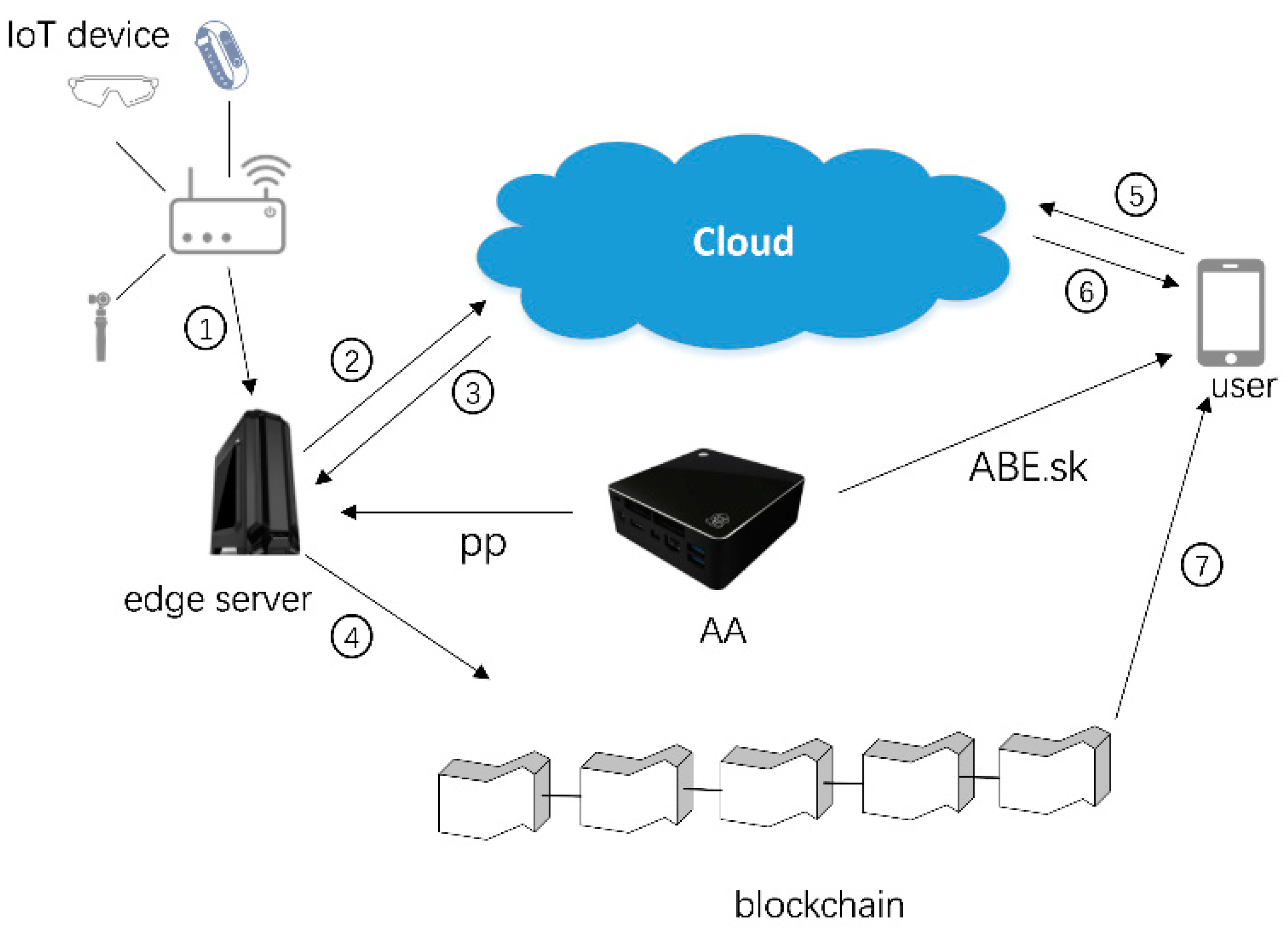

Enhanced Privacy and Security: By keeping sensitive information on local devices, edge nodes reduce the risk of data breaches. Blockchain integration further strengthens data integrity and transparency, making decentralized AI networks more secure. (Medium: Decentralized AI in Edge Computing)

-

Scalability and Efficient Resource Utilization: Edge nodes tap into idle computing resources across a distributed network, enabling dynamic scaling and optimal resource usage. This approach accommodates fluctuating workloads without overloading centralized servers. (EdgeAI Documentation)

-

Improved Reliability and Model Accuracy: Research frameworks such as DRAGON and E-Tree Learning optimize edge node performance, enhance fault tolerance, and boost the accuracy of AI models beyond traditional federated learning. (DRAGON Framework, E-Tree Learning)
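A back-of-the-envelope calculation shows why physical distance matters. Light in optical fiber travels at roughly two-thirds of its vacuum speed, about 200 km per millisecond, so propagation alone sets a floor on round-trip time before any queuing, routing, or compute delay is added. The distances below are illustrative assumptions, not measurements from any particular network.

```python
# Approximate signal speed in optical fiber: ~2/3 of c, i.e. about 200 km per millisecond.
FIBER_KM_PER_MS = 200.0


def min_round_trip_ms(distance_km: float) -> float:
    """Lower bound on round-trip time from propagation delay alone (ignores queuing, routing, compute)."""
    if distance_km < 0:
        raise ValueError("distance must be non-negative")
    return 2 * distance_km / FIBER_KM_PER_MS


if __name__ == "__main__":
    # Illustrative distances: a remote data center vs. nearby and on-site edge nodes.
    for label, km in [("distant data center", 1_500), ("metro edge node", 20), ("on-site edge node", 0.5)]:
        print(f"{label:>20}: >= {min_round_trip_ms(km):.3f} ms")
```

At 1,500 km the floor is already 15 ms per round trip; a vehicle moving at 100 km/h covers about 0.4 m in that time, before the model has even started running.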

The advantages of deploying edge nodes within decentralized AI infrastructure are multi-dimensional:

- Reduced Latency: By processing data near its source (think autonomous vehicles or industrial robotics), edge nodes minimize round-trip times to distant data centers. This is crucial for applications where milliseconds matter.

- Enhanced Privacy and Security: Sensitive information can be processed locally without ever leaving the premises. Blockchain integration further hardens data integrity and auditability.

- Scalable Resource Utilization: Rather than overprovisioning expensive centralized hardware, networks dynamically tap into underused resources across thousands (or millions) of devices – a principle central to DePIN (Decentralized Physical Infrastructure Networks) edge AI systems.
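The idea of dynamically tapping underused devices can be illustrated with a toy scheduler: each incoming task goes to the node with the most spare capacity, using a heap so each placement costs O(log n). The node names, the single abstract "capacity unit" per node, and the greedy most-headroom policy are assumptions for illustration; real networks weigh many more factors, such as latency, price, reputation, and hardware type.

```python
import heapq
from typing import Optional


def assign_tasks(spare: dict[str, int], tasks: list[tuple[str, int]]) -> dict[str, Optional[str]]:
    """Greedily place each (task_id, demand) on the node with the most spare capacity.

    Returns task_id -> node name, or None when no single node can currently fit the task.
    """
    # heapq is a min-heap, so store negated spare capacity to pop the node with the most headroom.
    heap = [(-cap, node) for node, cap in spare.items()]
    heapq.heapify(heap)
    placement: dict[str, Optional[str]] = {}
    for task_id, demand in tasks:
        if not heap:
            placement[task_id] = None  # no nodes have volunteered capacity
            continue
        neg_cap, node = heapq.heappop(heap)
        if -neg_cap >= demand:
            placement[task_id] = node
            heapq.heappush(heap, (neg_cap + demand, node))  # spare capacity shrinks by the demand
        else:
            # Even the node with the most headroom cannot fit this task; leave it unplaced.
            placement[task_id] = None
            heapq.heappush(heap, (neg_cap, node))
    return placement


if __name__ == "__main__":
    nodes = {"laptop": 4, "gateway": 2, "gpu-rig": 3}
    jobs = [("t1", 2), ("t2", 2), ("t3", 3)]
    print(assign_tasks(nodes, jobs))
```

Spreading work to the emptiest node keeps any single contributor's device from being saturated, which suits volunteers whose hardware is only partially idle.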

Pioneering Implementations in Action

The real-world impact of edge node-powered networks is best understood through leading examples:

- EdgeAI’s Decentralized Cloud Infrastructure: By orchestrating a global fleet of computers and servers through blockchain-based coordination (EdgeAI Documentation), EdgeAI enables both real-time inference at the edge and large-scale distributed training jobs.

- Society AI’s Inference Engine: Aiming to democratize access to powerful models, Society AI runs a community-operated network of more than 100,000 active nodes, opening participation to individuals with spare hardware while targeting enterprise-grade reliability.

- NodeGoAI: Going beyond simple compute sharing, NodeGoAI incentivizes users globally to contribute unused CPU/GPU cycles for diverse workloads including federated learning – all governed by transparent peer-to-peer protocols (Wikipedia overview).
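Federated learning is one of the workloads mentioned above, and its core aggregation step is simple enough to sketch. In federated averaging (FedAvg), each participant trains locally and shares only model parameters, which a coordinator combines weighted by local sample count, so raw data never leaves the device. Model weights are plain Python lists here and the function name is hypothetical; this is the textbook FedAvg rule, not NodeGoAI's documented protocol.

```python
def federated_average(updates: list[tuple[list[float], int]]) -> list[float]:
    """Combine (weights, num_local_samples) pairs into a sample-weighted average (FedAvg)."""
    if not updates:
        raise ValueError("need at least one client update")
    dim = len(updates[0][0])
    if any(len(w) != dim for w, _ in updates):
        raise ValueError("all clients must share the same model shape")
    total = sum(n for _, n in updates)
    if total <= 0:
        raise ValueError("total sample count must be positive")
    # Each client's contribution is proportional to how much data it trained on.
    return [sum(w[i] * n for w, n in updates) / total for i in range(dim)]


if __name__ == "__main__":
    clients = [([0.0, 1.0], 100), ([1.0, 0.0], 300)]
    print(federated_average(clients))  # -> [0.75, 0.25]
```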

This ecosystem is further enriched by ongoing research: frameworks like DRAGON enhance fault tolerance through predictive deep learning models; E-Tree Learning optimizes convergence speed on tree-structured device federations – both pushing the boundaries of what’s possible at the network’s edge.
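Tree-structured federations aggregate updates hierarchically: leaves report to intermediate nodes, which combine results and forward them upward, so no single coordinator has to talk to every device. The sketch below shows only that generic bottom-up pattern, using a hypothetical `Node` type and sample-weighted averaging; it does not reproduce E-Tree Learning's actual grouping or scheduling logic.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Node:
    """Hypothetical tree node: leaves hold a local model update; inner nodes aggregate children."""
    name: str
    weights: Optional[list[float]] = None  # set only on leaves
    samples: int = 0                        # local training samples (leaves only)
    children: list["Node"] = field(default_factory=list)


def aggregate(node: Node) -> tuple[list[float], int]:
    """Return (sample-weighted average weights, total samples) for the subtree rooted at `node`."""
    if not node.children:
        if node.weights is None or node.samples <= 0:
            raise ValueError(f"leaf {node.name} has no usable update")
        return node.weights, node.samples
    # Each inner node sees only its children's summaries, never raw leaf data.
    results = [aggregate(child) for child in node.children]
    total = sum(n for _, n in results)
    dim = len(results[0][0])
    avg = [sum(w[i] * n for w, n in results) / total for i in range(dim)]
    return avg, total
```

Because each level passes its sample count upward, the root's result equals a flat weighted average over all leaves, while each link in the tree carries only one summarized update.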

As adoption accelerates, the ripple effects across industries are profound. Enterprises no longer need to rely solely on hyperscale providers for AI model training or inference. Instead, they can tap into a distributed pool of compute that is as dynamic as the workloads themselves. This shift is especially relevant for sectors like healthcare, logistics, and smart cities, where data sovereignty and ultra-low latency are non-negotiable requirements.

“Edge nodes don’t just supplement centralized clouds; they’re redefining the economics and accessibility of AI.”

Consider the implications for privacy-first applications: decentralized AI infrastructure can keep sensitive data local, processing it directly on POP (Point of Presence) edge nodes rather than sending it across global networks. This model is already being applied in medical diagnostics, financial analytics, and industrial IoT deployments, where both compliance and real-time insight are mission-critical.
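One way to keep sensitive data local is to treat jurisdiction as a hard constraint when routing a request, and only then optimize for latency. In this sketch the `PopNode` fields, the region codes, and the "fail closed" behavior (raise an error rather than fall back to an out-of-region node) are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PopNode:
    """Hypothetical Point-of-Presence edge node description."""
    node_id: str
    region: str          # jurisdiction the node operates in, e.g. "EU"
    latency_ms: float    # measured latency from the client
    healthy: bool = True


def route_request(nodes: list[PopNode], data_region: str) -> PopNode:
    """Pick the lowest-latency healthy node inside the data's home region.

    Fails closed: if no compliant node is available, raise instead of sending data elsewhere.
    """
    candidates = [n for n in nodes if n.healthy and n.region == data_region]
    if not candidates:
        raise LookupError(f"no healthy edge node available in region {data_region!r}")
    return min(candidates, key=lambda n: n.latency_ms)
```

Filtering before ranking means a faster node in another jurisdiction can never win, which is the property a compliance review would care about.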

Challenges and Evolving Solutions

No technology revolution comes without hurdles. Decentralized AI compute networks must continually address issues such as network reliability, heterogeneous hardware capabilities, power management, and incentive alignment for node operators. The DRAGON framework’s predictive fault tolerance and E-Tree Learning’s decentralized convergence are just two examples of how research is meeting these challenges head-on.
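The DRAGON framework relies on predictive deep learning models. As a far simpler stand-in that illustrates the same idea of acting before a node fails outright, the sketch below tracks an exponentially weighted moving average (EWMA) of each node's missed heartbeats and flags nodes whose score crosses a threshold, so a scheduler could migrate their work early. The class name, smoothing factor, and threshold are hypothetical, untuned choices, and this heuristic is not the DRAGON method.

```python
class HeartbeatMonitor:
    """Flag edge nodes that look likely to fail, using an EWMA of missed heartbeats.

    A simple heuristic stand-in for predictive fault tolerance; alpha and threshold are illustrative.
    """

    def __init__(self, alpha: float = 0.3, threshold: float = 0.5) -> None:
        if not 0.0 < alpha <= 1.0:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha
        self.threshold = threshold
        self.risk: dict[str, float] = {}

    def record(self, node: str, heartbeat_received: bool) -> float:
        """Update and return the node's risk score (0 = always on time, 1 = always missing)."""
        missed = 0.0 if heartbeat_received else 1.0
        prev = self.risk.get(node, 0.0)
        # Recent heartbeats count more; older behavior decays geometrically.
        self.risk[node] = self.alpha * missed + (1 - self.alpha) * prev
        return self.risk[node]

    def at_risk(self) -> list[str]:
        """Nodes whose work a scheduler might proactively move elsewhere."""
        return sorted(n for n, r in self.risk.items() if r >= self.threshold)
```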

Another critical consideration is interoperability, ensuring that diverse edge devices can seamlessly participate in global compute pools regardless of vendor or hardware generation. Open standards and cross-chain blockchain protocols will be key to unlocking the full potential of this ecosystem.
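Interoperability often comes down to a shared, vendor-neutral way for nodes to advertise what they can do and for jobs to state what they need. The field names, accelerator classes, and runtime labels below are hypothetical, not part of any existing standard: nodes publish normalized capabilities, and jobs are matched against those fields rather than against vendor-specific hardware names.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Capabilities:
    """Hypothetical vendor-neutral capability descriptor a node could advertise."""
    accelerator: str                 # normalized class, e.g. "cpu", "gpu", or "npu"
    memory_gb: float
    runtimes: frozenset[str] = field(default_factory=frozenset)  # e.g. {"onnx", "wasm"}


@dataclass(frozen=True)
class JobRequirements:
    accelerators: frozenset[str]     # any of these accelerator classes is acceptable
    min_memory_gb: float
    runtime: str


def compatible(node: Capabilities, job: JobRequirements) -> bool:
    """A node qualifies if it meets every requirement, regardless of vendor or hardware generation."""
    return (
        node.accelerator in job.accelerators
        and node.memory_gb >= job.min_memory_gb
        and job.runtime in node.runtimes
    )


def eligible_nodes(nodes: dict[str, Capabilities], job: JobRequirements) -> list[str]:
    """Names of all advertised nodes that can run the job, sorted for stable output."""
    return sorted(name for name, caps in nodes.items() if compatible(caps, job))
```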

The Road Ahead: From Niche to Norm

The momentum behind edge node-powered decentralized AI isn’t slowing down. Networks like EdgeAI and Society AI are scaling rapidly, with new entrants using tokenized incentives to attract both individual contributors and enterprise partners. As more developers build applications optimized for DePIN edge AI architectures, and as regulatory clarity improves, mainstream adoption of this model looks increasingly likely, supported by three structural strengths:

- Scalability: Community-driven growth means networks become stronger as participation increases.

- Sustainability: By putting idle resources to work, these networks can reduce energy waste compared with building and running additional capacity in centralized data centers.

- Resilience: Distributed topologies offer natural protection against outages or targeted attacks.
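The resilience claim can be quantified with a simple model. If a task is replicated on n independent nodes, each available with probability p, and the result is usable when at least k replicas respond, availability is a binomial tail sum. Independence is a strong simplifying assumption (correlated outages, such as a shared power or network failure, break it), and the uptime figure below is illustrative.

```python
from math import comb


def replicated_availability(n: int, k: int, p: float) -> float:
    """P(at least k of n independent replicas are up), each up with probability p."""
    if not (0 <= k <= n) or not (0.0 <= p <= 1.0):
        raise ValueError("require 0 <= k <= n and 0 <= p <= 1")
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


if __name__ == "__main__":
    # Illustrative: individually unreliable edge nodes (90% uptime) with small replica sets.
    for n, k in [(1, 1), (3, 1), (3, 2), (5, 3)]:
        print(f"n={n}, k={k}: {replicated_availability(n, k, 0.9):.5f}")
```

With 90% per-node uptime, three replicas where any one suffices reach 99.9% availability, and a 3-of-5 quorum reaches about 99.1%, which is the intuition behind distributed topologies resisting outages.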

The convergence of blockchain transparency with cutting-edge distributed learning frameworks provides a solid foundation for trustless collaboration at scale. As highlighted by recent research (DRAGON framework, E-Tree Learning), continual innovation at the intersection of computer science, cryptography, and economics will drive further advances in reliability and performance.


Final Thoughts: A New Era for Decentralized Compute

If we take a step back, it’s clear that edge nodes are not simply technical components; they represent a philosophical shift toward shared infrastructure and open participation in the future of intelligence. Whether you’re an innovator building next-generation applications or an investor seeking exposure to scalable infrastructure plays, understanding how POP edge nodes underpin DePIN edge AI is essential.

The coming years will see even greater collaboration between open-source communities, hardware manufacturers, protocol designers, and enterprises, all united by the promise of more equitable access to powerful computation. For those willing to engage early with this paradigm shift, the opportunities are as distributed as the networks themselves.