Imagine harnessing the raw power of Llama models across a global network of GPUs, all incentivized by crypto rewards and running on Bittensor’s buzzing subnets. Right now, with Bittensor’s TAO token trading at $165.45, up $3.62 in the last 24 hours, interest in decentralized AI compute is running high. Deploying Llama on Bittensor subnets isn’t just a tech flex; it’s a game-changer for how decentralized AI compute gets deployed. We’re talking scalable inference without the centralized bottlenecks, where miners compete to serve your prompts fastest and smartest.

This uptick in TAO reflects the exploding interest in DePIN AI subnets. Bittensor’s architecture slices the AI workload into subnets, each specialized for tasks like pretraining or inference. Subnet 9, for example, has cooked up 700 million and 7 billion parameter models that smoke GPT-2 large and Falcon-7B. Tools like RedCoast are automating distributed training across GPUs and TPUs, making it far simpler to deploy Llama the Bittensor way.

Bittensor’s Subnet Magic: Powering Llama at Scale

At its core, Bittensor flips the script on traditional cloud deployments. Forget spinning up pricey EC2 instances for Llama 3.2 1B or wrestling with Llama 3.3 70B on centralized clouds. Bittensor subnets let you distribute the load. Miners run the models, validators score their outputs, and the protocol doles out TAO emissions based on quality. It’s pure incentive alignment: better responses mean more rewards.
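To make that incentive loop concrete, here is a minimal Python sketch, assuming a simple proportional split: each miner’s share of an emission equals its validator score divided by the sum of all scores. The real protocol aggregates weights from many validators through its consensus mechanism, so the function name `split_emission`, the miner labels, and the numbers are illustrative assumptions rather than Bittensor’s actual formula.

```python
from typing import Dict


def split_emission(scores: Dict[str, float], emission: float) -> Dict[str, float]:
    """Split `emission` across miners in proportion to their scores.

    Simplifying assumption: one validator, non-negative scores, and a purely
    proportional payout. This is an illustration, not Bittensor's consensus.
    """
    if any(s < 0 for s in scores.values()):
        raise ValueError("scores must be non-negative")
    total = sum(scores.values())
    if total == 0:
        # Nobody produced a useful answer, so nobody is paid.
        return {miner: 0.0 for miner in scores}
    return {miner: emission * s / total for miner, s in scores.items()}


if __name__ == "__main__":
    # Hypothetical quality scores a validator assigned to three miners.
    scores = {"miner_a": 0.9, "miner_b": 0.6, "miner_c": 0.0}
    for miner, reward in split_emission(scores, emission=1.0).items():
        print(f"{miner}: {reward:.3f} TAO")
```

Even in this toy version the key property shows up: a miner that scores zero earns nothing, and improving your score raises your share only relative to everyone else’s.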

Take the pretraining subnet; it’s rewriting LLM development by crowdsourcing massive compute. As TAO holds steady at $165.45, with a 24-hour high of $179.79, projects like Taoillium are guiding devs to testnets for subnet creation. This isn’t hype; it’s happening now, democratizing access to frontier models.

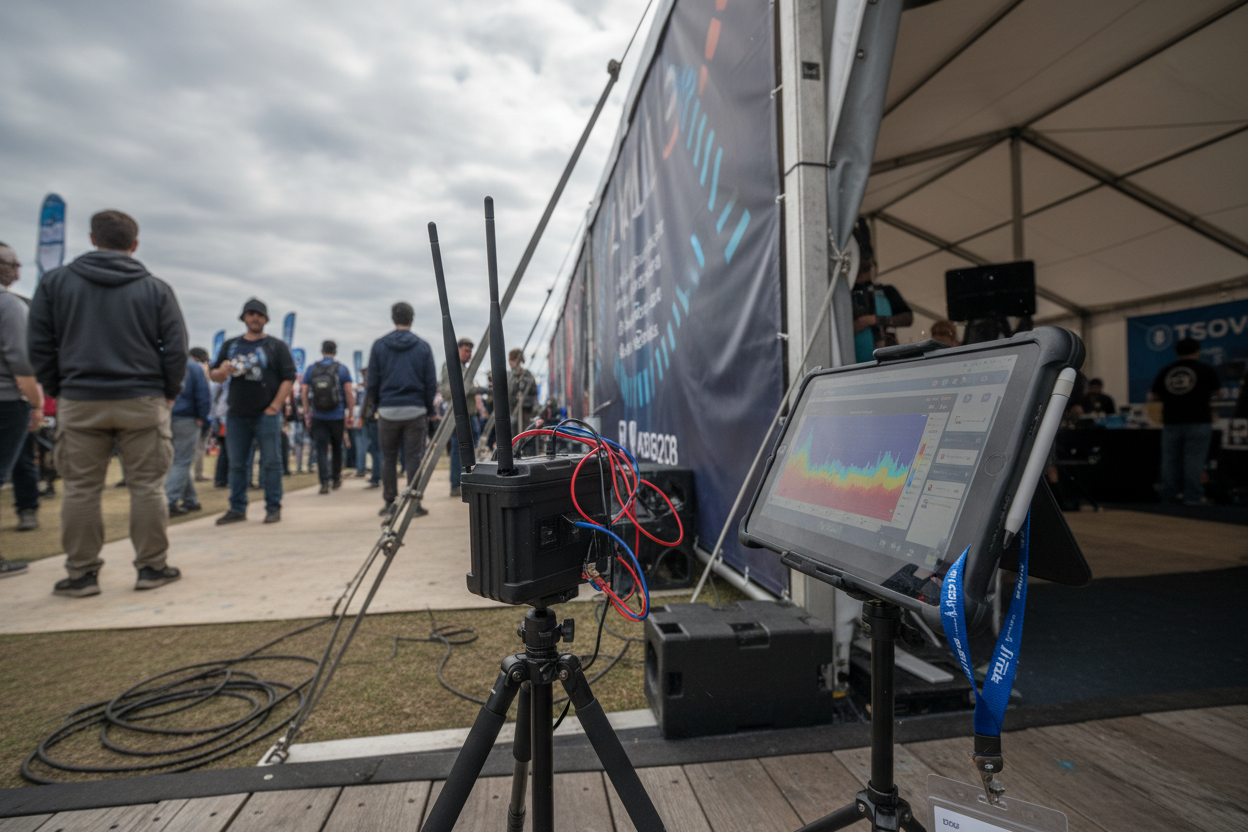

Prepping Your Rig for Bittensor AI Inference

Before diving into Bittensor AI inference, nail your setup. Start local: fire up a local subtensor blockchain as described in the Bittensor docs. This sandbox lets you create subnets without burning real TAO. Install the Bittensor CLI (btcli), sync the chain, and register your subnet. Pro tip: use testnet first, as in the taoillium GitHub guide, to avoid costly mishaps.

Hardware-wise, aim for GPUs with at least 16GB VRAM for smaller Llamas. NVIDIA A100s or RTX 4090s shine here. Software stack? Python 3.10 or later, PyTorch, the Transformers library, and the bittensor package from Opentensor. Clone a starter subnet repo, tweak the miner script to load Llama via Hugging Face, and you’re off.
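As a rough check on the 16GB guideline, the sketch below estimates how much memory the weights alone need at a given precision. It assumes decimal gigabytes and ignores the KV cache, activations, and framework overhead, all of which add more on top; the function name `weight_memory_gb` and the nominal parameter counts are illustrative assumptions.

```python
def weight_memory_gb(n_params: float, bits_per_param: int) -> float:
    """Approximate memory for model weights only, in decimal gigabytes.

    Assumption: every parameter is stored at `bits_per_param` bits. Real
    usage is higher once the KV cache and activations are included.
    """
    if n_params <= 0 or bits_per_param <= 0:
        raise ValueError("n_params and bits_per_param must be positive")
    return n_params * bits_per_param / 8 / 1e9


if __name__ == "__main__":
    # Nominal parameter counts (1B, 8B, 70B) at 16-bit and 4-bit precision.
    for name, n in [("1B", 1e9), ("8B", 8e9), ("70B", 70e9)]:
        fp16 = weight_memory_gb(n, 16)
        int4 = weight_memory_gb(n, 4)
        print(f"{name}: ~{fp16:.1f} GB at 16-bit, ~{int4:.1f} GB at 4-bit")
```

By this estimate an 8B model at 16-bit already fills a 16GB card with its weights alone, which is why lower precision matters for anything beyond the smallest Llamas.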

Validate your setup by running a local miner-validator duo. Score some dummy prompts and watch the weights flow. This mirrors production: miners respond to validator challenges, earning emissions if they top the leaderboard.

Time to go live. Pick an existing subnet or forge your own. For Llama deployments, join or create one tuned for text generation. Register with btcli: stake some TAO (current price $165.45, so even small amounts pack a punch), get your netuid, and register your hotkey. In your miner code, integrate Llama’s instruct variant. Quantize to 4-bit with bitsandbytes for efficiency; Bittensor loves lean runners. Expose an axon (Bittensor’s miner-side server) or a FastAPI endpoint for inference, but pipe it through Bittensor’s synapse protocol. Validators will ping you with prompts; respond in JSON with logits or completions.

TAO price forecasts for 2027-2032 are driven by subnet growth, DePIN adoption, and decentralized AI compute via Llama model deployments (baseline: $165.45 in early 2026). Bittensor (TAO) is projected to grow strongly over that period, with average prices rising from $350 to $4,000 (CAGR ~63%), propelled by decentralized AI innovations like Llama deployments on subnets. Minimums account for bearish scenarios (e.g., regulatory setbacks, market downturns), while maximums reflect bullish adoption in DePIN and AI compute demand. Disclaimer: cryptocurrency price predictions are speculative and based on current market analysis.

Monitor with btcli wallet commands and dendrite queries. Tweak hyperparameters: temperature for creativity, top-p for focus. As your miner ranks up, emissions kick in, turning compute into crypto. Subnet 9’s success hints at what’s possible: its decentralized models already beat baselines like GPT-2 large and Falcon-7B.

RedCoast simplifies multi-node orchestration, auto-scaling across contributor GPUs. Pair it with Bittensor for hybrid training-inference subnets. The result? Llama models that scale with the network, powered by global DePIN muscle. But let’s get hands-on.
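As a first hands-on step, it helps to see what the temperature and top-p knobs mentioned above actually do to a token distribution. The following is a minimal pure-Python sketch of temperature scaling followed by nucleus (top-p) sampling over a toy list of logits. The function name `sample_top_p` and the example logits are assumptions for illustration; a real miner would pass the equivalent settings to its inference library rather than sampling by hand.

```python
import math
import random
from typing import List, Optional


def sample_top_p(logits: List[float], temperature: float = 1.0,
                 top_p: float = 0.9, rng: Optional[random.Random] = None) -> int:
    """Sample a token index using temperature scaling and top-p filtering."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    if not 0 < top_p <= 1:
        raise ValueError("top_p must be in (0, 1]")
    rng = rng or random.Random()

    # Temperature-scaled softmax; subtract the max for numerical stability.
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    probs = [e / total for e in exps]

    # Keep the smallest set of most-likely tokens whose mass reaches top_p.
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, mass = [], 0.0
    for i in order:
        kept.append(i)
        mass += probs[i]
        if mass >= top_p:
            break

    # Sample from the kept tokens; random.choices renormalizes the weights.
    return rng.choices(kept, weights=[probs[i] for i in kept], k=1)[0]


if __name__ == "__main__":
    toy_logits = [2.0, 1.0, 0.5, -1.0]  # hypothetical scores for 4 tokens
    rng = random.Random(0)
    picks = [sample_top_p(toy_logits, temperature=0.7, top_p=0.9, rng=rng)
             for _ in range(10)]
    print(picks)
```

Lower temperature sharpens the distribution toward the top token, while a smaller top-p trims the unlikely tail before sampling, which is the "creativity versus focus" trade-off in practice.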
Launching Your Llama Miner on a Live Subnet

Deploying your first Llama miner demands precision, blending Bittensor’s protocol with Hugging Face smarts. Validators aren’t forgiving; subpar responses tank your rankings and TAO flow. I’ve seen miners skyrocket emissions by fine-tuning quantization and response caching.

Once live, optimization is king in DePIN AI subnets. Benchmark your Llama against subnet leaders: aim for sub-500ms latency on 1B models. Use vLLM for batched inference or TensorRT-LLM for NVIDIA speed demons. Stake more TAO at $165.45 to boost your hotkey’s influence, but diversify across validators to hedge.

Challenges? Network latency can bite in cross-continental pings, but Bittensor’s scoring favors consistent quality over raw speed. Power costs vary; US miners might edge EU ones, yet global DePIN evens the field. Tools like Dendrite dashboards reveal hotkeys crushing it, inspiring tweaks. Subnet 9’s 7B model, outpacing Falcon-7B, shows what’s possible when incentives align compute hordes.

Compare this to AWS EC2 drudgery for Llama 3.2 1B or DataCamp’s cloud hacks for 70B beasts. Centralized setups rack up bills; Bittensor miners earn while serving. Taoillium’s testnet runs prove you can prototype subnets sans real stakes, scaling to production where TAO’s 24-hour range from $161.70 to $179.79 mirrors the volatility fueling innovation.

Pretraining subnets add rocket fuel, crowdsourcing datasets and flops for custom Llamas. Macrocosmos.ai nails it: blockchain incentives flip pretraining economics. Enterprises eye Bittensor for compliant, auditable AI; no black-box clouds here.

“Bittensor isn’t just compute; it’s a conviction engine for AI builders.”

As TAO trades at $165.45, up a crisp $3.62 today, write-ups like Sagarregmi’s Medium deep-dive highlight the protocol’s guts: miners, validators, weights dancing in harmony. Local subtensor spins let you iterate fast, graduating to testnets per GitHub wisdom.

Future-proof your stack with dTAO upgrades from tao.media’s 2026 blueprint. Emissions shift to a demand-driven model, prioritizing hot subnets. Llama deployments will evolve: multimodal variants, agentic workflows, all decentralized. RedCoast’s TPU bridging teases hybrid futures, smashing inference ceilings.

Dive in now. Fire up that miner, stake your TAO slice, and watch decentralized AI compute transform from buzzword to balance-booster. With Bittensor’s momentum, deploying Llama on Bittensor isn’t optional; it’s the new standard for scalable smarts.

Bittensor (TAO) Price Prediction 2027-2032

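| Year | Minimum Price (USD) | Average Price (USD) | Maximum Price (USD) |
|------|---------------------|---------------------|---------------------|
| 2027 | $100 | $350 | $800 |
| 2028 | $200 | $600 | $1,500 |
| 2029 | $400 | $1,000 | $2,500 |
| 2030 | $600 | $1,600 | $4,000 |
| 2031 | $1,000 | $2,500 | $6,000 |
| 2032 | $1,500 | $4,000 | $10,000 |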
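The roughly 63% CAGR quoted for the average-price column can be sanity-checked from the 2027 and 2032 figures. The sketch below is plain arithmetic on those two numbers, assuming five annual compounding steps between them; the function name `cagr` is just a label for the standard formula, and nothing here validates the forecast itself.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate between two values `years` apart."""
    if start <= 0 or end <= 0 or years <= 0:
        raise ValueError("start, end and years must be positive")
    return (end / start) ** (1 / years) - 1


if __name__ == "__main__":
    # Average-price column: $350 in 2027 and $4,000 in 2032, 5 annual steps.
    rate = cagr(350, 4000, years=2032 - 2027)
    print(f"Implied CAGR: {rate:.1%}")
```

The result lands just under 63%, so the quoted figure is internally consistent with the table’s own endpoints.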

Actual prices may vary significantly due to market volatility, regulatory changes, and other factors.

Always do your own research before making investment decisions.

Step-by-Step Llama Launch: From Code to Crypto Rewards

Real-World Wins: Bittensor’s Edge Over Clouds