The landscape of artificial intelligence compute is undergoing a seismic shift. For years, centralized cloud giants have dictated the pace and price of AI model inference, leading to high costs, opaque data handling, and limited accessibility. Now, decentralized LLM inference networks are rewriting these rules. At the center of this revolution stands DecentralGPT, a project that exemplifies how distributed GPU infrastructure and blockchain-powered coordination are making AI more scalable, private, and affordable for all.

From Centralized Giants to Distributed Intelligence

AI inference has traditionally been dominated by platforms like AWS and Google Cloud. While reliable, these services come with significant drawbacks: persistent high costs, single points of failure, and increasing concerns over data privacy. DecentralGPT breaks this mold by leveraging a global network of independent GPU operators. Instead of mining for wasteful proof-of-work rewards, these GPUs earn DGC tokens by running real-world LLM inference tasks.

This new paradigm is built on DePIN (Decentralized Physical Infrastructure Networks) principles. By distributing workloads across thousands of nodes worldwide, DecentralGPT not only slashes operational costs – reportedly by up to 80% compared to traditional clouds – but also ensures that no single entity can monopolize access or control over user data.

How DecentralGPT Works: Architecture and Incentives

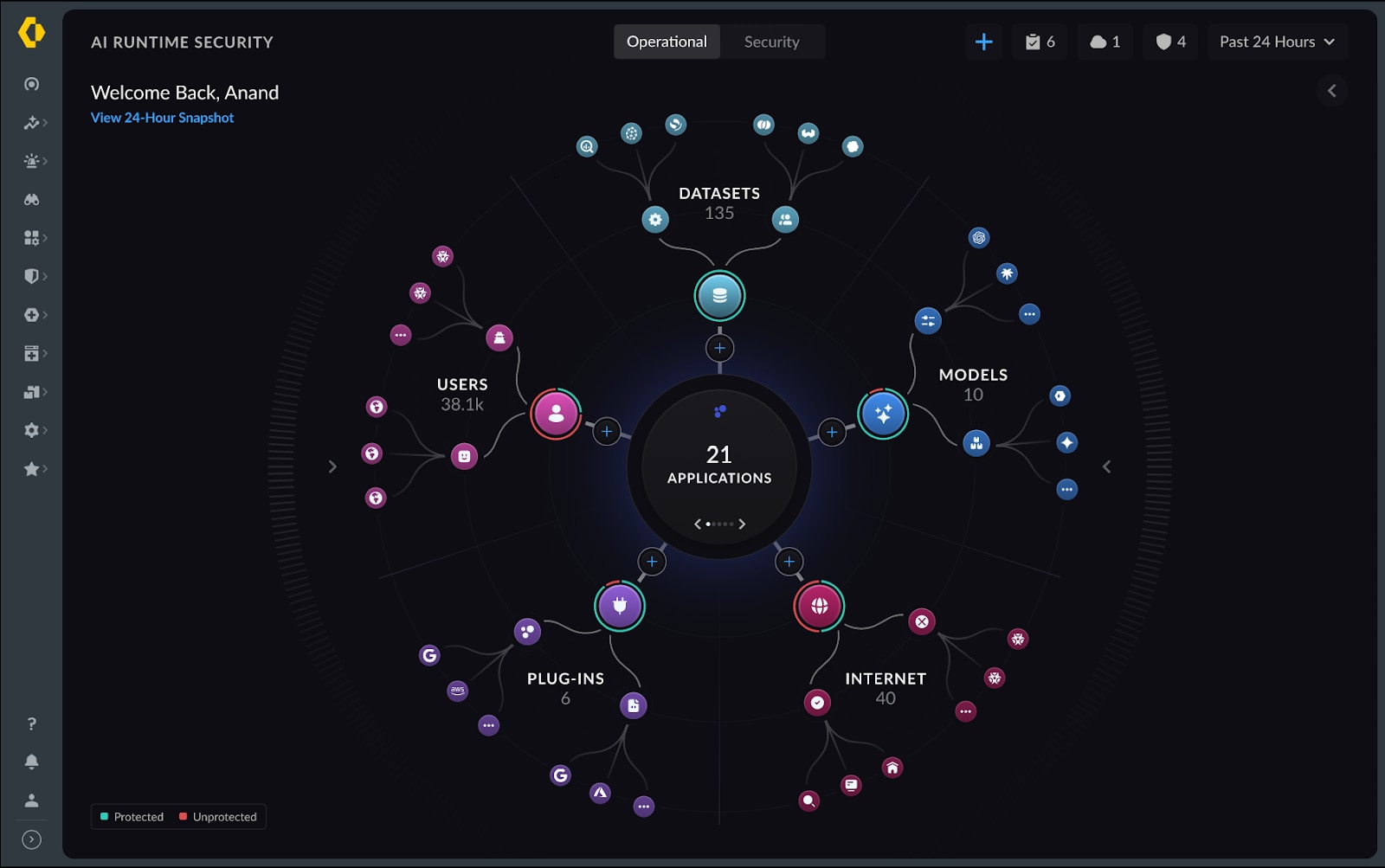

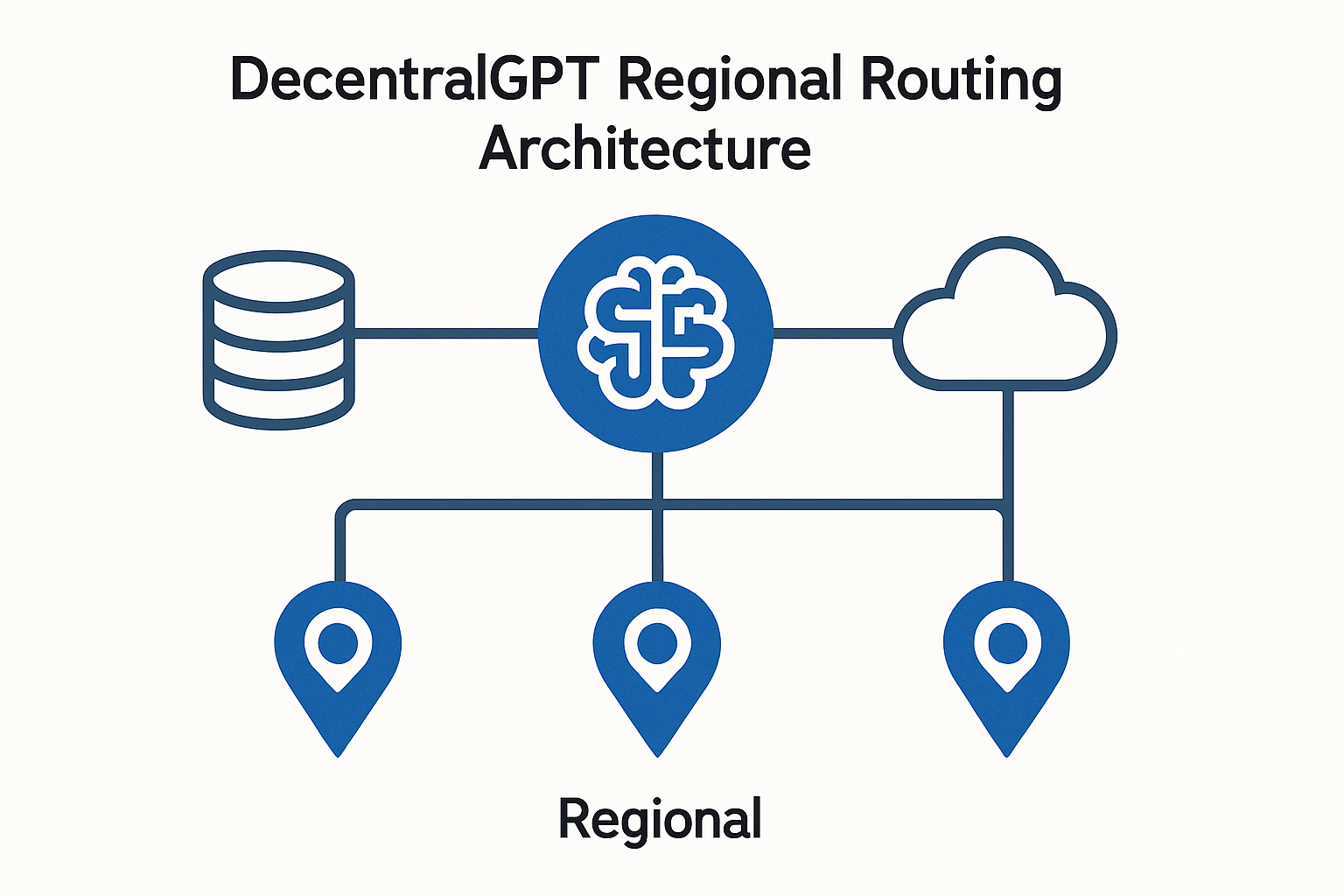

The technical backbone of DecentralGPT is elegantly simple yet robust. Users interact through an encrypted interface to submit LLM queries. A distributed coordinator then matches requests with available compute nodes based on location, load balancing needs, and model compatibility. This architecture ensures both low latency (by routing tasks regionally) and high throughput, even at scale.
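The matching step can be illustrated with a minimal sketch. The source does not publish the coordinator's actual algorithm, so everything here is an assumption made for illustration: the `Node` fields, the `pick_node` function, the model and region names, and the ranking rule (same region first, then lowest load).

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Node:
    """Hypothetical view of a compute node as a coordinator might track it."""
    node_id: str
    region: str
    models: frozenset[str]   # models this node can serve
    active_jobs: int         # jobs currently running
    capacity: int            # maximum concurrent jobs

    @property
    def load(self) -> float:
        return self.active_jobs / self.capacity


def pick_node(nodes: list[Node], model: str, user_region: str) -> Optional[Node]:
    """Return the best node for a request, or None if no node qualifies."""
    # Model compatibility and spare capacity are hard requirements.
    candidates = [
        n for n in nodes
        if model in n.models and n.active_jobs < n.capacity
    ]
    if not candidates:
        return None
    # Prefer the user's region (False sorts before True), then the least
    # loaded node; node_id breaks ties so the choice is deterministic.
    return min(
        candidates,
        key=lambda n: (n.region != user_region, n.load, n.node_id),
    )


nodes = [
    Node("us-1", "us", frozenset({"deepseek-v3"}), active_jobs=3, capacity=4),
    Node("us-2", "us", frozenset({"deepseek-v3", "deepseek-r1"}), active_jobs=1, capacity=4),
    Node("uk-1", "uk", frozenset({"deepseek-r1"}), active_jobs=0, capacity=4),
]

chosen = pick_node(nodes, model="deepseek-v3", user_region="us")
print(chosen.node_id if chosen else "no node available")
```

In this sketch, region preference outranks load, so a busy local node beats an idle remote one. A real coordinator would more likely weigh measured latency against queue depth rather than use a strict ordering.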

Crucially, all data remains end-to-end encrypted throughout the process. This means sensitive information never sits unprotected on any single server – a sharp contrast to most centralized AI services.

Key Advantages of Decentralized LLM Inference Networks

- Significantly Lower AI Inference Costs: Platforms like DecentralGPT leverage a global network of distributed GPU miners, reducing AI inference costs by up to 80% compared to centralized cloud providers such as AWS and Google Cloud.
- Enhanced Data Privacy and Security: Decentralized LLM inference networks utilize end-to-end encryption and distribute processing across multiple nodes, ensuring that user data is never stored in a single location and remains protected throughout the inference process.
- Reduced Latency Through Regional Routing: By routing inference tasks to geographically proximate GPU nodes, networks like DecentralGPT minimize latency, resulting in faster response times and improved user experiences across regions.
- Greater Scalability and Network Resilience: Decentralized architectures connect thousands of GPU miners worldwide, allowing for dynamic scaling of compute resources and increased resilience against single points of failure.
- Open Ecosystem for Model Developers: DecentralGPT supports both open-source and closed-source LLMs, enabling developers to submit and monetize their models while fostering a diverse and rapidly evolving AI ecosystem.
- Incentivized Participation via Token Rewards: GPU operators earn DGC tokens for contributing compute power, creating a robust incentive structure that attracts and retains high-quality node operators.

The incentive layer is equally vital: GPU operators contribute compute power in exchange for DGC tokens. This creates a dynamic marketplace where supply scales with demand and participants are directly rewarded for supporting real AI workloads rather than arbitrary cryptographic puzzles.
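To make the "rewarded for real workloads" idea concrete, here is a minimal sketch of a pro-rata payout. The source does not describe how DGC rewards are actually calculated, so the `split_rewards` function, the fixed per-epoch pool, and the "units of work" measure are all hypothetical.

```python
def split_rewards(epoch_pool: int, work_done: dict[str, int]) -> dict[str, int]:
    """Split a token pool among operators in proportion to completed work.

    epoch_pool: tokens available for this payout period (hypothetical).
    work_done:  operator id -> units of inference work completed,
                for example output tokens served (hypothetical measure).
    """
    total = sum(work_done.values())
    if total == 0:
        # No work was done, so nothing is paid out.
        return {operator: 0 for operator in work_done}
    # Integer floor division keeps payouts in whole token units; any
    # rounding remainder simply stays undistributed in this sketch.
    return {
        operator: epoch_pool * units // total
        for operator, units in work_done.items()
    }


payouts = split_rewards(1000, {"op-a": 600, "op-b": 300, "op-c": 100})
print(payouts)  # {'op-a': 600, 'op-b': 300, 'op-c': 100}
```

Under a rule like this, an operator's income follows the inference work it completes, not hash power or idle uptime, which is the alignment the paragraph above describes.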

A Diverse Model Ecosystem and Open Participation

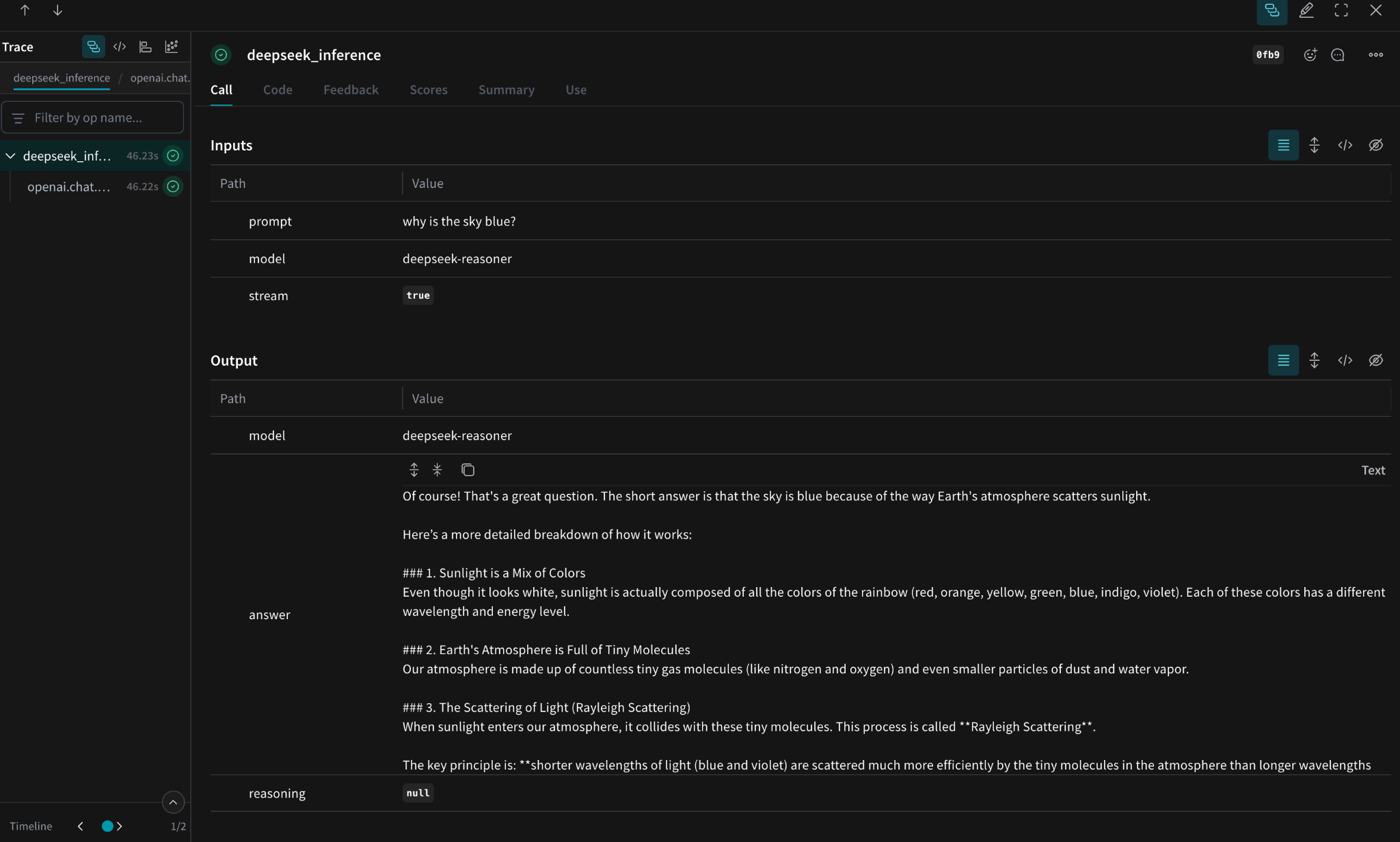

DecentralGPT supports multiple open-source LLMs including DeepSeek V3 and DeepSeek R1, giving users flexibility in choosing the right model for their application or research needs. Model developers can submit their own architectures to the platform as well, fostering innovation and ecosystem diversity beyond what walled-garden providers allow.

This approach not only democratizes access but also encourages experimentation with both open- and closed-source models within a secure environment powered by decentralized infrastructure.

As decentralized LLM inference networks mature, their real-world impact is becoming clear. For enterprises and developers, platforms like DecentralGPT offer a compelling alternative to traditional cloud AI. The ability to tap into a distributed GPU network means not only lower costs but also greater resilience against outages and censorship. DecentralGPT’s regional routing, already live in the USA, with UK expansion underway, demonstrates how geographic proximity reduces latency for users worldwide.

“We’re seeing a new standard for AI compute: borderless, permissionless, and privacy-first.”

The implications for data privacy are especially significant. Since no single node ever has full visibility into user queries or model outputs, the risk of centralized data leaks is dramatically reduced. For industries with strict compliance requirements, such as finance, healthcare, and legal services, this architecture provides a much-needed layer of assurance that simply isn’t possible with legacy cloud models.

Economic Shifts and Tokenized Incentives

The economic incentives behind DecentralGPT are transforming the very nature of GPU mining. Instead of burning electricity on arbitrary proof-of-work computations, participants now earn DGC tokens by running high-value LLM inference workloads. This shift not only makes better use of global hardware resources but also aligns operator rewards directly with real-world demand for AI services.

For token holders and miners alike, this creates a robust feedback loop: as demand for decentralized AI compute grows, so too does the utility, and potentially the value, of DGC tokens. This dynamic is already attracting both individual GPU owners and professional mining operations seeking to diversify beyond traditional crypto mining.

Top Use Cases Enabled by Decentralized LLM Inference Networks

- Cost-Effective AI Model Deployment: Platforms like DecentralGPT enable organizations to deploy large language models (LLMs) at up to 80% lower cost compared to traditional centralized cloud providers, making advanced AI accessible to startups and enterprises alike.
- Privacy-Preserving AI Applications: With end-to-end encryption and decentralized processing, user data remains confidential and is never stored in a single location, supporting privacy-sensitive use cases such as healthcare, finance, and legal AI solutions.
- Real-Time Regional AI Services: Decentralized LLM inference networks offer regional routing, allowing AI tasks to be processed on geographically proximate GPU nodes. This reduces latency and enables responsive AI-powered chatbots, virtual assistants, and translation services.
- Open-Source Model Ecosystem: Platforms like DecentralGPT support a wide range of open-source LLMs (e.g., DeepSeek V3, DeepSeek R1), empowering developers to select, customize, and deploy models tailored to diverse applications.
- Incentivized GPU Resource Sharing: By rewarding GPU operators with DGC tokens, decentralized networks encourage global participation, expanding available compute resources and supporting scalable, community-driven AI infrastructure.

Challenges Ahead, and Why They Matter

No technology is without its hurdles. Decentralized LLM inference still faces challenges around network coordination, quality assurance across heterogeneous hardware, and fair compensation for node operators. Yet continued upgrades to DecentralGPT’s distributed coordinator and encrypted communication protocols suggest that these obstacles are being actively addressed.

DecentralGPT’s documentation details how they’re leveraging DeepBrainChain’s infrastructure to strengthen reliability while maintaining decentralization at scale.

Ultimately, what sets decentralized AI compute DePINs apart isn’t just technical novelty; it’s their ability to unlock new forms of collaboration and innovation previously stifled by centralized gatekeepers. As more developers contribute models and more operators join the network, the positive feedback loop accelerates: better performance, lower costs, broader access.

The Road Ahead for Decentralized AI Infrastructure

The rise of projects like DecentralGPT signals a fundamental reordering in how we access and deploy artificial intelligence at scale. By combining blockchain-based incentives with distributed GPU sharing, these networks are making advanced LLM capabilities accessible far beyond Silicon Valley or Big Tech monopolies.

This transformation isn’t just about cost savings or technical efficiency; it’s about empowering individuals and organizations worldwide to build smarter applications while retaining control over their data. In an era where privacy concerns are escalating alongside demand for powerful AI tools, decentralized LLM inference networks represent not just an evolution in infrastructure but a necessary shift in digital sovereignty.