Decentralized AI compute networks are rapidly transforming the landscape of artificial intelligence, pushing innovation beyond the confines of centralized data centers. By distributing tasks across a permissionless global network, these systems promise not just enhanced scalability and efficiency, but also new standards for integrity and transparency in AI operations. As the DePIN (Decentralized Physical Infrastructure Networks) movement matures, the intersection of blockchain and AI is enabling verifiable inference and trustless coordination at scale.

Why Verifiable Inference Matters in Decentralized AI

At the heart of decentralized AI compute networks lies a critical challenge: ensuring that distributed computations are both correct and tamper-proof. Unlike traditional cloud setups, where users rely on a single provider’s assurances, DePIN infrastructure requires mechanisms that can independently verify every step of an AI model’s execution.

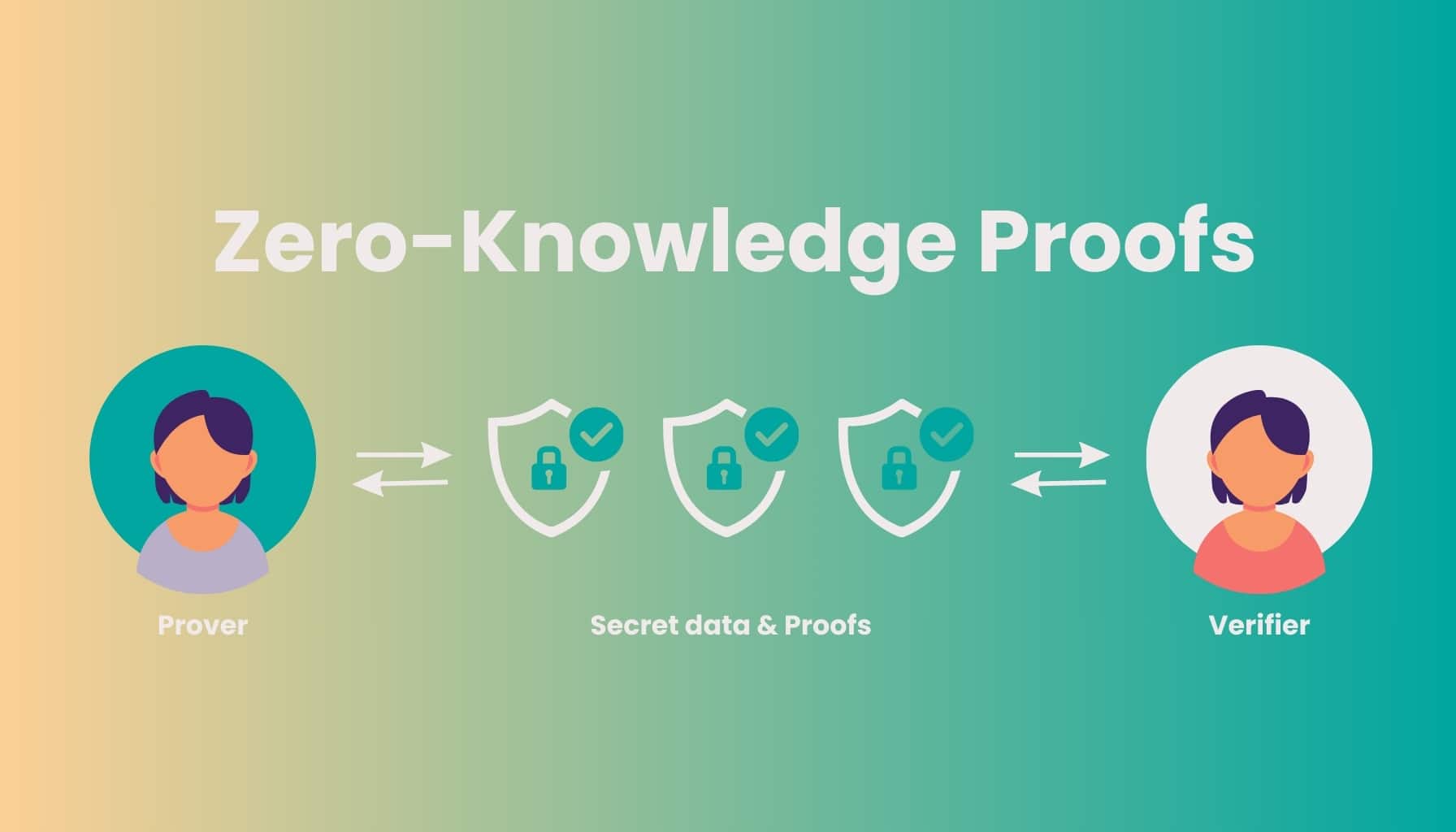

Zero-Knowledge Proofs (ZKPs) are emerging as a cornerstone technology for this purpose. ZKPs enable one party to mathematically prove to another that a computation was performed correctly without revealing any sensitive data or proprietary model weights. This cryptographic approach is pivotal for industries like healthcare or finance, where privacy and auditability must coexist. For more technical insight into how these proofs work in decentralized stacks, see this analysis on dev.to.
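To make the idea of proving something without revealing it concrete, here is a minimal sketch of a Schnorr proof of knowledge made non-interactive with the Fiat-Shamir heuristic. It is only an illustration of the zero-knowledge principle: it proves knowledge of a secret exponent, not the correct execution of an AI model, which in practice requires far heavier proof systems. The group parameters `P`, `Q`, `G` and the function names are assumptions chosen for readability, and the parameters are far too small to be secure.

```python
import hashlib
import secrets

# Toy parameters (assumption, insecure): G generates the subgroup of
# prime order Q inside the multiplicative group of integers modulo P.
P, Q, G = 23, 11, 2


def challenge(public_key: int, commitment: int) -> int:
    """Fiat-Shamir: derive the challenge by hashing the public transcript."""
    digest = hashlib.sha256(f"{G}:{public_key}:{commitment}".encode()).digest()
    return int.from_bytes(digest, "big") % Q


def prove(secret: int):
    """Return (public_key, commitment, response); `secret` itself is never sent."""
    public_key = pow(G, secret, P)
    nonce = secrets.randbelow(Q)  # fresh randomness for every proof
    commitment = pow(G, nonce, P)
    response = (nonce + challenge(public_key, commitment) * secret) % Q
    return public_key, commitment, response


def verify(public_key: int, commitment: int, response: int) -> bool:
    """Accept if G^response == commitment * public_key^challenge (mod P)."""
    c = challenge(public_key, commitment)
    return pow(G, response, P) == (commitment * pow(public_key, c, P)) % P


# The prover knows 7; the verifier sees only the three public values.
public_key, commitment, response = prove(7)
is_valid = verify(public_key, commitment, response)
```

The verifier learns that the prover knows the exponent behind `public_key` but never sees the exponent. Proving a full inference run follows the same prove/verify shape, with the statement being "this output came from this model on this input" instead of "I know this exponent".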

Trusted Execution Environments (TEEs) provide another layer of security by executing code within secure hardware enclaves. TEEs ensure that even if a node operator is malicious or compromised, the computation remains isolated and verifiable. Projects like Phala Network are pioneering TEE-powered decentralized compute infrastructure.

For model integrity checks across heterogeneous devices, techniques such as Locality Sensitive Hashing (LSH), including innovations like TOPLOC, allow networks to detect unauthorized modifications with high accuracy, even when models run on diverse hardware.
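As a rough illustration of why locality sensitive hashing suits this job, the sketch below uses random-hyperplane LSH (often called SimHash) over a vector of activations. This is a generic textbook scheme and not the TOPLOC algorithm; the vectors, the noise level, and every function name are assumptions made up for the example. The point it shows is that small numeric drift between devices leaves the signature nearly unchanged, while a substantially different output changes many bits.

```python
import math
import random


def make_hyperplanes(dim: int, bits: int, seed: int):
    """Shared random hyperplanes; all verifiers must derive them from the same seed."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(bits)]


def lsh_signature(vector, planes):
    """One bit per hyperplane: which side of the plane the vector falls on."""
    return [
        1 if sum(v * w for v, w in zip(vector, plane)) >= 0 else 0
        for plane in planes
    ]


def hamming(a, b) -> int:
    """Number of positions where two signatures differ."""
    return sum(x != y for x, y in zip(a, b))


planes = make_hyperplanes(dim=16, bits=64, seed=42)

# Hypothetical activations from a reference run of the model.
reference = [math.sin(i) + 2.0 for i in range(16)]
# Same model on different hardware: tiny floating-point drift.
noisy = [x + 1e-7 * (-1) ** i for i, x in enumerate(reference)]
# A very different output, standing in for a swapped or modified model.
tampered = [-x for x in reference]

sig_reference = lsh_signature(reference, planes)
sig_noisy = lsh_signature(noisy, planes)
sig_tampered = lsh_signature(tampered, planes)

drift_distance = hamming(sig_reference, sig_noisy)
tamper_distance = hamming(sig_reference, sig_tampered)
```

A network would accept a result when the Hamming distance to the reference signature stays under a threshold and flag it otherwise. Choosing that threshold is a tuning decision that depends on the model and hardware mix.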

Top 5 Technologies Powering Verifiable Inference in Decentralized AI

-

Zero-Knowledge Proofs (ZKPs): These advanced cryptographic protocols enable one party to prove the correctness of AI computations to another party without revealing underlying data. ZKPs ensure privacy and integrity, making AI inference results verifiable in a decentralized environment. Learn more.

-

Trusted Execution Environments (TEEs): TEEs are secure hardware enclaves that execute AI code in isolation, providing tamper-proof and verifiable computation. They ensure that models run as intended and prevent unauthorized access or modification. Learn more.

-

Proof-of-Compute Protocols: These mechanisms verify that computational tasks—such as AI model training or inference—are completed correctly. Contributors are rewarded only upon successful, proven work, ensuring trustless coordination. Learn more.

-

Decentralized Inference Machines (DIN): Systems like PAI3’s DIN distribute inference tasks across multiple models and environments, evaluate disagreements, assign trust scores, and log inference pathways for transparent, auditable AI outputs. Learn more.

-

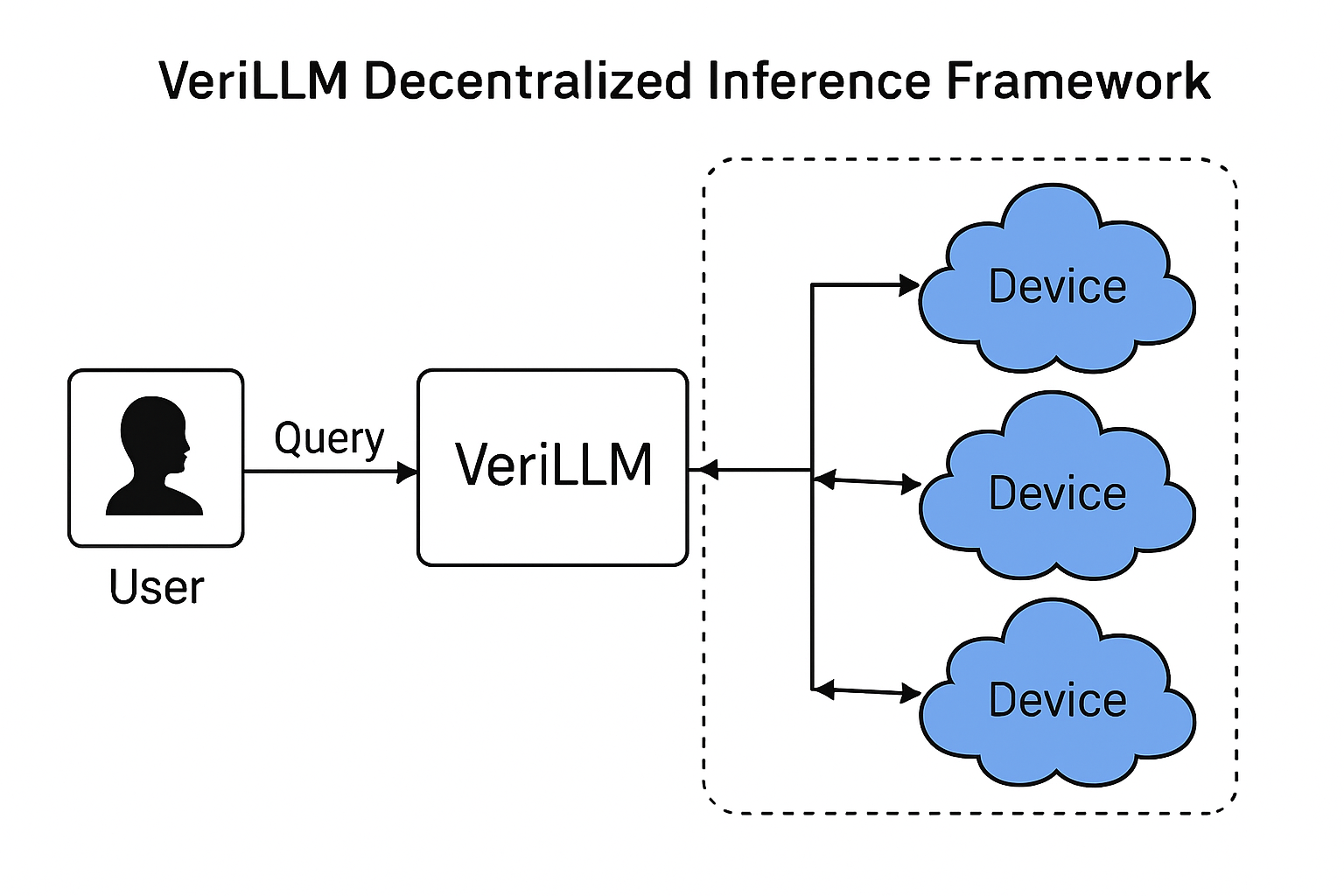

VeriLLM Framework: VeriLLM is a pioneering framework for publicly verifiable decentralized inference, achieving security under minimal trust assumptions and enabling efficient, auditable AI computations. Learn more.

The Mechanics of Trustless Coordination

The promise of open-state AI systems hinges not just on computation but on coordination: How do thousands of independent nodes agree on what work was done and who should be rewarded?

Proof-of-Compute mechanisms have become essential here. These protocols validate that computational tasks, whether training or inference, were completed honestly before any compensation is issued. This ensures economic incentives align with genuine contributions rather than wasted cycles or fake results. For those interested in technical details about proof-of-compute schemes for distributed model training, see this deep dive from Mitosis University.
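One simple way to picture "verify first, pay second" is spot-checking by recomputation: the verifier re-runs a random sample of the claimed tasks and releases the reward only if every sampled result matches. The sketch below assumes deterministic tasks and uses a hash as a stand-in workload. `run_task`, `settle`, and the reward figures are hypothetical names and values for illustration, not any specific network's protocol.

```python
import hashlib
import random


def run_task(task_input: int) -> str:
    """Stand-in for a deterministic workload such as a fixed-seed inference."""
    return hashlib.sha256(f"inference:{task_input}".encode()).hexdigest()


def settle(claims: dict, sample_size: int, reward_per_task: int, seed: int) -> int:
    """Recompute a random sample of claimed results; pay only if all match.

    `claims` maps task input -> result reported by the worker. In a real
    network the seed would have to come from randomness the worker cannot
    predict; here it is a plain argument (assumption).
    """
    rng = random.Random(seed)
    sampled = rng.sample(sorted(claims), min(sample_size, len(claims)))
    if all(run_task(task) == claims[task] for task in sampled):
        return reward_per_task * len(claims)
    return 0  # any mismatch in the sample forfeits the whole payout


honest_claims = {task: run_task(task) for task in range(5)}
faked_claims = {task: "0" * 64 for task in range(5)}

honest_payout = settle(honest_claims, sample_size=5, reward_per_task=10, seed=1)
faked_payout = settle(faked_claims, sample_size=5, reward_per_task=10, seed=1)
```

Sampling fewer tasks than were claimed lowers verification cost but also lowers the chance of catching a worker who fakes only a few results, so the sample size and the penalty for a mismatch have to be chosen together.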

Decentralized Inference Machines (DIN), such as those developed by PAI3, take things further by routing inference requests through multiple models and environments simultaneously. They evaluate disagreements between outputs to assign trust scores and maintain transparent logs, making every prediction traceable back through its execution path.
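The routing, scoring, and logging steps described above can be sketched in a few lines. This is not PAI3's implementation: the majority-vote rule, the binary trust score, the hash-chained log, and all names below are assumptions picked to keep the example small.

```python
import hashlib
import json
from collections import Counter


def aggregate(outputs: dict) -> dict:
    """Majority-vote the node outputs and score each node by agreement."""
    votes = Counter(outputs.values())
    consensus, support = votes.most_common(1)[0]
    return {
        "consensus": consensus,
        "agreement": support / len(outputs),
        "trust": {
            node: 1.0 if out == consensus else 0.0
            for node, out in outputs.items()
        },
    }


def append_log(log: list, entry: dict) -> None:
    """Hash-chain each entry to the previous one so later edits are detectable."""
    prev = log[-1]["hash"] if log else "0" * 64
    body = json.dumps(entry, sort_keys=True)
    digest = hashlib.sha256((prev + body).encode()).hexdigest()
    log.append({"entry": entry, "prev": prev, "hash": digest})


# Hypothetical outputs from three independent nodes for the same request.
outputs = {"node-a": "cat", "node-b": "cat", "node-c": "dog"}
result = aggregate(outputs)

audit_log = []
append_log(audit_log, {"request": "img-001", "outputs": outputs})
append_log(audit_log, {"request": "img-001", "result": result})
```

A production system would likely weight votes by each node's past reliability and compare outputs with a similarity measure instead of exact equality, but the shape stays the same: collect, compare, score, and record.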

Pioneering Projects Shaping the Future

The decentralized AI ecosystem is rich with projects tackling verifiable inference and trustless coordination head-on:

- Ratio1 AI Meta-OS: A unified MLOps protocol delivering encrypted federated learning and decentralized authentication across devices.

- VeriLLM: A framework designed for efficient public verification of large language model outputs under minimal trust assumptions.

- Lattica: A communication mesh enabling scalable peer-to-peer state replication for distributed training and inference workloads.

Because these projects are modular, developers can mix and match components, like secure enclaves or cryptographic proofs, to suit their application’s risk profile without sacrificing performance or openness.

The result? Decentralized AI compute networks are laying the groundwork for an era where anyone can contribute resources, verify outcomes independently, and build atop truly open infrastructure, no trusted gatekeepers required.

By weaving together cryptographic verification, hardware security, and economic alignment, decentralized AI compute networks are redefining what it means to trust digital infrastructure. These systems are not just technical novelties; they represent a paradigm shift toward open-state AI systems where transparency and accountability are built in from the ground up.

Real-World Impact: From Research to Deployment

The transition from theoretical frameworks to practical, production-grade deployments is already underway. For example, Ratio1 AI Meta-OS is demonstrating how decentralized authentication and encrypted federated learning can empower organizations to deploy sensitive models across a global fleet of devices, without ceding control to any single authority. Similarly, VeriLLM offers a blueprint for public verification of language model outputs, making it possible for enterprises and auditors to independently confirm that inferences were executed as promised.

Meanwhile, frameworks like Lattica are tackling the thorny challenge of distributed state consistency, a foundational requirement for training large AI models over peer-to-peer networks. By ensuring that every node can synchronize reliably with its peers, Lattica paves the way for scalable inference and collaborative model updates at unprecedented scale.

The Road Ahead: Challenges and Opportunities

Despite these advances, challenges remain. Achieving low-latency inference across geographically dispersed nodes requires ongoing optimization of both network protocols and incentive structures. There is also an urgent need for standardized benchmarks so that users can compare performance and security guarantees across different DePIN infrastructure providers.

The role of incentive mechanisms cannot be overstated. Projects like Prime Intellect have shown that when contributors are rewarded precisely for their work (and only when it passes verification), network reliability soars while fraud is minimized. As more compute providers join the ecosystem, expect competition to drive further innovation in both protocol design and user experience.

Key Benefits of Trustless Coordination in Decentralized AI

- Enhanced Security and Tamper Resistance: Trustless coordination leverages blockchain and cryptographic protocols like Zero-Knowledge Proofs (ZKPs) and Trusted Execution Environments (TEEs) to ensure computations are performed securely and are resistant to tampering, protecting sensitive data and intellectual property.

- Transparent and Auditable Processes: Systems such as Decentralized Inference Machines (DIN) and frameworks like VeriLLM provide transparent logging and public verifiability of AI computations, enabling developers and enterprises to audit inference pathways and outcomes without relying on centralized authorities.

- Fair and Automated Incentive Mechanisms: Trustless coordination enables robust, automated economic incentives through protocols like Proof-of-Compute and platforms such as Prime Intellect, ensuring contributors are rewarded based on genuine, verifiable work, which eliminates manual arbitration and reduces disputes.

- Scalability and Resource Efficiency: By distributing compute tasks across global networks, as exemplified by projects like Ratio1 AI Meta-OS and Lattica, developers and enterprises can access scalable, heterogeneous resources, optimizing costs and performance without dependence on single providers.

- Reduced Reliance on Centralized Entities: Trustless coordination removes the need for intermediaries, fostering greater autonomy and resilience for developers and enterprises, and enabling permissionless participation in AI development and deployment.

Looking forward, the integration of DePIN infrastructure with emerging sectors such as autonomous agents, on-chain governance, and edge AI will unlock new applications, from real-time analytics on IoT devices to permissionless AI-driven marketplaces. The modularity of decentralized networks means that open-source communities can rapidly iterate on components like ZKPs or TEEs without waiting for top-down mandates from cloud incumbents.

Why This Matters Now

The convergence of blockchain AI compute with distributed GPU networks is not a distant vision; it is happening today as organizations seek alternatives to opaque centralized cloud platforms. With protocols like Ratio1 AI Meta-OS and VeriLLM moving from research into production pilots, we’re witnessing the birth of a new digital commons where verifiable inference is not a luxury but a baseline expectation.

If you’re building or investing in next-generation AI applications, now is the time to explore how decentralized AI compute networks can offer you:

- Scalability: Tap into idle GPUs worldwide without vendor lock-in.

- Security: Ensure every computation is auditable via cryptography or hardware enclaves.

- Transparency: Leverage open logs and proof-of-compute schemes for full traceability.

- Ecosystem growth: Join a developer community pushing the boundaries of open-state infrastructure.

The bottom line: Decentralized AI compute networks are not just solving old problems; they’re creating entirely new possibilities for trustless collaboration at global scale. As these systems mature, expect them to become foundational layers for everything from autonomous supply chains to censorship-resistant scientific research, ushering in an era where verifiable inference and trustless coordination are simply how things get done.