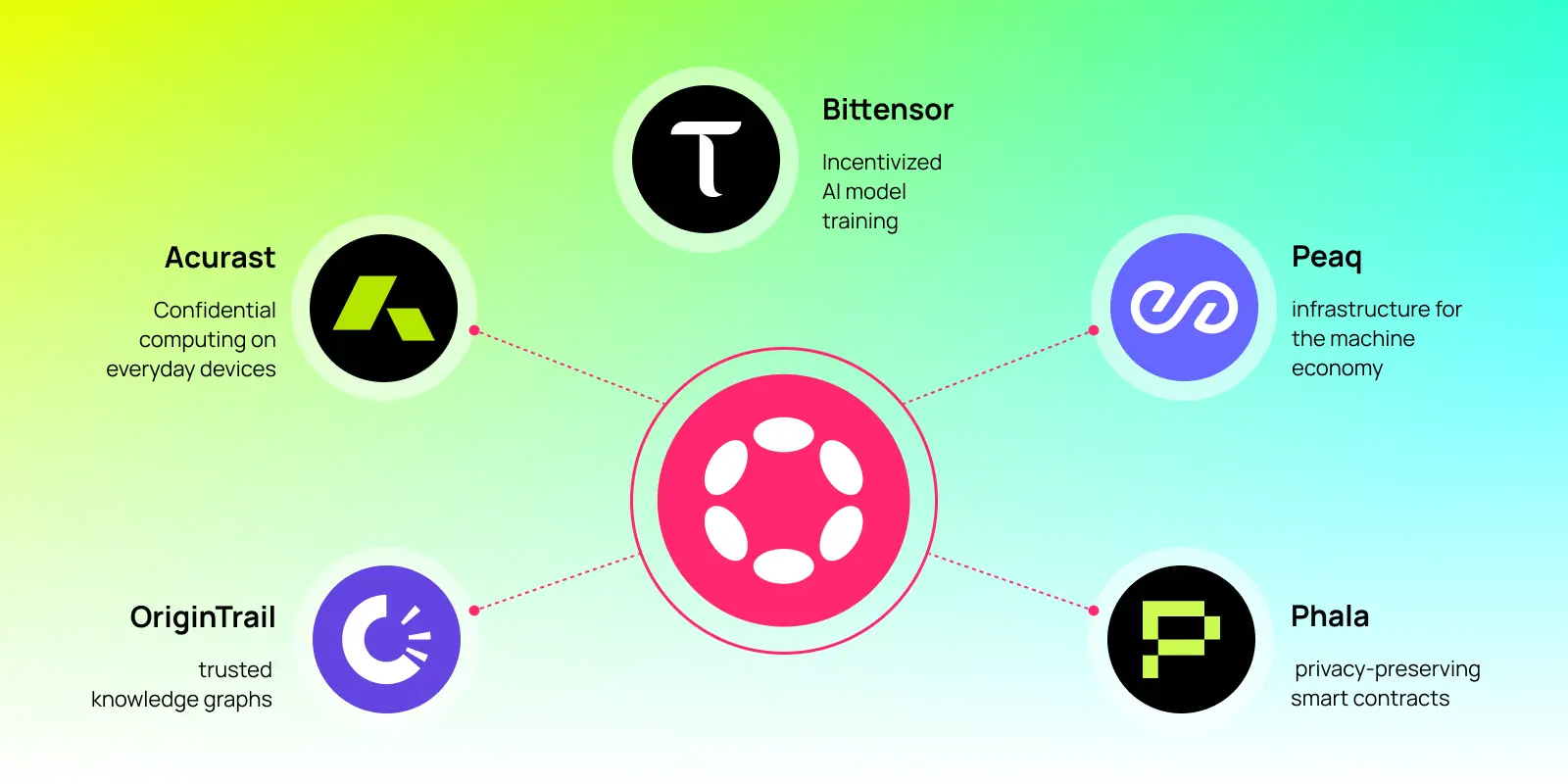

As AI compute networks move from centralized hyperscalers to decentralized, crypto-powered infrastructure, the question of trust becomes existential. In open-state AI systems where anyone can contribute compute, how do we ensure that model outputs are correct, unmanipulated, and reproducible? The answer is verifiable inference: a set of cryptographic and protocol-level techniques that let users independently verify the integrity of AI computations, without needing to trust any single party.

Why Verifiable Inference Matters for Decentralized AI Compute

Traditional cloud-based AI relies on closed environments and reputation. But in decentralized AI compute networks, where workloads are distributed among permissionless nodes, old assumptions break down. Anyone can operate a node, and incentives exist for malicious actors to cut corners or tamper with results. This is not just a theoretical risk: as Theta EdgeCloud’s recent launch demonstrates, verifiable inference is now essential for enterprise adoption and mission-critical use cases.

The stakes are high:

- Algorithmic trading depends on trustworthy models for split-second decisions

- Fraud detection in DeFi and fintech requires tamper-proof outputs

- Healthcare applications cannot tolerate silent errors or data leaks

- Open-source LLMs must prove their outputs are unaltered by adversarial nodes

The core challenge: how do you prove to a user, or even an on-chain smart contract, that an LLM or ML model ran correctly on remote hardware?

The Core Approaches: ZKPs, Fraud Proofs, and Cryptoeconomics

Top Approaches to Verifiable Inference in Decentralized AI

-

Zero-Knowledge Proofs (ZKPs): ZKPs enable one party to prove the correctness of an AI computation to another party without revealing the underlying data or model. Inference Labs utilizes ZKPs in its Proof of Inference protocol, allowing for confidential and verifiable AI outputs. This cryptographic approach preserves privacy while ensuring trust in decentralized environments.

-

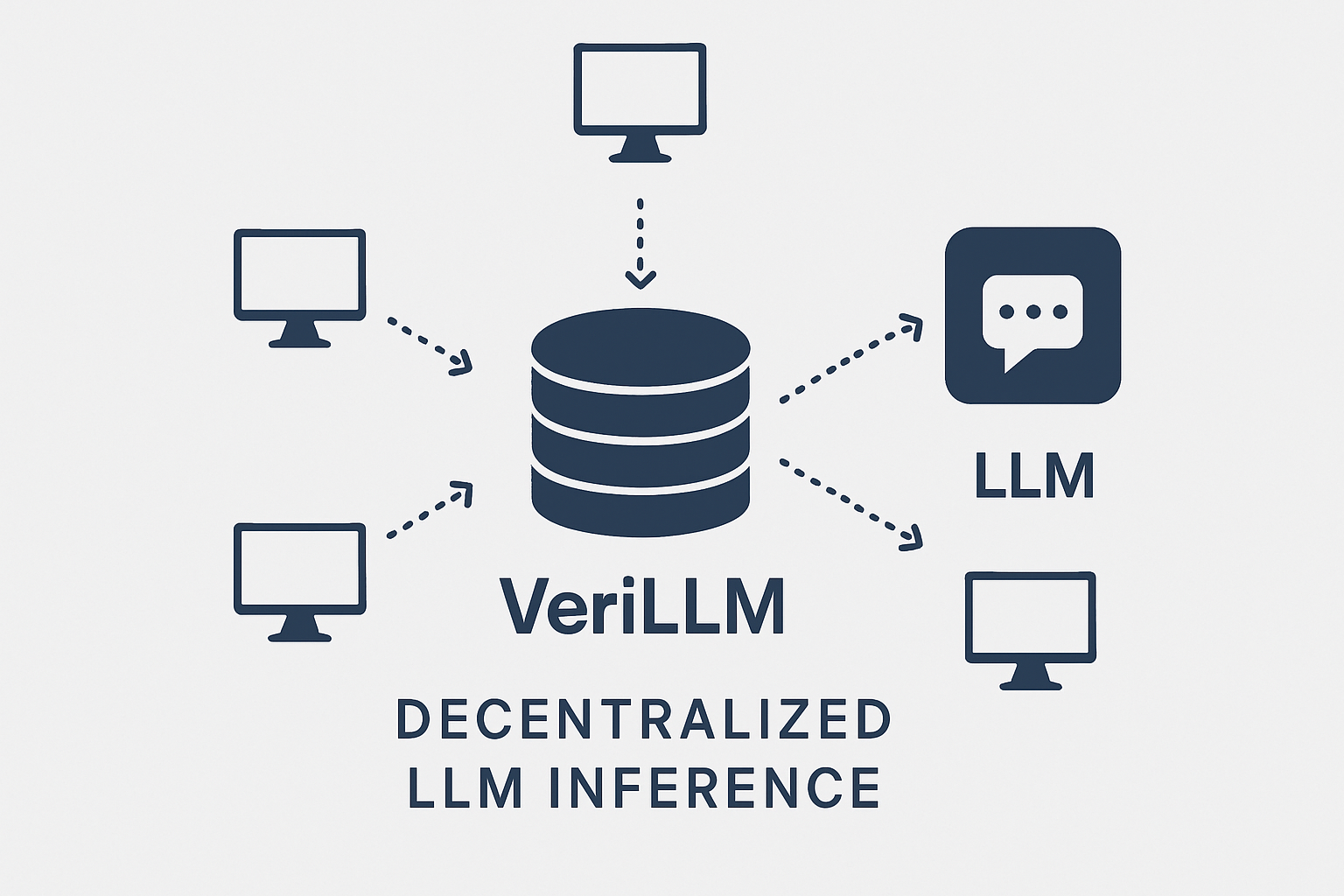

Optimistic Fraud Proofs: This mechanism assumes computations are correct unless challenged. If a node suspects fraud, it can submit a fraud proof, triggering a verification process. VeriLLM implements this with a peer-prediction system to discourage lazy verification, achieving efficient and scalable decentralized inference verification.

-

Cryptoeconomic Incentives: By aligning economic rewards and penalties, cryptoeconomic systems motivate honest behavior among network participants. Nodes are incentivized to perform correct computations and penalized for malicious actions. This approach is foundational in many decentralized compute networks, supporting robust, trustless AI inference.

-

Trusted Execution Environments (TEEs) & Sampling Consensus: Atoma Network combines TEEs, which securely isolate computations, with sampling consensus—where randomly selected nodes verify inference. This hybrid method ensures both privacy and elastic verifiability in decentralized AI workflows.

-

Blockchain-Backed Randomness & Public Verification: Theta EdgeCloud leverages public randomness beacons anchored on blockchain to ensure that LLM inference outputs are reproducible and tamper-proof. This method enhances transparency and auditability in decentralized AI services.

The current landscape features three main strategies:

- Zero-Knowledge Proofs (ZKPs): These cryptographic primitives let a prover convince a verifier that an inference was performed correctly, without revealing sensitive model parameters or input data. For example, Inference Labs’ Proof of Inference protocol applies ZKPs so operators can validate outputs while protecting IP.

- Optimistic Fraud Proofs: Here, computations are assumed valid unless challenged. If a dispute arises (e.g., two nodes return different outputs), a lightweight proof process resolves it. This approach is gaining traction for its scalability.

- Cryptoeconomics: Nodes stake tokens as collateral; if they cheat or return incorrect results, they lose their stake. Peer-prediction mechanisms like those in the VeriLLM framework (VeriLLM paper) discourage lazy verification by making collusion expensive.
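To make the interplay between optimistic acceptance and staking concrete, here is a minimal Python sketch. It is a toy ledger, not the mechanism of VeriLLM or any other named protocol: the class `OptimisticVerifier`, the `slash_fraction` parameter, and the use of a deterministic `reference_model` for dispute resolution are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class Claim:
    node: str    # hypothetical node identifier
    prompt: str
    output: str


class OptimisticVerifier:
    """Toy optimistic-verification ledger (illustrative only)."""

    def __init__(self, reference_model: Callable[[str], str],
                 slash_fraction: float = 0.5) -> None:
        # Assumption: disputes are settled by deterministic re-execution.
        self.reference_model = reference_model
        self.slash_fraction = slash_fraction
        self.stakes: Dict[str, float] = {}

    def deposit(self, node: str, amount: float) -> None:
        self.stakes[node] = self.stakes.get(node, 0.0) + amount

    def submit(self, node: str, prompt: str, output: str) -> Claim:
        # Accepted optimistically: nothing is re-executed at submission time.
        if self.stakes.get(node, 0.0) <= 0:
            raise ValueError("node must post stake before submitting results")
        return Claim(node, prompt, output)

    def challenge(self, claim: Claim, challenger: str) -> bool:
        """Re-run the inference; return True if fraud is proven."""
        expected = self.reference_model(claim.prompt)
        if expected == claim.output:
            return False  # claim stands; no stake moves in this toy version
        penalty = self.stakes[claim.node] * self.slash_fraction
        self.stakes[claim.node] -= penalty
        self.deposit(challenger, penalty)  # slashed stake rewards the challenger
        return True


# Usage: an upper-casing function stands in for a deterministic model.
ledger = OptimisticVerifier(lambda prompt: prompt.upper())
ledger.deposit("honest-node", 100.0)
ledger.deposit("cheating-node", 100.0)
good = ledger.submit("honest-node", "hi", "HI")
bad = ledger.submit("cheating-node", "hi", "nope")
fraud_on_good = ledger.challenge(good, "watcher")
fraud_on_bad = ledger.challenge(bad, "watcher")
```

In this sketch the expensive step (re-execution) only runs when someone challenges, which is where the scalability of the optimistic approach comes from. A production design would also need to penalize frivolous challenges and handle non-deterministic outputs, both of which are left out here.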

The Latest Innovations Powering Trustless AI Infrastructure (2025)

The past year has seen rapid innovation in verifiable execution for decentralized AI compute:

- Tamper Detection at Model Level: TOPLOC uses locality-sensitive hashing to detect unauthorized changes to models or prompts, with a reported 100% detection accuracy across diverse hardware (TOPLOC research). This is critical as models become more portable and composable across networks.

- Mainnet-Ready ZK Protocols: Inference Labs’ Proof of Inference is now live on testnet with mainnet launch slated for late Q3 2025, showing real progress toward production-grade verifiability (Chainwire coverage).

- Punishing Bad Actors Efficiently: VeriLLM’s peer-prediction system reduces verification costs to just ~1% overhead while maintaining security under one-honest-verifier assumptions.

- Sovereign Compute Enclaves: Atoma Network integrates Trusted Execution Environments (TEEs) with sampling consensus so multiple nodes can independently verify each inference, balancing privacy with public auditability.

- Tamper-Proof Outputs via Blockchain Randomness: Theta EdgeCloud leverages blockchain-backed public randomness beacons to make LLM outputs reproducible and tamper-evident in real time.
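The tamper-detection idea in the first bullet can be illustrated with a generic locality-sensitive hash. The sketch below uses sign random projections, a textbook LSH scheme; it is not TOPLOC's actual encoding. The function names, the 64-bit fingerprint size, and the `max_flips` tolerance are assumptions chosen for the example.

```python
import random
from typing import List, Sequence


def make_hyperplanes(dim: int, n_bits: int, seed: int) -> List[List[float]]:
    """Shared random hyperplanes; prover and verifier must use the same seed."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(n_bits)]


def fingerprint(vector: Sequence[float], hyperplanes: List[List[float]]) -> int:
    """One bit per hyperplane: which side of the plane the vector falls on."""
    bits = 0
    for plane in hyperplanes:
        dot = sum(p * x for p, x in zip(plane, vector))
        bits = (bits << 1) | (1 if dot >= 0 else 0)
    return bits


def hamming(a: int, b: int) -> int:
    return bin(a ^ b).count("1")


def matches(claimed: int, recomputed: int, max_flips: int = 4) -> bool:
    """Tolerate a few flipped bits so hardware-level jitter is not flagged."""
    return hamming(claimed, recomputed) <= max_flips


# Usage with synthetic "activations" (stand-ins for real model internals).
planes = make_hyperplanes(dim=32, n_bits=64, seed=7)
rng = random.Random(1)
activations = [rng.gauss(0.0, 1.0) for _ in range(32)]
jittered = [x + rng.uniform(-1e-6, 1e-6) for x in activations]  # same model, other hardware
different = [rng.gauss(0.0, 1.0) for _ in range(32)]            # stands in for a swapped model

claimed = fingerprint(activations, planes)
same_model_ok = matches(claimed, fingerprint(jittered, planes))
swapped_model_ok = matches(claimed, fingerprint(different, planes))
```

The point of the locality-sensitive property is that tiny numerical differences between GPUs leave the fingerprint nearly unchanged, while a different model or prompt produces a fingerprint that disagrees on roughly half the bits.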

The Impact: Building Open-State Trust Without Central Authorities

This new wave of verifiable inference protocols is transforming how users interact with decentralized AI compute networks. Instead of relying on trust in node operators or opaque cloud providers, users can now verify every step, from data ingestion through model execution to output delivery. The result is an open-state system where anyone can audit computation history without privileged access.

Key Benefits of Verifiable Inference for Enterprises

- Enhanced Trust and Integrity: Enterprises can independently verify the correctness of AI computations, reducing reliance on centralized authorities and minimizing risks of tampering or malicious behavior in decentralized networks.

- Confidentiality and Privacy Preservation: Advanced cryptographic techniques, such as zero-knowledge proofs (used by Inference Labs’ Proof of Inference), enable verification of AI outputs without exposing proprietary models or sensitive data.

- Robust Tamper Detection: Solutions like TOPLOC use locality sensitive hashing to detect unauthorized modifications to models, prompts, or precision with 100% accuracy, ensuring model integrity across diverse hardware.

- Cost-Efficient and Scalable Verification: Frameworks such as VeriLLM provide lightweight, publicly verifiable inference with negligible overhead (about 1% of computation cost), making large-scale decentralized AI feasible for enterprises.

- Data Security with Trusted Execution Environments: Atoma Network integrates TEEs and sampling consensus, offering secure computation and elastic verifiability, which protects enterprise data while enabling transparent AI processing.

- Reproducibility and Auditability: Theta EdgeCloud’s verifiable LLM inference service leverages blockchain-backed randomness beacons, ensuring AI outputs are reproducible and tamper-proof, supporting regulatory compliance and audit trails.

- Frictionless Collaboration and Ecosystem Growth: By enabling trustless verification, enterprises can confidently collaborate and share resources within decentralized AI networks, fostering innovation without the need for centralized gatekeepers.

This paradigm shift unlocks new markets, from DeFi risk engines that demand auditable algorithms to healthcare platforms requiring provable privacy compliance, all while preserving decentralization’s core ethos: don’t trust, verify.

As these protocols mature, the cost and complexity of verification are dropping rapidly, making trustless AI infrastructure not just feasible but economically compelling. Consider VeriLLM’s approach: by reducing verification overhead to around 1% of total inference cost, it removes a major barrier for scaling LLM-powered applications in decentralized environments. Meanwhile, Atoma Network’s hybrid of TEEs and sampling consensus shows that privacy and auditability can coexist, even for sensitive enterprise workloads.

What’s most striking is how these mechanisms are being woven into the fabric of on-chain AI services. For example, Theta EdgeCloud’s public randomness beacon means that every LLM output can be checked against a transparent, immutable source, making tampering immediately obvious to any auditor or smart contract downstream. This is the kind of open-state assurance that centralized providers simply can’t offer.
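One way to picture why a public randomness beacon makes sampled LLM output reproducible: if the sampling seed is derived from a public beacon value, anyone can re-run the sampling and compare. The Python sketch below is an assumption-laden illustration, not Theta EdgeCloud's protocol. The functions `derive_seed` and `sample_tokens`, the beacon strings, and the fixed token distribution standing in for a model are all hypothetical.

```python
import hashlib
import random
from typing import Dict, List


def derive_seed(beacon_value: str, model_id: str, prompt: str) -> int:
    """Bind the sampling seed to a public beacon value plus the request."""
    material = "\x1f".join([beacon_value, model_id, prompt]).encode("utf-8")
    return int.from_bytes(hashlib.sha256(material).digest(), "big")


def sample_tokens(token_probs: Dict[str, float], n_tokens: int, seed: int) -> List[str]:
    """Seeded sampling from a fixed distribution (a stand-in for a real model)."""
    rng = random.Random(seed)
    tokens = sorted(token_probs)  # fixed ordering so every verifier agrees
    weights = [token_probs[t] for t in tokens]
    return rng.choices(tokens, weights=weights, k=n_tokens)


# Usage: the node and an independent auditor derive the same seed.
probs = {"yes": 0.5, "no": 0.3, "maybe": 0.2}
seed = derive_seed("beacon-round-1", "toy-model", "Approve the trade?")
node_output = sample_tokens(probs, 8, seed)
auditor_output = sample_tokens(probs, 8, seed)
other_round_seed = derive_seed("beacon-round-2", "toy-model", "Approve the trade?")
```

Because the node cannot choose the beacon value, it cannot quietly re-roll the sampling until it gets an output it prefers; an auditor who recomputes the seed and gets a different result has evidence of tampering. Real deployments also have to pin model weights and numerical settings, which this sketch ignores.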

From Theory to Real-World Adoption

The adoption curve is steepening as verifiable inference moves from research to production:

- DeFi protocols are integrating verifiable AI risk engines to satisfy auditors and regulators.

- Healthcare data platforms use ZKPs and TEEs to assure patients that their data remains confidential during model inference.

- Enterprises are piloting blockchain-backed AI services that produce tamper-evident audit trails for compliance-sensitive processes.

- Developers finally have frameworks like VeriLLM and TOPLOC to build apps where users can independently verify outputs, no matter who runs the backend hardware.

The Road Ahead: Challenges and Opportunities

This shift isn’t without its challenges. Zero-knowledge proofs remain computationally intensive for some advanced models, and optimistic fraud proofs require robust dispute resolution systems. But with a mainnet launch approaching for Inference Labs’ Proof of Inference (Chainwire coverage) and continued breakthroughs in efficient hashing (as seen with TOPLOC), the pace of progress is undeniable.

The real promise lies in composability: as more networks adopt standardized verifiable inference layers, open-state AI systems will be able to interoperate seamlessly. Imagine a future where a DeFi protocol on one chain calls an LLM hosted by a separate decentralized network, verifies its output on-chain using cryptographic proofs, then settles trades, all without ever trusting a single party or exposing sensitive data.

Key Design Tradeoffs in Verifiable Inference for Decentralized AI

- Zero-Knowledge Proofs vs. Performance Overhead: Zero-knowledge proofs (ZKPs), as used in Inference Labs’ Proof of Inference, ensure strong privacy and integrity but can introduce significant computational overhead, impacting inference speed and scalability.

- Lightweight Verification vs. Security Assumptions: Protocols like VeriLLM achieve low verification costs (about 1% of inference cost), but rely on the one-honest-verifier assumption, which may not be suitable for all threat models.

- Confidentiality vs. Auditability: Trusted Execution Environments (TEEs), as integrated by Atoma Network, provide strong data privacy but may limit transparency and auditability compared to fully on-chain or cryptographic approaches.

- Detection Accuracy vs. Generalizability: TOPLOC uses locality sensitive hashing to detect unauthorized model or prompt changes with 100% accuracy, but its effectiveness across diverse model architectures and adversarial techniques must be continually validated.

- Randomness and Reproducibility vs. User Control: Theta EdgeCloud leverages blockchain-backed randomness beacons for reproducibility and tamper resistance, but this can reduce user control over certain inference parameters or customizations.

- Decentralization vs. Verification Latency: Sampling consensus mechanisms, such as those in Atoma Network, increase decentralization and resilience but may introduce higher latency as multiple nodes must independently verify inferences.
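The tension between the one-honest-verifier assumption and verification latency can be put in rough numbers. The Python sketch below assumes verifiers are drawn independently from a large network in which a known fraction is honest; that sampling model and both function names are simplifying assumptions for illustration, not parameters published by any of the projects above.

```python
def prob_at_least_one_honest(honest_fraction: float, committee_size: int) -> float:
    """Chance that a randomly sampled committee contains an honest verifier.

    Assumes independent draws from a large network (an approximation).
    """
    if not 0.0 <= honest_fraction <= 1.0:
        raise ValueError("honest_fraction must be between 0 and 1")
    if committee_size < 0:
        raise ValueError("committee_size must be non-negative")
    return 1.0 - (1.0 - honest_fraction) ** committee_size


def min_committee_size(honest_fraction: float, target: float) -> int:
    """Smallest committee whose honest-member probability reaches the target."""
    if not 0.0 < honest_fraction <= 1.0:
        raise ValueError("honest_fraction must be in (0, 1]")
    if not 0.0 < target < 1.0:
        raise ValueError("target must be strictly between 0 and 1")
    size = 1
    while prob_at_least_one_honest(honest_fraction, size) < target:
        size += 1
    return size


# Usage: with half the network honest, how many verifiers per inference?
three_verifiers = prob_at_least_one_honest(0.5, 3)
needed_for_99 = min_committee_size(0.5, 0.99)
```

Each additional verifier raises the chance that at least one honest party checks the result, but it also means one more independent re-execution to wait for, which is the latency cost the last bullet describes.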

The bottom line? Verifiable inference is not just a technical milestone; it is the foundation for trustless collaboration at global scale. By anchoring AI computation in cryptographic truth rather than reputation or closed infrastructure, decentralized AI compute networks are poised to unlock entirely new markets and applications where trust cannot be assumed but must be proven at every step.