Verifiable inference is rapidly redefining the landscape of decentralized AI compute. As AI workloads migrate from centralized silos to distributed networks, ensuring the integrity and trustworthiness of inferences becomes a mission-critical challenge. The days of “trusting” black-box outputs are fading. Instead, the mantra is: Don’t trust, verify. This paradigm shift is driving a wave of technical innovation at the intersection of AI, cryptography, and blockchain.

Why Verifiable Inference Matters for DePIN and Decentralized GPU Networks

In decentralized environments, anyone can contribute compute power, but this permissionless openness creates new attack vectors. Malicious or faulty nodes might return incorrect results, leak sensitive data, or manipulate models. Without robust verification mechanisms, the promise of secure, open AI infrastructure collapses.

Verifiable inference addresses these risks by enabling cryptographic proof that an AI model was run correctly on unaltered inputs with untampered weights. The result? Users gain confidence that outputs are genuine – even when computation is offloaded to anonymous nodes across a global decentralized GPU network.

The Core Approaches Powering Trustless Verification

The current generation of verifiable inference leverages three main technical pillars:

- Zero-Knowledge Proofs (ZKPs): Allow validators to confirm correctness without seeing sensitive data or model internals.

- Optimistic/Fraud Proofs: Assume honest computation by default but enable rapid challenge and correction if fraud is detected.

- Cryptoeconomic Incentives: Use staking and slashing to align node behavior with network integrity.

This trinity forms the backbone for projects like Inference Labs’ Proof of Inference protocol – which uses ZKPs for privacy-preserving output validation – and Hyperbolic’s Proof of Sampling (PoSP), which randomly audits computations without heavy overhead. These advances are not just academic; they’re being deployed in live networks today.
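To make the link between random audits and slashing concrete, here is a minimal sketch of the underlying incentive arithmetic. It is a toy model, not Hyperbolic's actual PoSP parameters: the names `gain` (what a node saves by faking a result) and `stake` (what it loses when caught) are assumptions for illustration, and the model assumes an audit always detects a fake result.

```python
def expected_cheating_profit(p_audit: float, gain: float, stake: float) -> float:
    """Expected profit of returning one fake result.

    With probability (1 - p_audit) the node is not audited and keeps `gain`;
    with probability p_audit it is audited, caught, and loses `stake`.
    """
    return (1.0 - p_audit) * gain - p_audit * stake


def min_audit_probability(gain: float, stake: float) -> float:
    """Break-even audit rate: above it, cheating has negative expected profit.

    Solving (1 - p) * gain - p * stake = 0 for p gives gain / (gain + stake).
    """
    if gain < 0 or stake <= 0:
        raise ValueError("gain must be >= 0 and stake must be > 0")
    return gain / (gain + stake)


if __name__ == "__main__":
    gain, stake = 1.0, 99.0  # hypothetical values in arbitrary units
    p_star = min_audit_probability(gain, stake)
    print(f"break-even audit rate: {p_star:.2%}")
    for p in (0.001, 0.05):
        print(f"p={p}: expected profit {expected_cheating_profit(p, gain, stake):+.3f}")
```

The takeaway is that a larger stake lets the network audit less often while still making fraud unprofitable, which is why sampling and slashing are usually designed together.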

Leading Protocols & Frameworks for Verifiable Inference

-

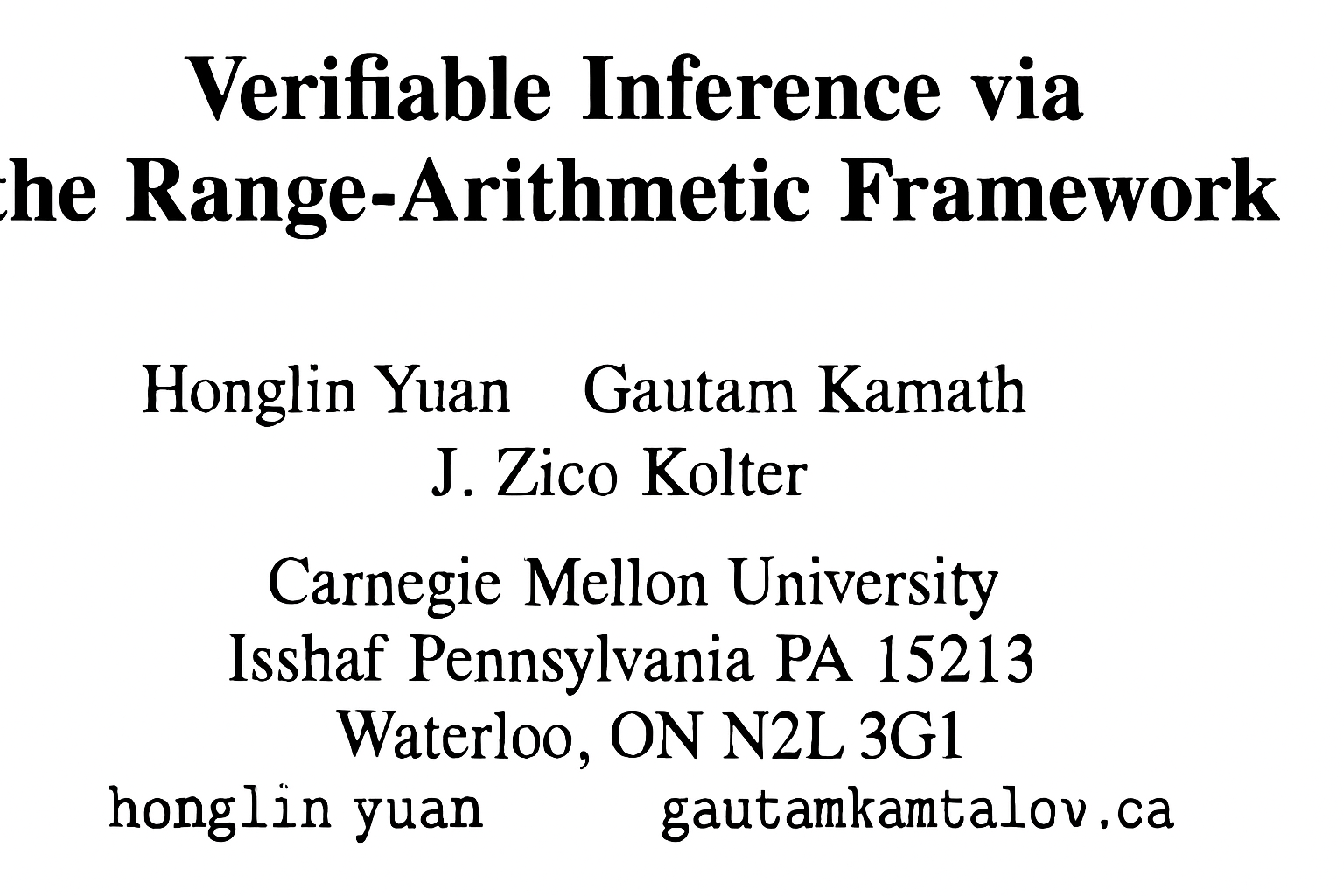

Range-Arithmetic Framework by Rahimi et al.: Efficiently transforms deep neural network inference into arithmetic operations, enabling scalable verification with sum-check protocols and range proofs, reducing costs in decentralized AI networks.

-

VeriLLM Protocol by Wang et al.: A lightweight, publicly verifiable protocol for decentralized large language model (LLM) inference, offering low verification overhead and robust peer-prediction mechanisms to prevent dishonest validation.

-

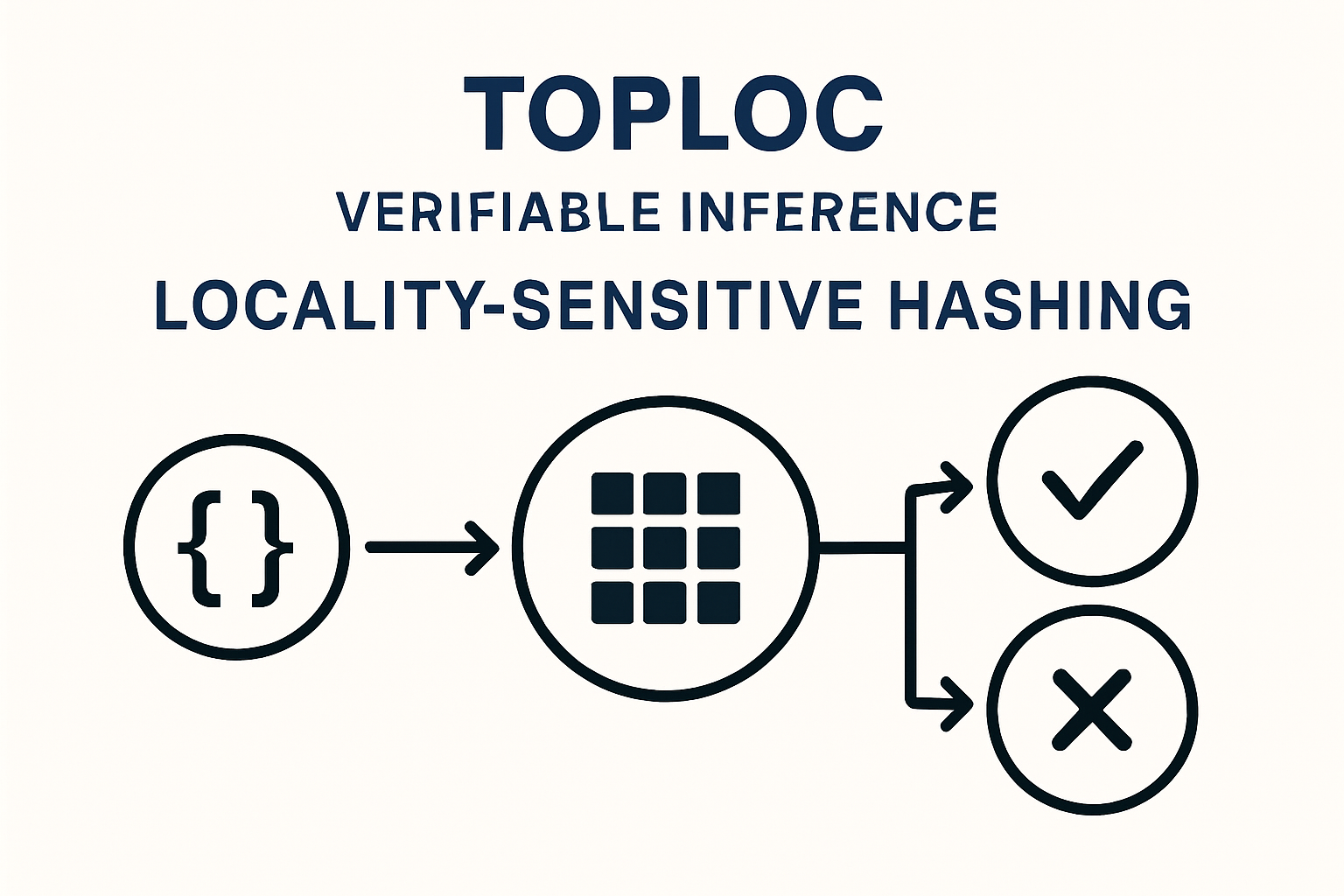

TOPLOC Scheme by Ong et al.: Utilizes locality-sensitive hashing for trustless, verifiable inference, ensuring 100% accuracy in detecting unauthorized model or data modifications across diverse hardware.

-

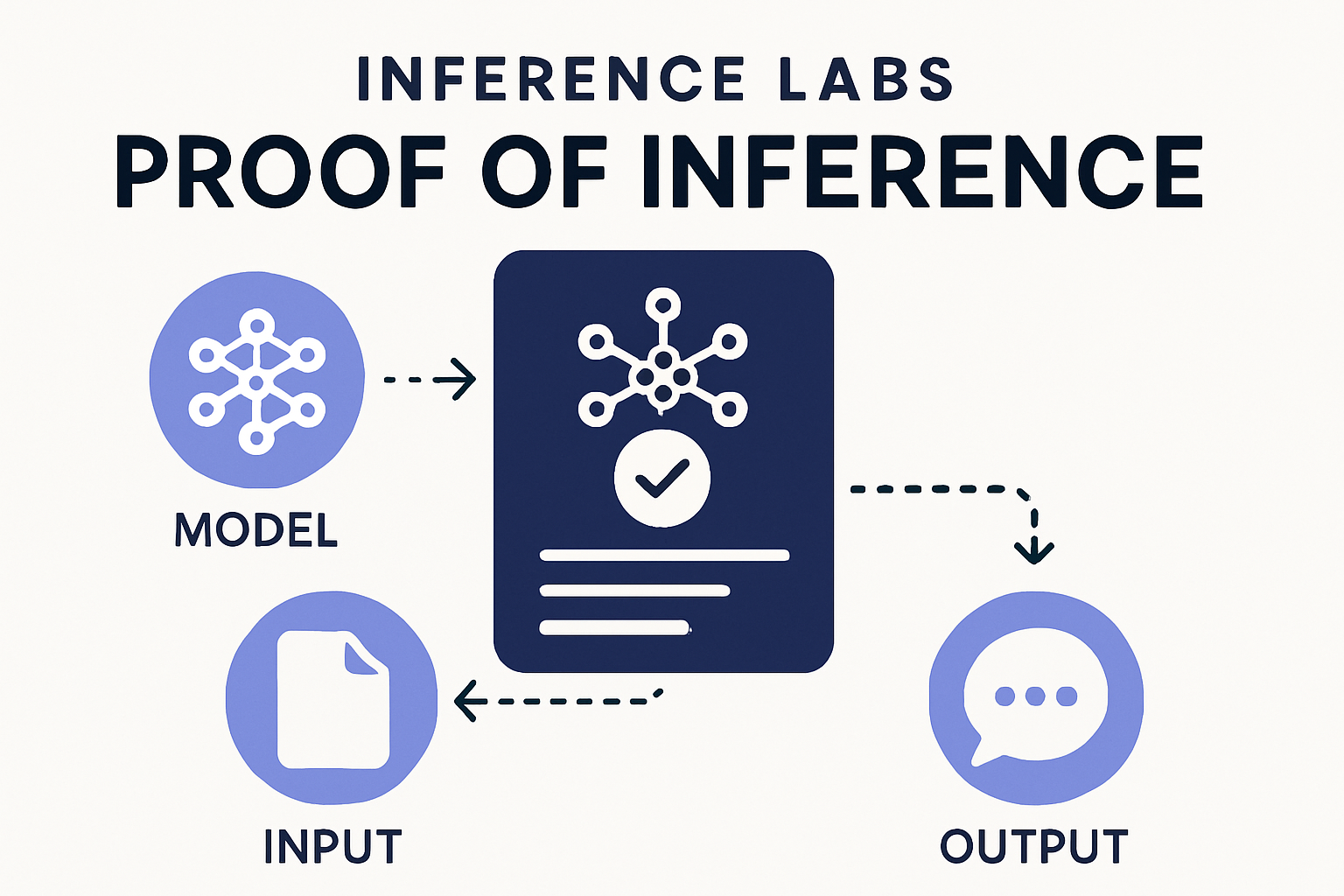

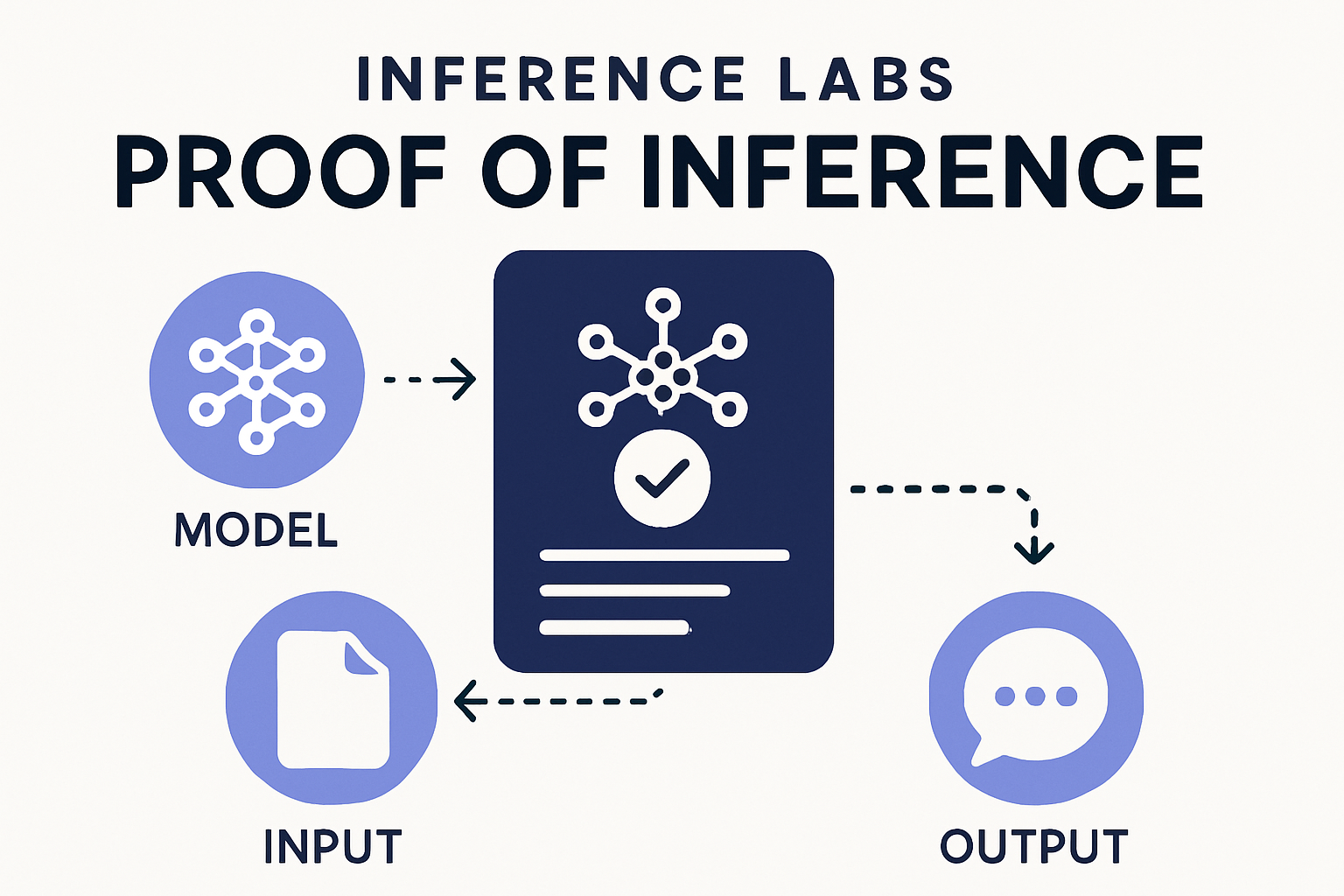

Inference Labs’ Proof of Inference: Implements zero-knowledge proofs to validate AI agent outputs, providing a cryptographic trust layer for decentralized compute and enabling privacy-preserving, scalable AI verification.

-

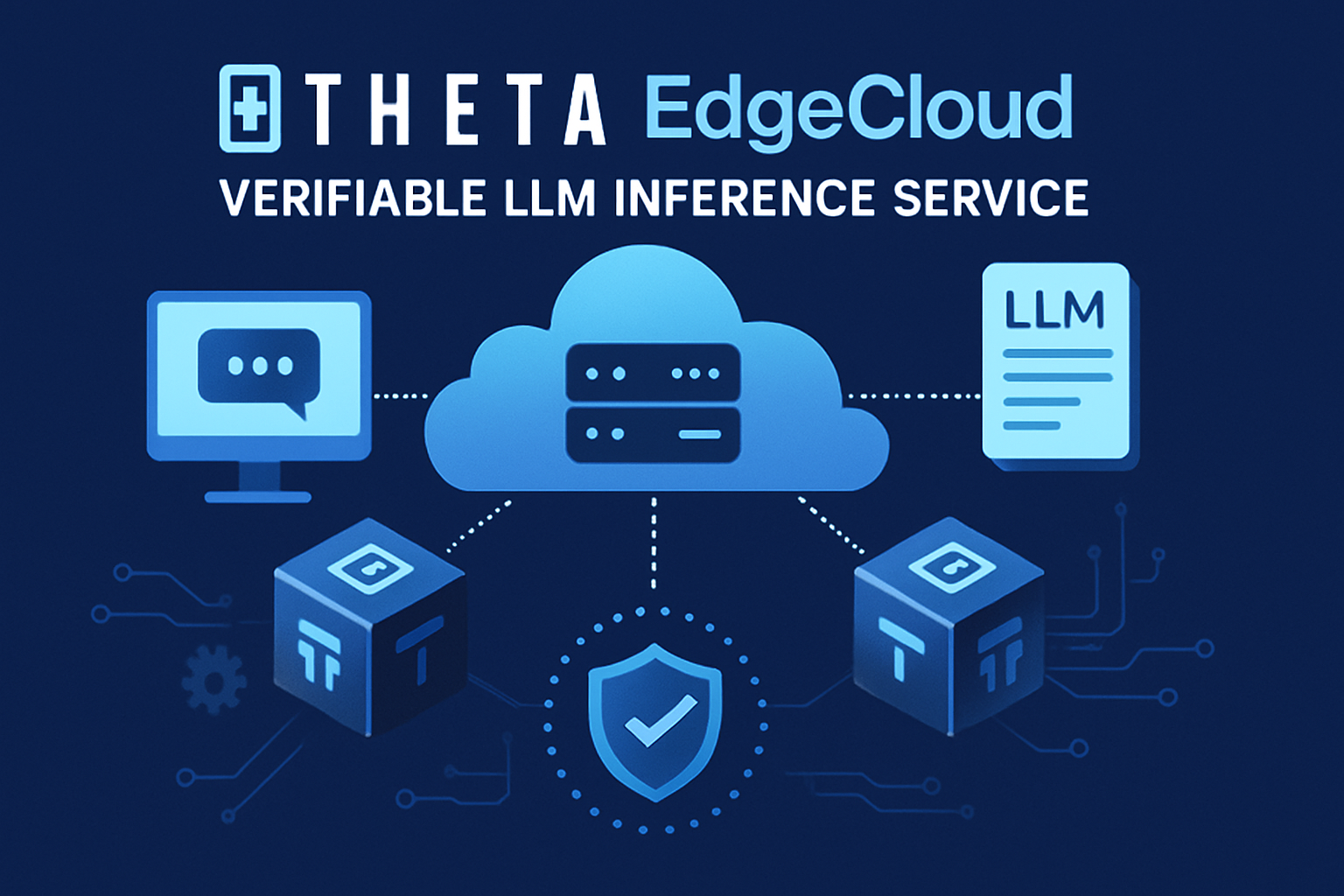

Theta EdgeCloud’s Verifiable LLM Inference Service: Combines AI with blockchain-backed randomness beacons to deliver reproducible, tamper-proof LLM outputs for high-integrity applications.

-

Trusted Compute Units (TCUs) by Castillo et al.: A composable framework for interoperable, verifiable computations across heterogeneous decentralized networks, supporting proof generation and data confidentiality.

-

Proof-of-AI (PoAI) by GSwarm: Integrates verifiable AI inference into blockchain consensus, where miners perform inference tasks to secure the network and advance real-world AI computation.

-

Hyperbolic’s Proof of Sampling (PoSP): A sampling-based verification mechanism ensuring decentralized AI computation integrity with minimal overhead, enabling rapid and secure processing across distributed nodes.

-

Zero-Knowledge Proofs (ZKPs) by IBM Research: Provides a framework for verifiable decentralized AI pipelines, ensuring data and model confidentiality alongside rigorous provenance and output verification.

Pioneering Protocols: Range-Arithmetic, VeriLLM and TOPLOC

The pace of research in this field is staggering. Consider three recent breakthroughs:

- Range-Arithmetic Framework: Converts deep neural network operations into arithmetic steps suitable for fast sum-check protocols and range proofs. This slashes verification costs while preserving accuracy across distributed settings.

- VeriLLM Protocol: A lightweight system engineered for decentralized large language model (LLM) inference. It achieves public verifiability under a one-honest-verifier assumption with minimal overhead (about 1% of total inference cost). Peer-prediction mechanisms keep validators honest without centralization risk.

- TOPLOC Scheme: Deploys locality-sensitive hashing to detect unauthorized changes in models or prompts, with 100% detection accuracy reported in the authors' evaluations, even across heterogeneous hardware, while keeping memory overhead low.
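To give a feel for what "converting neural network operations into arithmetic steps" means in the first item above, here is a rough sketch of fixed-point inference with explicit range checks. It is not the construction from the Range-Arithmetic paper: the constants `SCALE_BITS` and `RANGE_BITS` and all function names are assumptions chosen for illustration. The point is only that once every value is an integer, rounding reduces to an exact integer relation plus a bound on the remainder, which is the kind of statement sum-check protocols and range proofs can attest to.

```python
SCALE_BITS = 8    # assumed number of fractional bits in the fixed-point encoding
RANGE_BITS = 32   # assumed signed bit width every intermediate value must fit in


def quantize(x: float) -> int:
    """Encode a real number as a fixed-point integer."""
    return round(x * (1 << SCALE_BITS))


def in_range(v: int, bits: int = RANGE_BITS) -> bool:
    """Signed range check: -2^(bits-1) <= v < 2^(bits-1)."""
    half = 1 << (bits - 1)
    return -half <= v < half


def fixed_point_dot(weights: list[int], inputs: list[int]) -> tuple[int, int]:
    """Integer dot product followed by rescaling.

    Returns (q, r) such that acc == q * 2^SCALE_BITS + r
    with 0 <= r < 2^SCALE_BITS. A verifier can check this relation
    with integer arithmetic alone; the bound on r is a range statement.
    """
    acc = 0
    for w, x in zip(weights, inputs):
        acc += w * x
        if not in_range(acc):
            raise OverflowError("accumulator left the allowed range")
    q, r = divmod(acc, 1 << SCALE_BITS)
    return q, r


def relu(v: int) -> int:
    """ReLU on fixed-point values: a sign (range) test, then a select."""
    return v if v >= 0 else 0


if __name__ == "__main__":
    w = [quantize(0.5), quantize(-1.25)]
    x = [quantize(2.0), quantize(1.0)]
    q, r = fixed_point_dot(w, x)
    print("pre-activation:", q / (1 << SCALE_BITS), "remainder:", r)
    print("after ReLU:", relu(q) / (1 << SCALE_BITS))
```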

Together, these frameworks provide a robust toolkit for building scalable, trustless DePIN infrastructure where every step of the AI pipeline can be independently verified on-chain or off-chain as needed.

Industry adoption is accelerating as both startups and established networks race to integrate verifiable inference into their AI infrastructure security stacks. Inference Labs recently secured $6.3 million to scale their Proof of Inference protocol, which leverages zero-knowledge cryptography to generate tamper-evident on-chain receipts for every AI output. This approach not only deters manipulation but also unlocks new use cases for decentralized AI agents, from DeFi automation to privacy-sensitive healthcare applications.
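The "tamper-evident receipt" idea can be illustrated with plain hashing. The sketch below is an assumption-laden toy, not Inference Labs' protocol: the field names and the `make_receipt` / `verify_receipt` helpers are hypothetical. It also only shows tamper evidence; proving that the output was actually computed by the committed model is the part a zero-knowledge proof would add on top.

```python
import hashlib
import json


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def make_receipt(weights: bytes, prompt: str, output: str) -> dict:
    """Bind a model, an input, and an output into one digest."""
    body = {
        "model_hash": sha256_hex(weights),
        "input_hash": sha256_hex(prompt.encode("utf-8")),
        "output_hash": sha256_hex(output.encode("utf-8")),
    }
    # Canonical JSON (sorted keys, fixed separators) so the same body
    # always serializes to the same bytes before hashing.
    canonical = json.dumps(body, sort_keys=True, separators=(",", ":"))
    body["receipt_id"] = sha256_hex(canonical.encode("utf-8"))
    return body


def verify_receipt(receipt: dict, weights: bytes, prompt: str, output: str) -> bool:
    """Recompute the receipt from the claimed data and compare."""
    return make_receipt(weights, prompt, output) == receipt


if __name__ == "__main__":
    weights = b"\x00\x01\x02"  # stand-in for serialized model weights
    receipt = make_receipt(weights, "2 + 2 = ?", "4")
    print(verify_receipt(receipt, weights, "2 + 2 = ?", "4"))
    print(verify_receipt(receipt, weights, "2 + 2 = ?", "5"))
```

In a real deployment only the short `receipt_id` would need to be posted on-chain, since any later change to the weights, prompt, or output changes the digest.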

Meanwhile, Theta EdgeCloud is pushing the envelope with a verifiable LLM inference service that fuses AI with blockchain randomness beacons. This guarantees output reproducibility and auditability, a critical requirement for regulated industries and high-stakes enterprise deployments. By anchoring inference results to public randomness, Theta ensures that even powerful adversaries cannot game the system or retroactively alter outputs.
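One way to picture how a public randomness beacon makes sampled outputs reproducible: derive the sampling seed from the beacon value, so anyone who knows the beacon value can replay the exact same token draws. The sketch below is a simplified assumption, not Theta's implementation; `derive_seed`, `sample_tokens`, and the toy probability table are hypothetical names and data.

```python
import hashlib
import random


def derive_seed(beacon_value: bytes, request_id: str) -> int:
    """Derive a per-request seed from public randomness."""
    digest = hashlib.sha256(beacon_value + request_id.encode("utf-8")).digest()
    return int.from_bytes(digest, "big")


def sample_tokens(probs: dict[str, float], n: int, seed: int) -> list[str]:
    """Draw n tokens from a fixed distribution using a seeded generator.

    Sorting the vocabulary fixes the iteration order, so the result
    depends only on (probs, n, seed).
    """
    rng = random.Random(seed)
    tokens = sorted(probs)
    weights = [probs[t] for t in tokens]
    return rng.choices(tokens, weights=weights, k=n)


if __name__ == "__main__":
    beacon = bytes.fromhex("ab" * 32)  # stand-in for a published beacon value
    seed = derive_seed(beacon, "request-42")
    probs = {"yes": 0.6, "no": 0.3, "maybe": 0.1}
    first = sample_tokens(probs, 10, seed)
    replay = sample_tokens(probs, 10, seed)
    print(first == replay)
```

Because the node cannot choose the beacon value, it also cannot search for a seed that yields a more convenient output, which is the property the paragraph above describes.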

Composable Frameworks and Blockchain-Native Verification

Beyond individual protocols, the ecosystem is moving toward composable, interoperable verification frameworks that allow decentralized applications to mix and match trusted compute modules. Trusted Compute Units (TCUs), as described by Castillo et al., offer a plug-and-play approach for offloading complex AI computations while receiving cryptographic proof of correctness. This modularity is essential for DePIN projects that must support a diverse array of models, workloads, and privacy requirements.

On the consensus layer, mechanisms like Proof-of-AI (PoAI) are embedding AI inference directly into blockchain security. Here, miners compete to solve verifiable AI tasks, producing blocks only when computation passes cryptographic muster. This not only secures the chain but also advances the state of real-world AI by aligning economic incentives with useful computation.

Top 5 Verification Mechanisms for Decentralized AI

- Range-Arithmetic Framework by Rahimi et al. transforms deep neural network operations into arithmetic steps, enabling efficient verification with sum-check protocols and range proofs. This reduces computational and communication overhead in decentralized AI inference.

- VeriLLM Protocol is a publicly verifiable protocol for decentralized large language model (LLM) inference. Developed by Wang et al., it achieves low verification costs and leverages a peer-prediction mechanism to deter lazy verification, ensuring robust and scalable LLM inference.

- TOPLOC Scheme utilizes locality-sensitive hashing for trustless verifiable inference. Created by Ong et al., it detects unauthorized modifications to models or prompts with 100% accuracy, maintaining robustness across diverse hardware and minimizing memory overhead.

- Inference Labs’ Proof of Inference is a cryptographic trust layer for AI agents and off-chain computation. It employs zero-knowledge proofs to validate AI outputs, enabling scalable and privacy-preserving decentralized inference.

- Theta EdgeCloud’s Verifiable LLM Inference Service combines AI technology with blockchain-backed randomness beacons. This service ensures AI outputs are reproducible and tamper-proof, making it ideal for sectors requiring high computational integrity.
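For readers unfamiliar with locality-sensitive hashing, the property that makes it attractive for schemes like TOPLOC is that similar vectors get similar fingerprints. The sketch below uses a generic random-hyperplane hash (often called SimHash) to illustrate that property. It is not TOPLOC's actual encoding, and `make_hyperplanes`, `simhash`, and `hamming` are hypothetical helper names.

```python
import random


def make_hyperplanes(dim: int, n_bits: int, seed: int = 0) -> list[list[float]]:
    """Random Gaussian hyperplanes, shared by prover and verifier via the seed."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(n_bits)]


def simhash(vec: list[float], planes: list[list[float]]) -> tuple[int, ...]:
    """One bit per hyperplane: which side of the plane the vector falls on."""
    return tuple(
        1 if sum(p * v for p, v in zip(plane, vec)) >= 0 else 0
        for plane in planes
    )


def hamming(a: tuple[int, ...], b: tuple[int, ...]) -> int:
    """Number of differing bits between two fingerprints."""
    return sum(x != y for x, y in zip(a, b))


if __name__ == "__main__":
    planes = make_hyperplanes(dim=4, n_bits=16)
    activations = [0.3, -1.2, 0.7, 2.0]              # stand-in for hidden activations
    noisy = [v + 1e-4 for v in activations]          # e.g. small hardware rounding drift
    tampered = [-v for v in activations]             # a very different vector
    base = simhash(activations, planes)
    print("noisy distance:   ", hamming(base, simhash(noisy, planes)))
    print("tampered distance:", hamming(base, simhash(tampered, planes)))
```

A verifier that recomputes the activations on different hardware would accept fingerprints within a small Hamming distance and reject ones that are far apart; choosing that threshold is the design decision a real scheme has to justify empirically.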

Schemes such as Hyperbolic’s sampling-based PoSP and IBM Research’s zero-knowledge pipeline framework aim to reduce verification overhead further. The goal is rapid, scalable validation, in the zero-knowledge case without exposing sensitive data or model logic, which is vital as decentralized GPU networks expand to support ever-larger AI workloads.

The Road Ahead: What Verifiable Inference Unlocks for DePIN

The impact of trustless verification in decentralized AI compute extends well beyond technical guarantees. It fundamentally changes the economics and governance of open AI:

- Democratized Access: Anyone can contribute compute or develop new AI services without being part of a trusted cartel.

- On-Chain Auditability: Every inference can be traced, verified, and attributed, creating a transparent provenance trail for regulatory or scientific needs.

- Composability: Secure AI modules can be permissionlessly combined into larger pipelines, accelerating innovation across sectors.

- Incentive Alignment: Crypto-powered rewards and penalties keep node operators honest, even in adversarial environments.

The next phase will see even tighter integration between blockchain protocols for AI model verification and decentralized GPU networks. Expect to see more projects leveraging range-arithmetic, lightweight LLM verification, and locality-sensitive hashing as standard features. As these primitives mature, the DePIN stack will evolve from experimental to production-grade infrastructure powering everything from autonomous agents to on-chain scientific research.

The message is clear: verifiable inference is not a theoretical exercise. It is the foundation for scalable, secure, and censorship-resistant AI infrastructure. As trust shifts from opaque intermediaries to transparent cryptographic proofs, the decentralized future of AI moves from possibility to inevitability.