In the rapidly evolving landscape of AI and blockchain, Gensyn has emerged as a standout protocol tackling one of the thorniest challenges in decentralized machine learning: how to reliably verify that distributed, untrusted nodes have correctly performed complex model training. With the explosion of interest in DePIN (Decentralized Physical Infrastructure Networks) and the growing demand for scalable, low-cost AI compute, Gensyn’s approach is not just timely; it is crucial for democratizing access to advanced AI capabilities.

The Verification Problem in Decentralized AI Training

At the heart of any decentralized AI compute network lies a fundamental dilemma: how do you trust that a remote node has honestly trained your model, especially when financial incentives might tempt bad actors to cut corners? Traditional distributed computing platforms rely on reputation systems or expensive redundancy. But for large-scale machine learning – where training can take days or weeks and cost thousands of dollars – these methods are inefficient or infeasible.

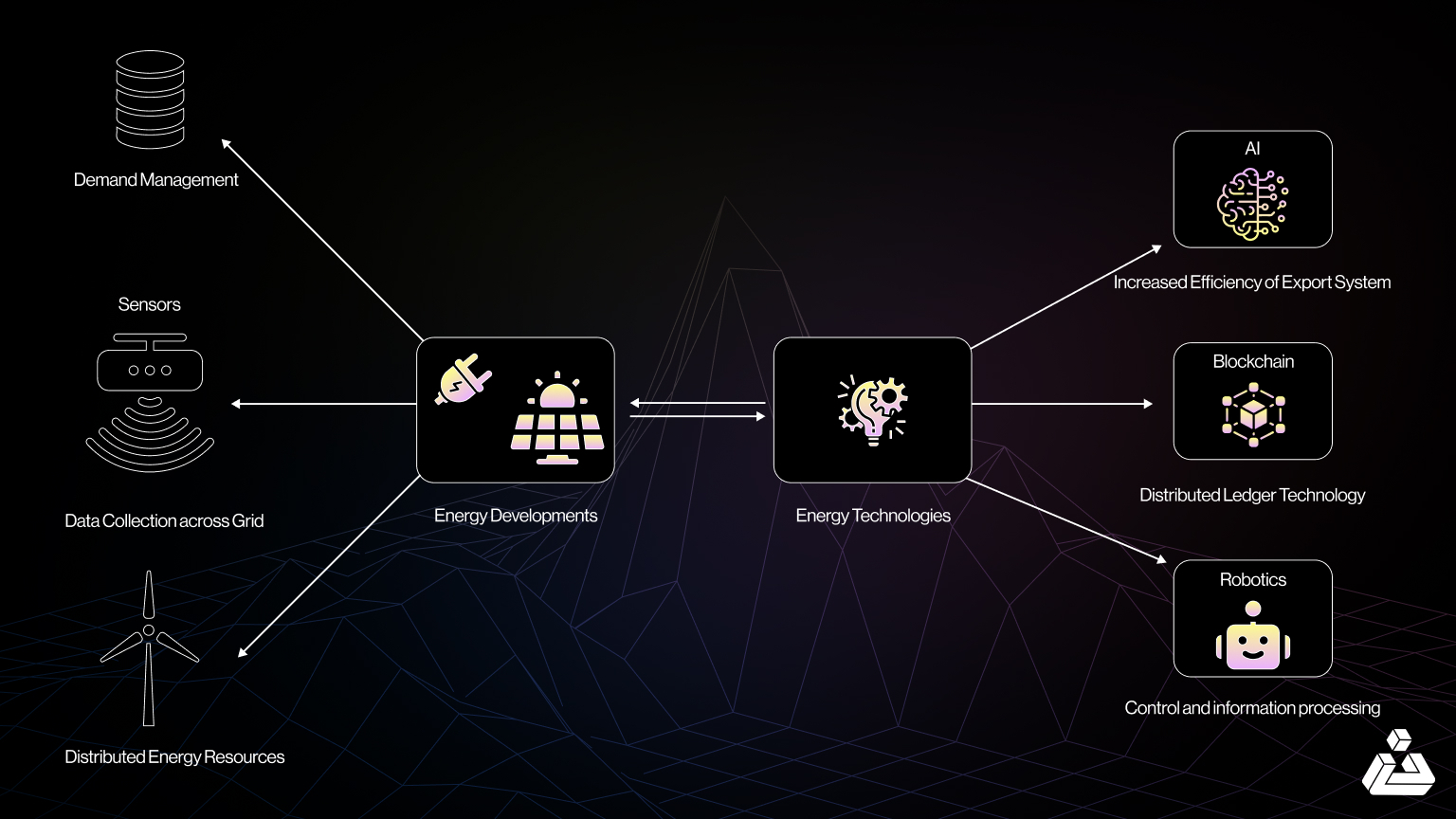

This is where Gensyn’s innovation shines. By combining off-chain model training with on-chain verification, Gensyn creates an open, high-efficiency global marketplace for AI workloads. The protocol doesn’t just distribute GPU tasks across idle hardware; it ensures those tasks are executed faithfully through cryptographic proofs and economic incentives.

Verde Protocol: Pinpointing Discrepancies Efficiently

The cornerstone of Gensyn’s solution is the Verde verification protocol. Unlike brute-force approaches that require retraining entire models to check for correctness, Verde introduces a lightweight dispute resolution mechanism tailored for deep learning. Here’s how it works:

- Stepwise Verification: When a verifier suspects an error in a solver’s submitted proof, Verde drills down to the exact step and operator where results diverge.

- Targeted Recalculation: Only the disputed computation is recomputed on-chain. This sharply reduces overhead while maintaining high assurance.

- Economic Alignment: Verifiers and solvers stake tokens; incorrect results are penalized via slashing, incentivizing honest behavior throughout the network.
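Verde's "drill down" can be pictured as a binary search over the training trajectory. The sketch below is a toy under stated assumptions, not Gensyn's implementation:

- Each party commits to a hash of the model state after every training step.
- Both parties agree on the starting state.
- The training step is bitwise deterministic, which is the property RepOps is meant to provide.

`state_hash`, `first_divergent_step`, `referee` and the toy `sgd_step` are hypothetical names. The sketch stops at step granularity. As described above, Verde goes further and narrows the dispute to a single operator inside that step.

```python
import hashlib
from typing import Callable, List

State = List[float]
Step = Callable[[State], State]


def state_hash(state: State) -> str:
    """Commit to a model state with a SHA-256 digest of its repr."""
    return hashlib.sha256(repr(state).encode()).hexdigest()


def run(init: State, step: Step, n: int) -> List[State]:
    """Return the trajectory [s0, s1, ..., sn] produced by n training steps."""
    states = [init]
    for _ in range(n):
        states.append(step(states[-1]))
    return states


def first_divergent_step(a: List[str], b: List[str]) -> int:
    """Find the smallest i with a[i] != b[i] in O(log n) hash comparisons.

    Assumes both parties agree on the start (a[0] == b[0]), disagree on the
    final result (a[-1] != b[-1]), and that diverged trajectories stay diverged.
    """
    lo, hi = 0, len(a) - 1  # invariant: a[lo] == b[lo] and a[hi] != b[hi]
    while hi - lo > 1:
        mid = (lo + hi) // 2
        if a[mid] == b[mid]:
            lo = mid
        else:
            hi = mid
    return hi


def referee(prev_state: State, agreed_hash: str, step: Step,
            claim_a: str, claim_b: str) -> str:
    """Re-execute only the single disputed step and report which claim matches."""
    if state_hash(prev_state) != agreed_hash:
        raise ValueError("revealed state does not match the agreed commitment")
    truth = state_hash(step(prev_state))
    if truth == claim_a:
        return "A"
    if truth == claim_b:
        return "B"
    return "neither"


def sgd_step(w: State) -> State:
    """Toy training step: gradient descent on f(w) = sum(w_i**2) with lr = 0.1."""
    return [x - 0.1 * 2 * x for x in w]


# Party A trains honestly for 16 steps; party B silently stops updating after step 10.
honest = run([1.0, -2.0], sgd_step, 16)
lazy = honest[:11] + [honest[10]] * 6
a_hashes = [state_hash(s) for s in honest]
b_hashes = [state_hash(s) for s in lazy]

k = first_divergent_step(a_hashes, b_hashes)
verdict = referee(lazy[k - 1], b_hashes[k - 1], sgd_step, a_hashes[k], b_hashes[k])
print(k, verdict)  # 11 A
```

The referee compares only four hashes and re-executes one step, rather than retraining all 16. This is the cost asymmetry the bullets above describe.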

This granular approach makes large-scale verifiable ML training possible without bottlenecking on redundant computation or centralized trust. It also sets Gensyn apart from earlier distributed computing projects that struggled with scalability or security trade-offs.

Tackling Hardware Variance with RepOps

A persistent challenge in distributed machine learning is hardware-induced non-determinism. Different GPUs or CPUs may execute floating-point operations in slightly different orders. Because floating-point addition is not associative, those reorderings produce tiny output variations that can undermine reproducibility, and thus verifiability.

Gensyn addresses this directly with its RepOps library: a suite of machine learning operators engineered for bitwise reproducibility across diverse hardware setups. By enforcing strict execution order and numerical consistency at every step, RepOps ensures that honest nodes will always produce identical outputs for the same task input. This is foundational for reliable dispute resolution within Verde and elevates Gensyn above generic compute-sharing protocols.
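The ordering problem is easy to reproduce in plain Python. The sketch below illustrates the principle only; it does not use RepOps' actual API or kernels. `sum_in_order` and `fixed_order_sum` are hypothetical helpers, and the two "devices" are simulated by choosing different accumulation orders.

```python
import math
from typing import Iterable, List, Sequence


def sum_in_order(values: Sequence[float], order: Iterable[int]) -> float:
    """Accumulate values[i] for i in `order`, rounding after every addition."""
    total = 0.0
    for i in order:
        total += values[i]
    return total


def fixed_order_sum(values: Sequence[float]) -> float:
    """Reproducible reduction: always accumulate left to right in index order."""
    return sum_in_order(values, range(len(values)))


# Non-associativity: grouping alone changes the rounded result.
print((0.1 + 0.2) + 0.3 == 0.1 + (0.2 + 0.3))  # False

# Two "devices" that schedule the same reduction differently disagree.
values: List[float] = [1e20, 1.0, -1e20]
device_a = sum_in_order(values, [0, 1, 2])  # (1e20 + 1.0) - 1e20: the 1.0 is absorbed
device_b = sum_in_order(values, [0, 2, 1])  # (1e20 - 1e20) + 1.0
print(device_a, device_b)  # 0.0 1.0

# Pinning one order for everyone makes all honest nodes agree bit for bit.
print(fixed_order_sum(values))  # 0.0

# math.fsum is a different order-independent option: it returns a correctly rounded sum.
print(math.fsum(values))  # 1.0
```

Note the design trade-off. A pinned order gives reproducibility, not accuracy: every node agrees on 0.0 even though the exact sum is 1.0. For verification, agreement is what matters, because honest nodes must produce identical bits.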

An Incentivized Ecosystem: Submitters, Solvers, Verifiers and Whistleblowers

The strength of any DePIN network lies in its incentive design. Gensyn orchestrates a multi-role ecosystem:

- Submitters: Users who provide ML tasks and pay rewards for successful training completion.

- Solvers: Nodes executing tasks using their own hardware resources; they generate cryptographic proofs as evidence of correct work.

- Verifiers: Randomly selected participants who partially re-execute tasks to validate solver claims; they earn fees by ensuring accuracy.

- Whistleblowers: Any participant able to detect fraud or errors can challenge results; successful challenges are rewarded from slashed stakes.
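The money flows between these roles can be modeled as a small escrow-and-stake ledger. Everything in this sketch is hypothetical: the `Ledger` class, the amounts, and the rule that a whistleblower receives half of a slashed stake while the rest is burned are illustrative assumptions, not Gensyn's published parameters. Verifier fees are omitted for brevity.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class Ledger:
    """Toy accounting for submitters, solvers and whistleblowers (hypothetical rules)."""
    balances: Dict[str, int] = field(default_factory=dict)
    stakes: Dict[str, int] = field(default_factory=dict)
    escrow: Dict[str, int] = field(default_factory=dict)  # task_id -> locked reward
    burned: int = 0

    def deposit(self, who: str, amount: int) -> None:
        self.balances[who] = self.balances.get(who, 0) + amount

    def _debit(self, who: str, amount: int) -> None:
        if self.balances.get(who, 0) < amount:
            raise ValueError(f"{who} has insufficient balance")
        self.balances[who] -= amount

    def bond(self, solver: str, amount: int) -> None:
        """Solver locks a stake that is slashed if its result is proven wrong."""
        self._debit(solver, amount)
        self.stakes[solver] = self.stakes.get(solver, 0) + amount

    def post_task(self, submitter: str, task_id: str, reward: int) -> None:
        """Submitter locks the reward in escrow when posting a task."""
        self._debit(submitter, reward)
        self.escrow[task_id] = reward

    def settle(self, task_id: str, submitter: str, solver: str,
               fraud_proven: bool, whistleblower: Optional[str] = None,
               whistleblower_share: float = 0.5) -> None:
        reward = self.escrow.pop(task_id)
        if not fraud_proven:
            self.deposit(solver, reward)  # honest work gets paid
            return
        self.deposit(submitter, reward)  # refund the submitter
        slashed = self.stakes.pop(solver, 0)  # confiscate the solver's whole stake
        bounty = int(slashed * whistleblower_share) if whistleblower else 0
        if whistleblower:
            self.deposit(whistleblower, bounty)  # reward the successful challenge
        self.burned += slashed - bounty


ledger = Ledger()
ledger.deposit("alice", 100)  # submitter
ledger.deposit("bob", 50)     # solver
ledger.bond("bob", 50)
ledger.post_task("alice", "task-1", 100)
ledger.settle("task-1", "alice", "bob", fraud_proven=True, whistleblower="carol")
print(ledger.balances)  # {'alice': 100, 'bob': 0, 'carol': 25}
```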

This dynamic not only deters malicious behavior but also fosters robust collaboration among global participants – from solo GPU owners to institutional compute providers – all coordinated by smart contracts on Gensyn’s purpose-built blockchain layer.

Beyond the technical elegance of Verde and RepOps, Gensyn’s architecture is designed for real-world usability and scale. The protocol’s RL Swarm application serves as a compelling demonstration of decentralized AI in action. Here, models are trained collaboratively by a swarm of nodes, each contributing to, critiquing, and improving the collective intelligence. This not only accelerates model convergence but also democratizes access to advanced reinforcement learning techniques that would otherwise be gated behind expensive centralized compute clusters.

What makes this especially powerful is the transparent accounting of contributions: every node’s work and its resulting impact on model performance is tracked on-chain. This opens the door to new forms of open-source AI development and new reward structures. Imagine a global leaderboard where contributors are compensated in real time based on measurable advances in model quality.
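One simple way to turn "measurable advances in model quality" into payouts is to split a reward pool in proportion to each node's measured improvement. This sketch makes several assumptions:

- A per-node improvement score (here in arbitrary evaluation-score points) has already been measured and recorded.
- Non-positive contributions earn nothing.

`proportional_payout` and `leaderboard` are hypothetical names. How RL Swarm actually attributes and records contributions is not modeled.

```python
from typing import Dict, List, Tuple


def proportional_payout(improvements: Dict[str, float], pool: float) -> Dict[str, float]:
    """Split `pool` among nodes in proportion to their positive measured improvement."""
    positive = {node: max(delta, 0.0) for node, delta in improvements.items()}
    total = sum(positive.values())
    if total == 0:
        return {node: 0.0 for node in improvements}  # nobody improved the model
    return {node: pool * delta / total for node, delta in positive.items()}


def leaderboard(payouts: Dict[str, float]) -> List[Tuple[str, float]]:
    """Rank nodes by payout, highest first."""
    return sorted(payouts.items(), key=lambda kv: kv[1], reverse=True)


improvements = {"node-a": 3.0, "node-b": 1.0, "node-c": -2.0}
payouts = proportional_payout(improvements, pool=1000.0)
print(leaderboard(payouts))  # [('node-a', 750.0), ('node-b', 250.0), ('node-c', 0.0)]
```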

Security, Trust, and Economic Alignment

Security is not just a feature; it is existential for any DePIN network built around verifiable ML training. Gensyn weaves together cryptographic proofs with game-theoretic incentives so that honest behavior is designed to be in every participant’s best interest. Submitters gain confidence that their models are trained faithfully; solvers are motivated by direct payment and reputation; verifiers and whistleblowers keep everyone honest through active oversight and slashing mechanisms.
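The claim that honesty pays can be checked with a back-of-the-envelope expected-value model. This is a stylized assumption, not Gensyn's published mechanism. A solver that fakes work saves C_full - C_cheap in compute. It is caught with probability p, and when caught it forfeits both the reward R and its stake S. Subtracting the two expected payoffs gives p(R + S) - (C_full - C_cheap). Honesty therefore dominates exactly when p(R + S) > C_full - C_cheap. The function names and numbers below are illustrative.

```python
from typing import Tuple


def expected_payoffs(reward: float, full_cost: float, cheap_cost: float,
                     stake: float, p_caught: float) -> Tuple[float, float]:
    """Return (honest, cheating) expected payoffs for one task in a toy model.

    Honest: do the full work and always get paid.
    Cheating: do cheaper fake work; if caught (prob. p_caught) lose the reward
    and the stake, otherwise get paid as if honest.
    """
    honest = reward - full_cost
    cheating = (1 - p_caught) * reward - cheap_cost - p_caught * stake
    return honest, cheating


def detection_threshold(reward: float, full_cost: float, cheap_cost: float,
                        stake: float) -> float:
    """Detection probability above which honesty strictly beats cheating."""
    return (full_cost - cheap_cost) / (reward + stake)


# Illustrative numbers only (not Gensyn parameters).
R, C_FULL, C_CHEAP, S = 100, 60, 10, 200
print(detection_threshold(R, C_FULL, C_CHEAP, S))       # 0.16666666666666666
print(expected_payoffs(R, C_FULL, C_CHEAP, S, 0.25))    # (40, 15.0): honesty pays
print(expected_payoffs(R, C_FULL, C_CHEAP, S, 0.0625))  # (40, 71.25): cheating pays
```

The formula shows the design lever. A larger stake S lowers the detection probability needed for deterrence, so the network can spot-check less often and still keep cheating unprofitable.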

This design also lowers barriers for smaller developers or startups who lack access to proprietary cloud infrastructure. By making distributed GPU compute for AI both accessible and trustworthy, Gensyn empowers innovation at the edges, not just within tech giants’ data centers.

The Four Key Roles in Gensyn’s Decentralized AI Ecosystem

- Submitters initiate the process by posting AI training tasks to the Gensyn network. They specify requirements, datasets, and rewards, creating opportunities for global compute providers to participate. Submitters fund the tasks, ensuring solvers are incentivized to deliver accurate results.

- Solvers are decentralized compute providers who execute the submitted training tasks. They use their own hardware to train models, then generate cryptographic proofs of their work. Solvers rely on Gensyn’s RepOps library to ensure reproducibility across diverse hardware and are rewarded upon successful verification.

- Verifiers play a crucial role by replicating and checking the solvers’ proofs. Using the Verde verification protocol, they pinpoint discrepancies in computations, ensuring results are trustworthy. Verifiers help maintain the integrity of the network and are compensated for their diligence.

- Whistleblowers act as the network’s watchdogs. They monitor for dishonest behavior, such as incorrect proofs or manipulated results. When they detect fraud, whistleblowers can challenge the outcome, triggering dispute resolution and potential slashing of malicious actors’ stakes, thus upholding the network’s honesty.
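Read together, the four roles describe a task lifecycle. The state machine below is a hypothetical simplification: the state names, the allowed transitions and the single challenge step are assumptions made for illustration, not Gensyn's contract interface.

```python
from enum import Enum, auto
from typing import Dict, Set


class TaskState(Enum):
    POSTED = auto()      # submitter has escrowed the reward
    SUBMITTED = auto()   # solver has posted its result and proof
    CHALLENGED = auto()  # a verifier or whistleblower disputes the result
    FINALIZED = auto()   # result accepted, solver paid
    SLASHED = auto()     # fraud proven, solver's stake slashed


ALLOWED: Dict[TaskState, Set[TaskState]] = {
    TaskState.POSTED: {TaskState.SUBMITTED},
    TaskState.SUBMITTED: {TaskState.CHALLENGED, TaskState.FINALIZED},
    TaskState.CHALLENGED: {TaskState.FINALIZED, TaskState.SLASHED},
    TaskState.FINALIZED: set(),  # terminal
    TaskState.SLASHED: set(),    # terminal
}


class Task:
    def __init__(self, task_id: str) -> None:
        self.task_id = task_id
        self.state = TaskState.POSTED
        self.history = [self.state]

    def advance(self, new_state: TaskState) -> None:
        """Move to `new_state`, rejecting transitions the protocol does not allow."""
        if new_state not in ALLOWED[self.state]:
            raise ValueError(f"illegal transition {self.state.name} -> {new_state.name}")
        self.state = new_state
        self.history.append(new_state)


task = Task("task-1")
task.advance(TaskState.SUBMITTED)
task.advance(TaskState.CHALLENGED)  # a whistleblower disputes the result
task.advance(TaskState.SLASHED)     # dispute resolution finds fraud
print([s.name for s in task.history])  # ['POSTED', 'SUBMITTED', 'CHALLENGED', 'SLASHED']
```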

The Road Ahead: Scaling DePIN Training Verification

The implications of state-dependent compute blockchains like Gensyn extend far beyond today’s use cases. As models grow larger and more specialized (think custom LLMs or edge-deployed vision systems), the need for scalable, trustless training verification will only intensify. Gensyn’s modular approach means it can adapt quickly as new operator types or hardware architectures emerge.

Moreover, by integrating with broader Web3 infrastructure, Gensyn positions itself at the intersection of decentralized compute markets and tokenized incentive layers. This could catalyze entirely new business models around open-source AI development or privacy-preserving federated learning, areas where traditional cloud providers have struggled to offer credible solutions.

Why It Matters Now

The market for decentralized AI compute is heating up rapidly as demand outpaces what centralized clouds can deliver affordably or transparently. With its focus on verifiable ML training over DePIN, hardware-agnostic reproducibility, and robust incentive alignment, Gensyn stands out as a blueprint for how next-generation AI infrastructure should operate: open by default, secure by design, and economically inclusive.

If you’re building, or betting on, the future of distributed machine intelligence, understanding protocols like Gensyn isn’t optional; it’s foundational.