Machine learning is fast becoming the backbone of digital innovation, but the traditional approach to AI compute is showing its limits. Centralized data centers face mounting issues with cost, privacy, and scalability. Enter decentralized AI compute networks: a new paradigm where blockchain technology and distributed infrastructure combine to power the next generation of AI applications. By leveraging idle computational resources across a global network of nodes, these systems are transforming how we train, deploy, and monetize machine learning models.

Why Decentralized Compute Is Reshaping Blockchain Machine Learning

The convergence of blockchain and machine learning has always promised more than just buzzwords. Decentralized networks are now delivering tangible benefits that address the core pain points in AI infrastructure:

- Data Privacy: Sensitive data can remain local, with only model updates shared across the network. This approach minimizes exposure and aligns with evolving privacy regulations.

- Scalability: Distributed computation allows for massive scaling by tapping into thousands of independent nodes equipped with GPUs or specialized hardware.

- Cost Efficiency: By drawing on underused hardware around the world, decentralized networks can substantially reduce compute costs relative to centralized cloud providers.

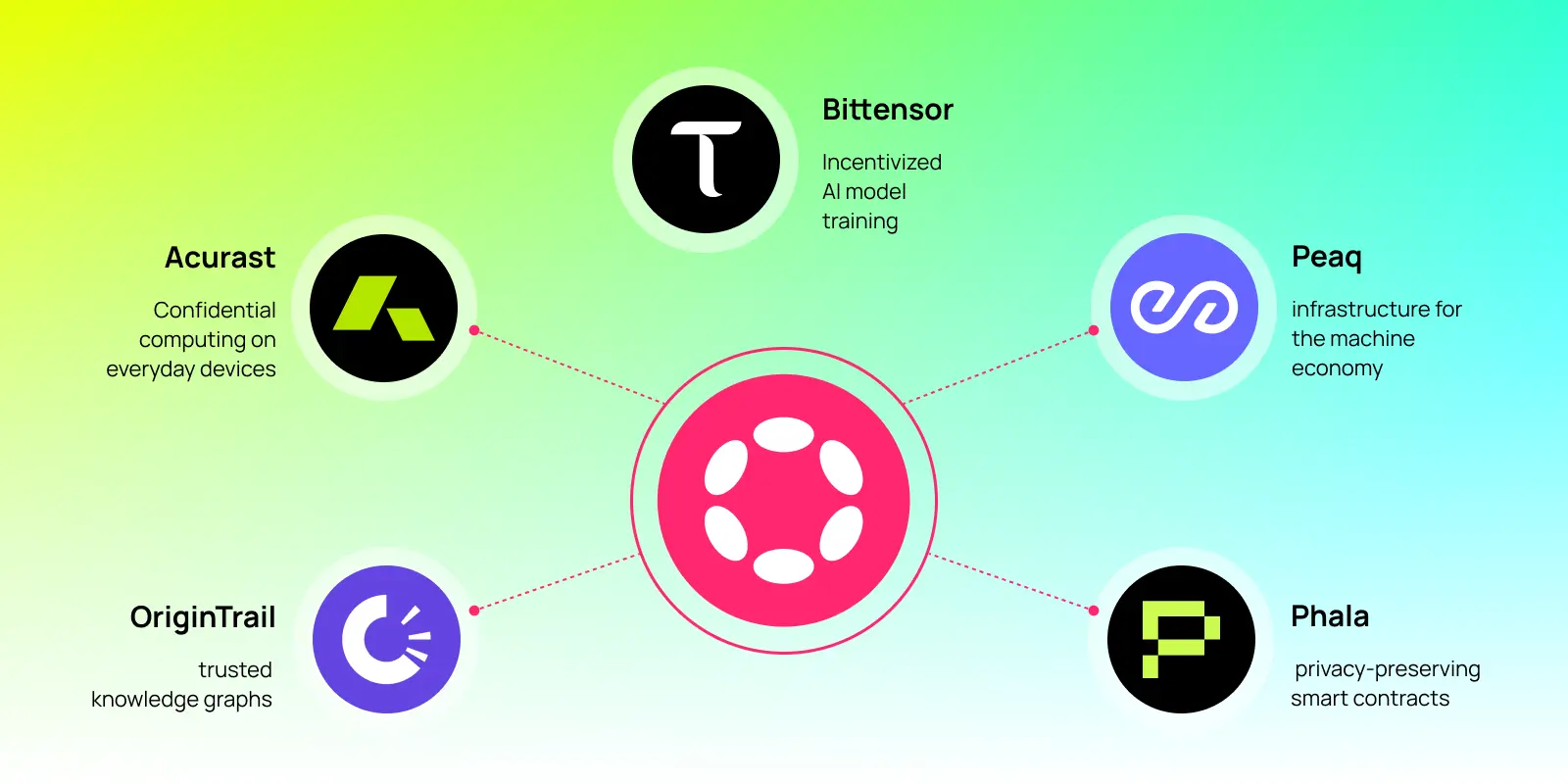

These advantages are no longer theoretical. Projects like Akash Network, Gensyn, and Bittensor are already making waves by offering real-world decentralized compute solutions for blockchain machine learning.

Pioneers in Distributed AI Infrastructure

The landscape is rapidly evolving, with several standout projects leading the charge in crypto-powered AI compute:

Leading Decentralized AI Compute Projects

- Akash Network: An open-source, decentralized cloud marketplace that enables users to buy and sell compute resources, including enterprise-grade GPUs, for AI and machine learning tasks. Akash lowers costs and increases accessibility by leveraging underutilized global infrastructure.

- Bittensor: A blockchain-based protocol that incentivizes the development, sharing, and access of machine learning models through a decentralized network. Bittensor fosters a transparent, censorship-resistant marketplace for AI models and compute.

- Gensyn: This platform connects distributed compute nodes to orchestrate scalable and cost-effective machine learning training. Gensyn enables developers to access large-scale compute without relying on centralized providers.

- Nous Research: Focused on decentralized AI training, Nous Research utilizes a permissionless network of compute contributors to efficiently train large language models, promoting open and collaborative AI development.

- Planck Network: A blockchain infrastructure company offering a decentralized compute network aimed at reducing costs for artificial intelligence and machine learning workloads, while enhancing security and scalability.

- Qubic: Provides ultra-fast, scalable blockchain infrastructure specifically designed for AI compatibility and decentralized machine learning, facilitating efficient and secure AI model training and deployment.

Bittensor, for example, creates an open marketplace for machine learning models where contributors are rewarded in crypto for sharing valuable models or computational power. This not only incentivizes participation but also fosters a transparent ecosystem for sharing and improving AI capabilities.

Akash Network, initially focused on general-purpose compute marketplaces, has expanded to provide access to enterprise-grade GPUs via a decentralized cloud platform. This shift matters because demand for GPU capacity to train AI models continues to surge.
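To make the marketplace idea concrete, here is a minimal sketch of how a buyer might pick among competing compute offers. It is an illustration only, not the bidding protocol of Akash or any other network: the `Bid` fields, the provider names, and the `select_bid` helper are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Bid:
    provider: str          # hypothetical provider identifier
    gpu_count: int         # number of GPUs the provider offers
    price_per_hour: float  # asking price for the lease, in arbitrary units


def select_bid(bids: list[Bid], gpus_needed: int, max_price: float) -> Optional[Bid]:
    """Return the cheapest bid that satisfies the request, or None if none does."""
    eligible = [
        b for b in bids
        if b.gpu_count >= gpus_needed and b.price_per_hour <= max_price
    ]
    # Ties on price are broken by provider name so the result is deterministic.
    return min(eligible, key=lambda b: (b.price_per_hour, b.provider), default=None)


bids = [
    Bid("provider-a", gpu_count=8, price_per_hour=12.0),
    Bid("provider-b", gpu_count=4, price_per_hour=5.5),
    Bid("provider-c", gpu_count=8, price_per_hour=9.0),
]
winner = select_bid(bids, gpus_needed=8, max_price=10.0)
print(winner)
```

Here the cheapest offer overall (`provider-b`) is skipped because it has too few GPUs, and `provider-a` exceeds the price ceiling, so `provider-c` wins. A real marketplace would also weigh factors such as provider reputation, region, and hardware type.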

The Technology Behind Crypto-Powered AI Compute Networks

The success of these platforms hinges on key technological innovations that enable trustless collaboration at scale:

- Proof-of-Compute: A cryptographic mechanism that verifies that computational tasks (like model training) have been performed correctly before rewards are distributed. This ensures both performance integrity and fair compensation.

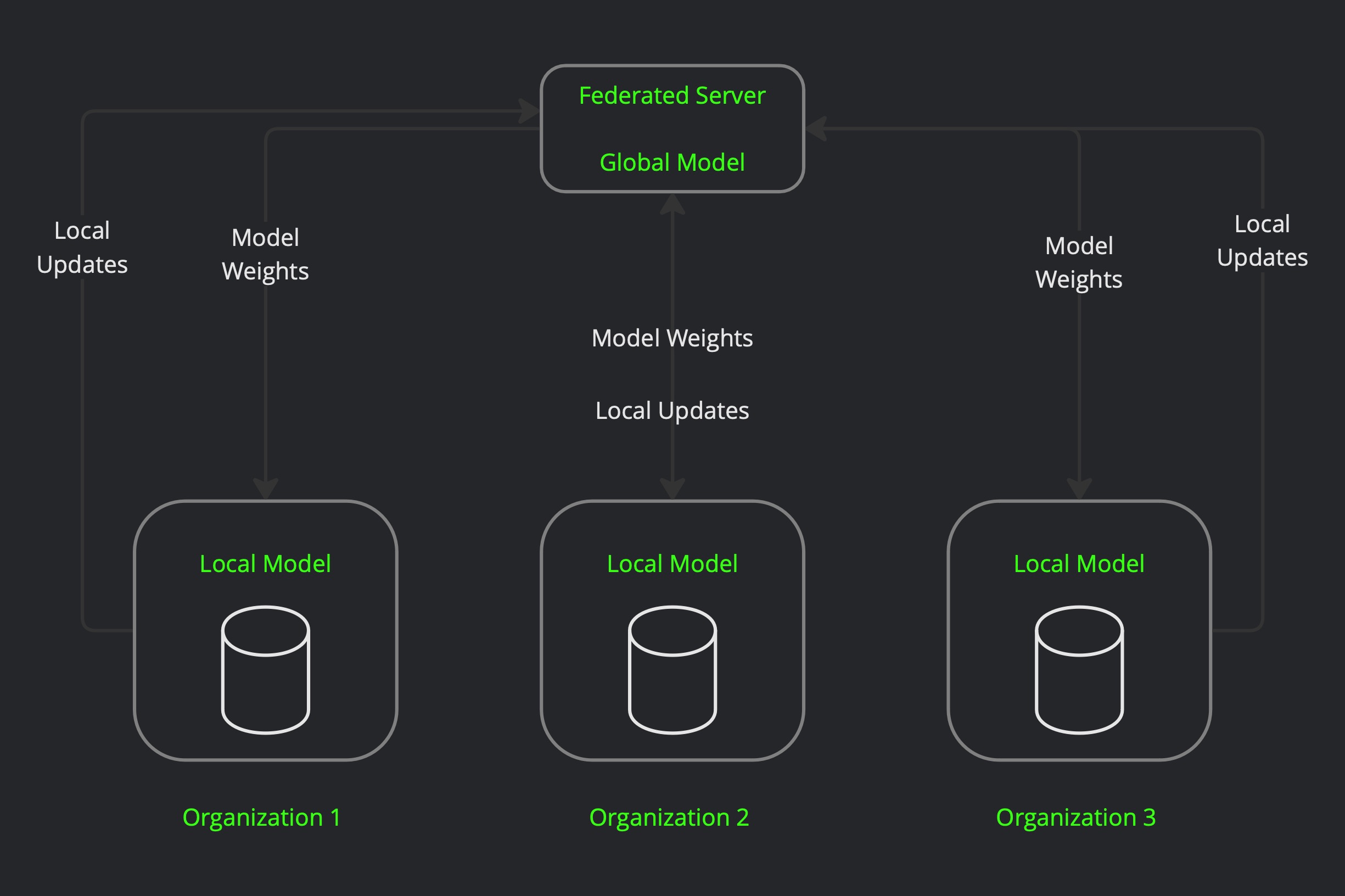

- Federated Learning: Instead of pooling raw data centrally, multiple participants train a shared model collaboratively while keeping their data local, a breakthrough for privacy-sensitive industries.

- Censorship Resistance: By distributing both computation and model hosting across many independent nodes, these networks reduce single points of failure or control, critical as AI becomes more embedded in societal infrastructure.
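The federated learning idea above can be sketched in a few lines. This is a toy version of federated averaging under simplifying assumptions: a one-dimensional linear model, one gradient step per client per round, and plain Python instead of an ML framework. The function names, learning rate, and data are illustrative and not taken from any of the projects mentioned.

```python
def local_update(weights, data, lr=0.05):
    """One gradient-descent step of the model y = w*x + b on a client's own data."""
    w, b = weights
    n = len(data)
    grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
    grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
    return (w - lr * grad_w, b - lr * grad_b)


def federated_average(updates):
    """Average client weights, weighting each client by its sample count."""
    total = sum(n for _, n in updates)
    dim = len(updates[0][0])
    return tuple(sum(w[i] * n for w, n in updates) / total for i in range(dim))


# Each client's raw data stays in its own list; only weights leave the client.
# The data follows y = 2x + 1, split unevenly across two clients.
clients = [
    [(1.0, 3.0), (2.0, 5.0)],
    [(3.0, 7.0), (4.0, 9.0), (5.0, 11.0)],
]

global_weights = (0.0, 0.0)
for _ in range(2000):
    # Clients train locally and report (new_weights, sample_count) to the aggregator.
    updates = [(local_update(global_weights, d), len(d)) for d in clients]
    global_weights = federated_average(updates)

print(global_weights)
```

The aggregator never sees an `(x, y)` pair, only weight tuples and sample counts, yet the shared model should approach `w ≈ 2, b ≈ 1`. Production systems typically add secure aggregation or differential privacy on top, since model updates alone can still leak information.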

This shift doesn’t just impact developers; it opens up new opportunities for enterprises seeking scalable, secure solutions without surrendering control over their proprietary data or relying on monolithic tech giants.

One of the most compelling aspects of decentralized AI compute networks is their ability to democratize access to advanced machine learning. By lowering the barriers to entry, these platforms allow independent developers, startups, and even academic researchers to compete on a more level playing field with established tech firms. Access to vast GPU clusters, once reserved for those with deep pockets, is no longer an absolute prerequisite for innovation.

Take Nous Research, for instance. By orchestrating a permissionless network of compute contributors, they facilitate collaborative training of large language models without the gatekeeping typically found in centralized environments. This approach not only accelerates AI research but also ensures that breakthroughs are shared more widely across the ecosystem.

Challenges and Trade-Offs in Distributed AI Compute

No technological leap is without its growing pains. While decentralized AI compute networks offer significant advantages in terms of privacy and cost, they also introduce new complexities:

- Performance Variability: The global nature of node participation means compute quality can vary widely, impacting consistency for time-sensitive workloads.

- Network Coordination: Ensuring efficient task distribution and result aggregation across thousands of nodes requires robust orchestration protocols and incentive mechanisms.

- Security Risks: Although decentralization mitigates certain attack vectors, adversarial nodes may attempt to submit fraudulent results, making robust proof-of-compute essential.
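One simple way to catch fraudulent results is redundant execution: send the same task to several nodes and accept only the answer a majority agrees on. The sketch below shows that idea. It is not a cryptographic proof-of-compute scheme and does not describe how any specific network verifies work; the helper names and the quorum rule are assumptions, and exact hash comparison assumes the task is deterministic.

```python
import hashlib
import json
from collections import Counter


def result_digest(result) -> str:
    """Hash a JSON-serializable result so submissions can be compared cheaply."""
    encoded = json.dumps(result, sort_keys=True).encode("utf-8")
    return hashlib.sha256(encoded).hexdigest()


def verify_by_majority(submissions: dict, quorum: float = 0.5):
    """Accept the result reported by more than `quorum` of the nodes.

    Returns (accepted_result, agreeing_nodes, flagged_nodes). When no result
    reaches the quorum, nothing is accepted and no node is singled out.
    """
    if not submissions:
        return None, [], []
    digests = {node: result_digest(res) for node, res in submissions.items()}
    top_digest, votes = Counter(digests.values()).most_common(1)[0]
    if votes <= quorum * len(submissions):
        return None, [], []
    agreeing = sorted(n for n, d in digests.items() if d == top_digest)
    flagged = sorted(n for n, d in digests.items() if d != top_digest)
    return submissions[agreeing[0]], agreeing, flagged


submissions = {
    "node-1": {"loss": 0.25, "step": 100},
    "node-2": {"loss": 0.25, "step": 100},
    "node-3": {"loss": 0.01, "step": 100},  # faulty or fraudulent result
}
accepted, agreeing, flagged = verify_by_majority(submissions)
print(accepted, agreeing, flagged)
```

Replication multiplies compute cost by the number of redundant nodes, which is why networks look for cheaper verification methods. It also fails if a majority of the selected nodes collude.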

The industry is responding with rapid innovation. Projects like Gensyn are developing adaptive scheduling algorithms that dynamically allocate tasks based on node reliability and performance history. Meanwhile, advances in federated learning are making it possible for even privacy-sensitive sectors (like healthcare or finance) to benefit from distributed AI infrastructure without compromising data security.
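As a rough illustration of reliability-aware scheduling, the sketch below tracks each node's success history as an exponential moving average and assigns work to the highest-scoring nodes. This is a generic technique, not Gensyn's algorithm; the `NodeStats` class, the starting score of 0.5, and the smoothing factor are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class NodeStats:
    name: str
    reliability: float = 0.5  # assumed prior: unknown nodes start in the middle

    def record(self, success: bool, alpha: float = 0.2) -> None:
        """Update an exponential moving average of outcomes (1 = success, 0 = failure)."""
        outcome = 1.0 if success else 0.0
        self.reliability = (1 - alpha) * self.reliability + alpha * outcome


def pick_nodes(nodes: list[NodeStats], k: int) -> list[NodeStats]:
    """Choose the k nodes with the highest reliability; ties break by name."""
    return sorted(nodes, key=lambda n: (-n.reliability, n.name))[:k]


nodes = [NodeStats("node-a"), NodeStats("node-b"), NodeStats("node-c")]
for outcome in (True, True, True):
    nodes[0].record(outcome)   # node-a completes every task
for outcome in (False, True, False):
    nodes[1].record(outcome)   # node-b fails two of three tasks

chosen = pick_nodes(nodes, k=2)
print([n.name for n in chosen])
```

With this history, `node-a` rises above the prior, `node-b` falls below it, and the untested `node-c` sits in between, so the scheduler picks `node-a` and `node-c`. The moving average weights recent behavior more heavily, letting a node recover its standing after a bad stretch.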

The Road Ahead: Scaling Blockchain Machine Learning

The trajectory for blockchain-based machine learning is clear: as more projects enter the space and existing networks mature, expect an explosion of specialized applications leveraging crypto-powered AI compute. From decentralized marketplaces for custom models (like Ocean Protocol) to edge AI deployments that keep data local while updating global models, the possibilities are expanding rapidly.

Top Emerging Use Cases for Decentralized AI Compute in 2025

- Decentralized AI Model Training Marketplaces: Platforms like Bittensor and Nous Research are enabling developers and organizations to train machine learning models collaboratively on distributed networks. These marketplaces incentivize contributors with crypto rewards, fostering open, censorship-resistant AI development.

- Federated Learning for Privacy-Preserving AI: Decentralized compute networks are powering federated learning architectures, allowing multiple parties to train AI models collaboratively without sharing raw data. This enhances privacy and security, especially in sectors like healthcare and finance.

- Decentralized Cloud GPU Marketplaces: Projects such as Akash Network are democratizing access to GPU resources by creating open marketplaces for compute power. This reduces costs and increases accessibility for AI startups and researchers.

- AI Model Sharing and Monetization Platforms: Decentralized protocols like Ocean Protocol and Fetch.ai are enabling the secure exchange, sharing, and monetization of AI models and datasets on blockchain-based marketplaces, promoting innovation and collaboration.

- Proof-of-Compute for Trustless AI Work Verification: Networks are adopting Proof-of-Compute mechanisms to verify that AI computations (such as training or inference) are performed correctly. This ensures trustless coordination and fair compensation within decentralized AI ecosystems.

- Scalable Distributed Inference Services: Platforms like Gensyn are enabling scalable, distributed AI inference across global nodes, allowing real-time deployment of machine learning models without reliance on centralized cloud providers.

- AI-Powered Decentralized Autonomous Organizations (DAOs): Decentralized compute networks are being used to power AI-driven DAOs, where autonomous agents govern and optimize protocols, manage resources, and execute smart contracts with minimal human intervention.

This momentum is not lost on investors or enterprises seeking scalable alternatives to hyperscale cloud providers. As regulatory scrutiny around data sovereignty intensifies worldwide, organizations will increasingly turn to distributed solutions that offer both compliance and flexibility out of the box.

The convergence of blockchain and machine learning has moved from theoretical promise to pragmatic reality. Decentralized AI compute networks are no longer just experimental; they’re powering real-world workloads at scale while opening up new frontiers for innovation across industries.