For years, AI developers and enterprises have been locked into the pricing structures of hyperscale cloud providers, primarily AWS, Azure, and GCP, when it comes to running large-scale inference on GPUs. As demand for advanced models has exploded, so too have the costs and constraints associated with these centralized platforms. The rise of decentralized GPU networks is rapidly changing this equation: these platforms advertise AI inference at up to 80% below AWS’s on-demand pricing.

The Real Cost of AI Inference on AWS

To understand how decentralized GPU networks achieve such dramatic savings, it’s essential to examine the status quo. On AWS EC2, an NVIDIA Tesla V100 instance is priced at $3.06 per hour according to recent market data (GMI Cloud). For more advanced GPUs like the A100 or H100, often required for large language models or multi-modal AGI development, the hourly rates are even higher. While AWS has introduced services like Elastic Inference to reduce costs by up to 75%, actual savings are often limited by availability constraints and proprietary APIs.

This pricing structure creates a significant barrier for startups and research labs aiming to scale inference workloads. Even major enterprises are feeling the pinch; institutions like UC Berkeley and Leonardo.ai have begun migrating workloads off AWS in favor of decentralized solutions such as IO.net (BeInCrypto).

How Decentralized GPU Networks Unlock Massive Savings

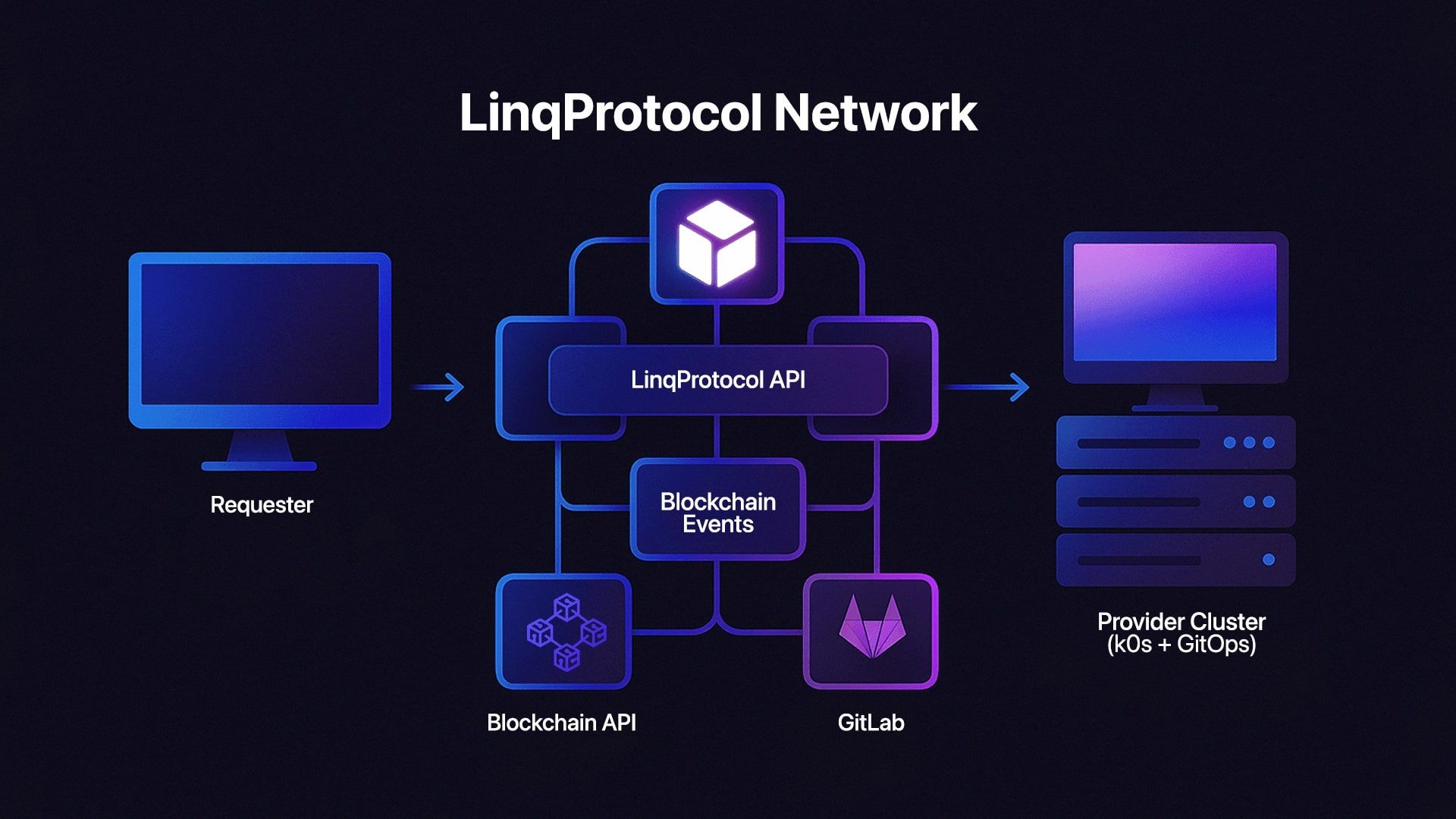

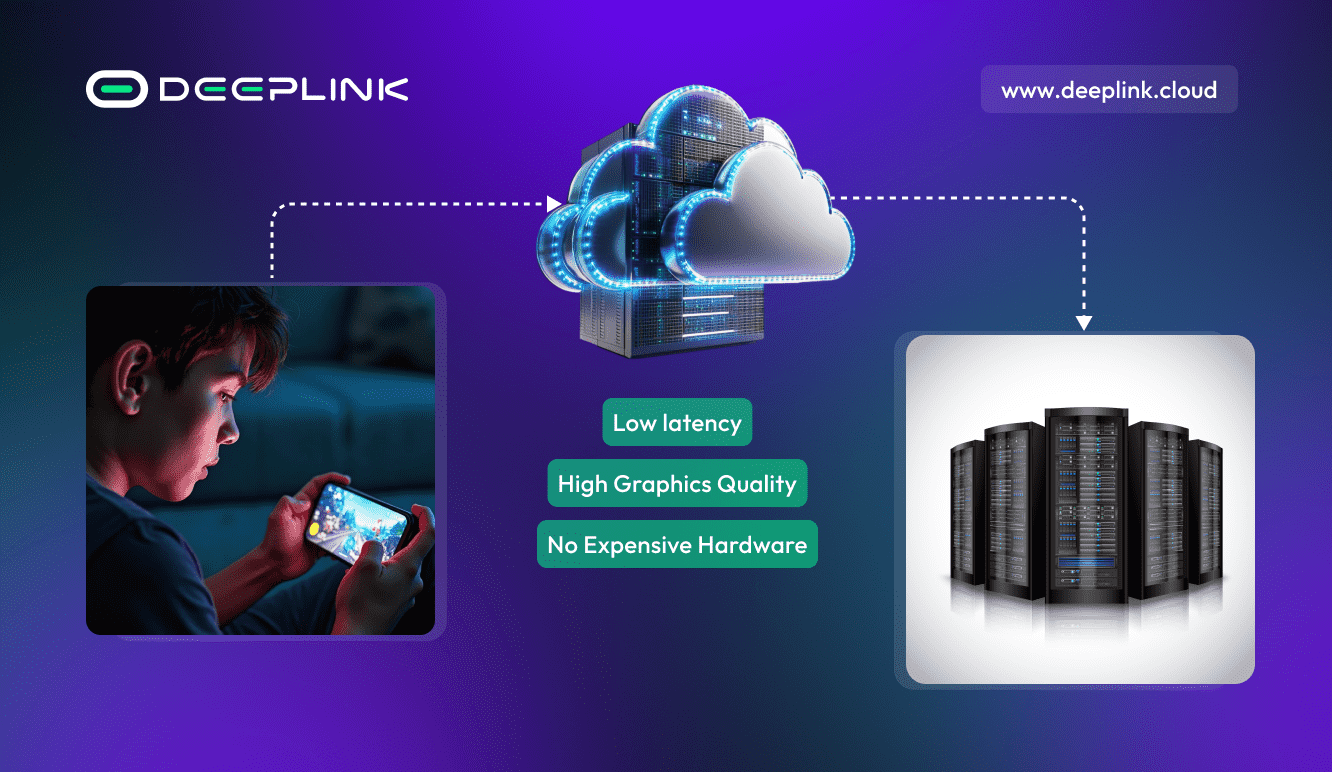

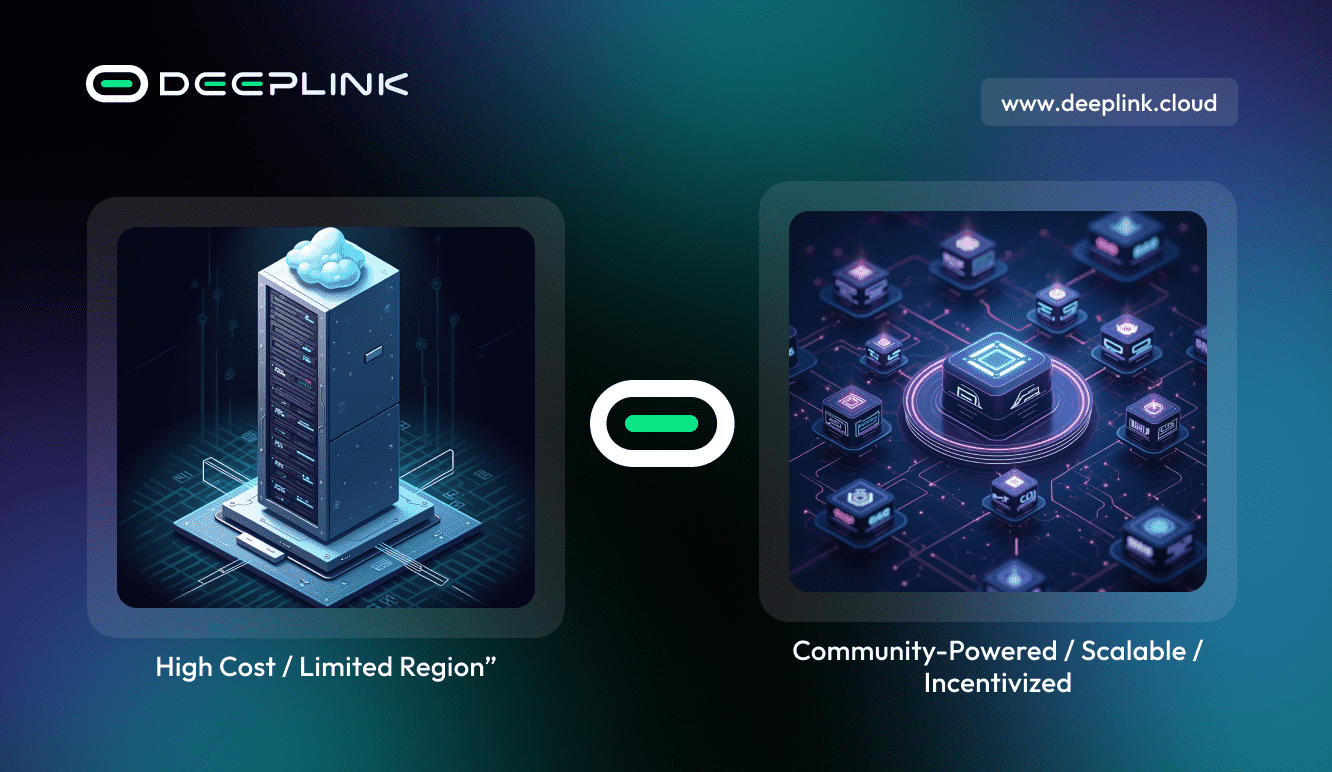

The core innovation behind decentralized GPU networks is their ability to tap into underutilized compute resources scattered across data centers, crypto mining farms, and even personal devices worldwide. Platforms like Hyperbolic’s GPU Marketplace use a decentralized operating system that orchestrates these resources into a single, globally accessible pool (hyperbolic.ai). This model enables fractionalized rentals: users pay only for what they need, when they need it.

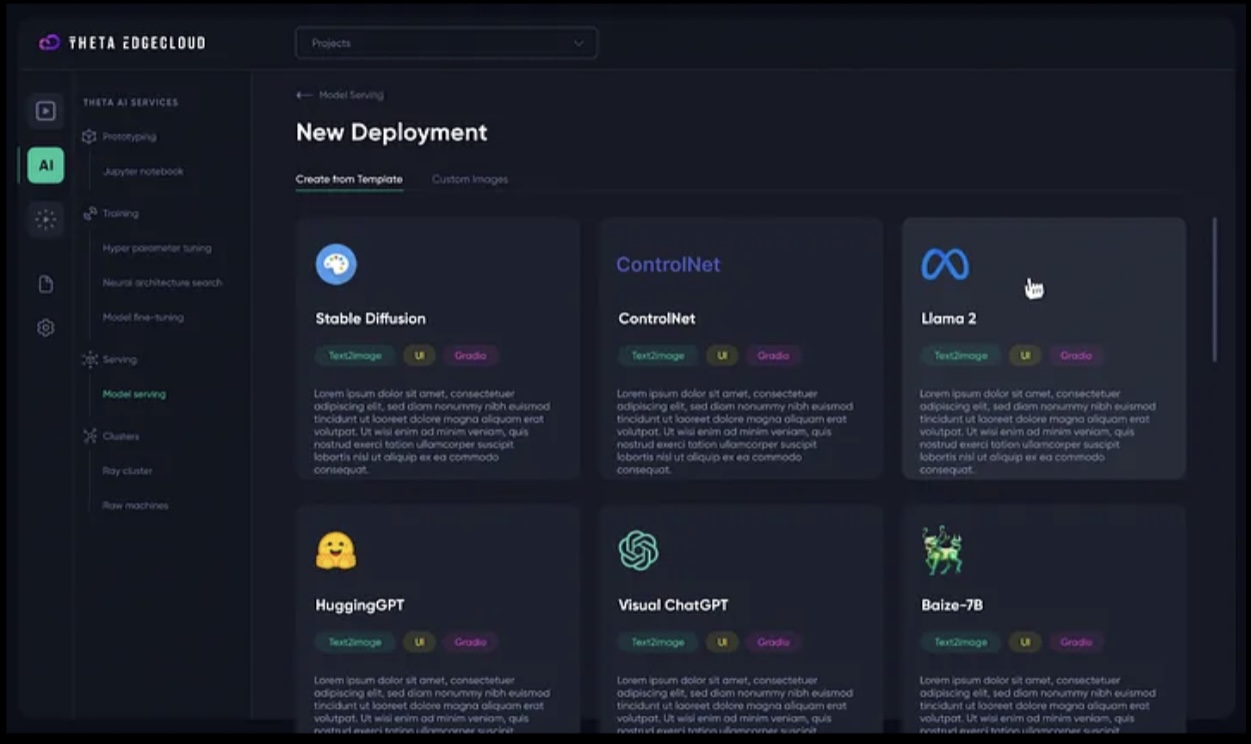

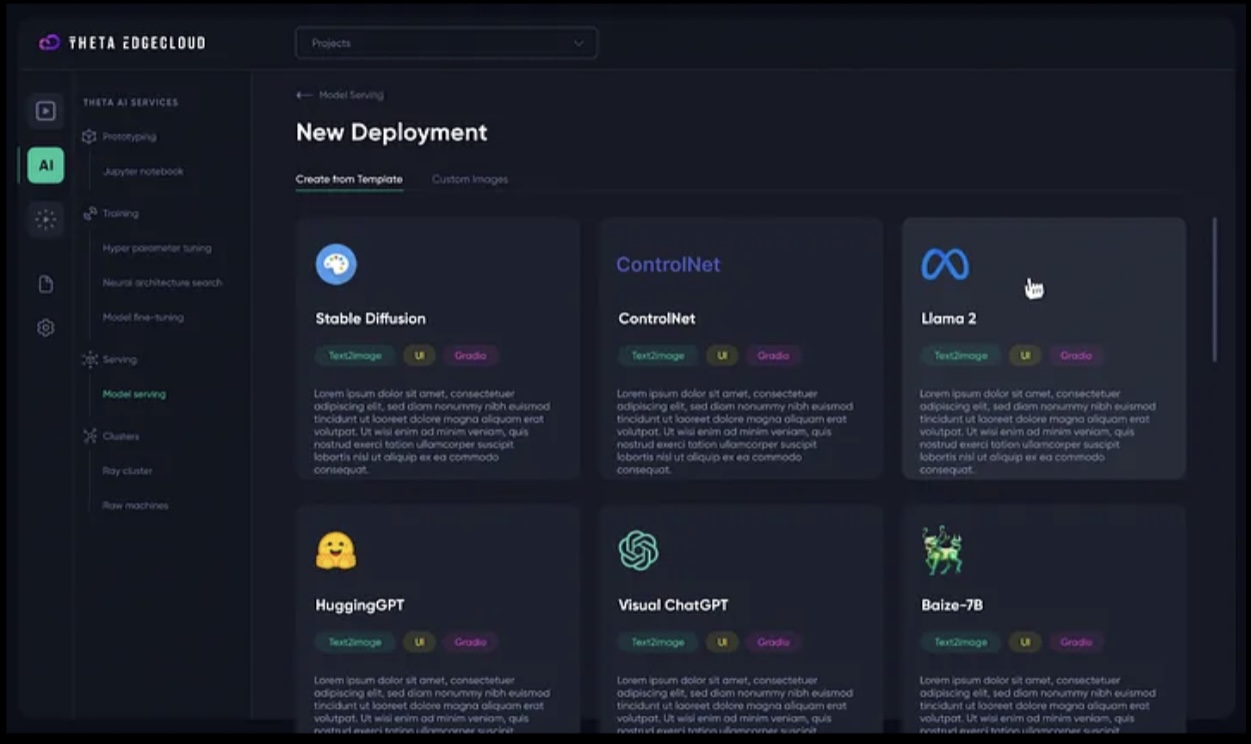

The impact is profound: Hyperbolic reports cost reductions of up to 75% compared to traditional cloud providers. Theta EdgeCloud’s integration of NVIDIA CUDA optimization has boosted node participation by 40%, resulting in over 80 petaFLOPS of distributed compute power, and users report 50–70% lower costs on rendering jobs (Blockchain Magazine). Nosana’s newly launched marketplace further democratizes access by allowing developers worldwide to rent affordable GPUs for both training and inference (Cointelegraph).

Key Differences: AWS GPU Pricing vs. Decentralized GPU Networks

- Pricing Structure: AWS uses fixed on-demand and spot pricing models, with rates for GPUs like the NVIDIA Tesla V100 at approximately $3.06 per hour on EC2. In contrast, decentralized GPU networks like IO.net, Hyperbolic, and Nosana offer dynamic, marketplace-driven pricing that can be significantly lower due to global competition and utilization of underused hardware.

- Cost Savings: Decentralized GPU networks report 50–80% lower costs for AI inference compared to AWS. For example, Hyperbolic reports cost reductions of up to 75% on its GPU Marketplace, and Theta EdgeCloud users report 50–70% lower costs on rendering jobs, figures that match or approach the up-to-75% savings AWS cites for Elastic Inference.

- Resource Utilization: AWS relies on dedicated data centers with proprietary infrastructure, often leading to underutilized capacity. Decentralized networks aggregate idle GPUs from data centers, mining farms, and individuals worldwide, increasing availability and driving down prices through higher resource utilization.

- Scalability & Flexibility: Decentralized GPU platforms like Nosana and Theta EdgeCloud enable fractionalized, on-demand rentals and rapid scaling across a global pool of hardware. AWS offers scalability but is limited by regional availability and higher peak demand pricing.

- Performance Optimization: Theta EdgeCloud integrates NVIDIA CUDA optimization, boosting performance and node participation, while AWS provides standardized GPU instances. Decentralized networks can optimize across diverse hardware, potentially delivering better price-to-performance ratios for specific workloads.

- Enterprise Adoption: Major organizations such as UC Berkeley and Leonardo.ai have migrated from AWS to decentralized GPU networks like IO.net, citing massive cost savings and improved access to compute resources.

A Side-by-Side Price Comparison: Decentralized vs. Centralized Compute

The headline numbers speak volumes. On AWS EC2, running an AI inference job on a Tesla V100 costs $3.06 per hour. Decentralized networks like Hyperbolic can offer similar or superior hardware at discounts ranging from 50% to 80%, depending on supply-demand dynamics and geographic distribution. Applied to the $3.06 baseline, that works out to roughly $1.53 per hour at the low end of the discount range and as little as $0.61 per hour at the high end, a game-changer for both lean startups and established enterprises looking to optimize margins.
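The arithmetic behind these figures is easy to reproduce. The following is a minimal sketch, not a pricing tool: the $3.06 baseline and the 50% and 80% discounts come from the figures above, while the function names and the 24-hours-a-day, 30-day month are illustrative assumptions.

```python
# Baseline quoted in this article for an AWS EC2 V100 instance (USD per hour).
AWS_V100_HOURLY_USD = 3.06


def discounted_rate(base_rate: float, discount: float) -> float:
    """Return the hourly rate after a fractional discount (0.0 to 1.0)."""
    if not 0.0 <= discount <= 1.0:
        raise ValueError("discount must be between 0 and 1")
    return base_rate * (1.0 - discount)


def monthly_cost(hourly_rate: float, hours_per_day: float = 24.0, days: int = 30) -> float:
    """Project a monthly bill; continuous usage for 30 days is an assumption."""
    return hourly_rate * hours_per_day * days


if __name__ == "__main__":
    baseline_month = monthly_cost(AWS_V100_HOURLY_USD)
    print(f"AWS baseline: ${AWS_V100_HOURLY_USD:.2f}/hour, ${baseline_month:,.2f}/month")
    for discount in (0.50, 0.80):  # the discount range cited above
        rate = discounted_rate(AWS_V100_HOURLY_USD, discount)
        print(f"{discount:.0%} discount: ${rate:.2f}/hour, ${monthly_cost(rate):,.2f}/month")
```

Run as a script, this prints hourly rates of $1.53 and $0.61 for the two discount levels. Actual marketplace prices fluctuate with supply and demand, so the constants should be replaced with live quotes before drawing conclusions.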

These cost dynamics are not just theoretical; they’re already driving real-world adoption among leaders in AI innovation who are seeking scalable solutions without sacrificing performance or security.

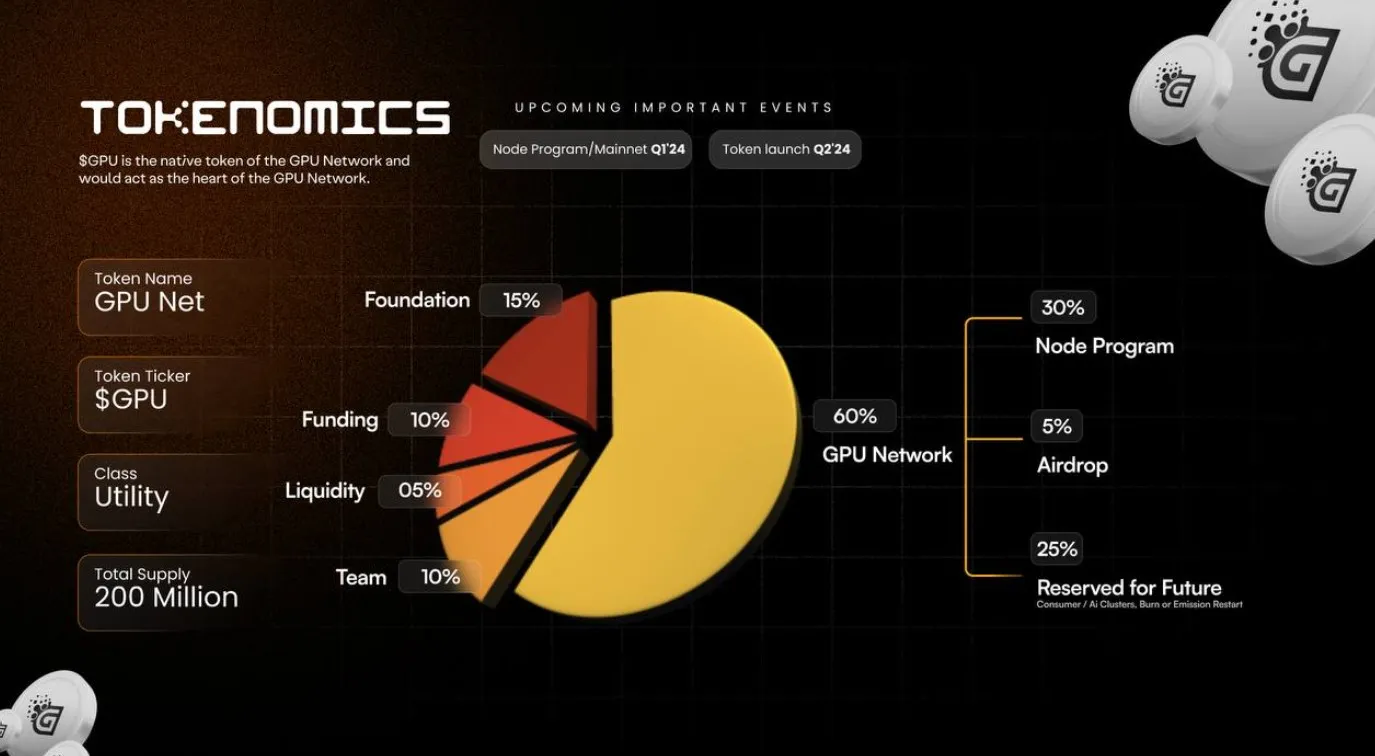

Beyond raw cost savings, decentralized GPU networks are introducing new incentive models that further differentiate them from legacy cloud providers. Many platforms leverage native tokens and crypto-powered mechanisms to reward node operators and facilitate transparent, low-friction transactions. This approach not only drives higher node participation but also creates a virtuous cycle of supply growth and price competition, ultimately benefiting developers and enterprise users with even lower AI inference costs.

For example, Nosana’s marketplace taps into a global pool of underutilized GPUs, letting developers scale workloads elastically and affordably. Because the network is decentralized, there’s no single point of failure or vendor lock-in, making it easier to avoid the unpredictable spot pricing and regional shortages that often plague AWS and other hyperscalers. The democratization of access means researchers in emerging markets can now train and deploy state-of-the-art models at a fraction of historical costs. This is more than just incremental savings; it’s a structural shift in how AI compute markets operate.

The Broader Impact: Fueling Multi-Modal AGI and Open Innovation

With inference costs dropping as much as 80% compared to AWS’s $3.06 per hour baseline for V100s, previously cost-prohibitive projects, like multi-modal AGI development or large-scale generative AI applications, are suddenly within reach for smaller teams and open-source communities. This shift is catalyzing a surge in experimentation across domains ranging from medical imaging to real-time content generation.

Importantly, these networks are not standing still technologically. Solutions like Theta EdgeCloud have integrated NVIDIA CUDA optimization for heterogeneous hardware environments, while Hyperbolic’s fractionalized rental model ensures that compute resources can be matched precisely to workload requirements. The result: higher utilization rates for node operators and dramatically improved efficiency for end-users.

Key Benefits of Switching from AWS to Decentralized GPU Networks

- Significant Cost Savings: Decentralized GPU networks like Hyperbolic and Theta EdgeCloud can reduce AI inference costs by up to 80% compared to AWS, thanks to fractionalized, on-demand rentals of underutilized GPUs worldwide.

- Global Scalability and Resource Availability: Platforms such as Nosana and Hyperbolic tap into GPUs from data centers, mining farms, and personal devices, providing a vast, scalable pool of compute power that can flexibly meet fluctuating AI workload demands.

- Enhanced Performance Through Optimization: Theta EdgeCloud integrates NVIDIA CUDA optimization, boosting node participation and delivering over 80 petaFLOPS of distributed compute power for AI inference and rendering tasks.

- Democratized Access to AI Compute: By leveraging decentralized networks, developers and enterprises of all sizes can access affordable, high-performance GPUs, making advanced AI model training and inference more accessible than ever before.

- Reduced Vendor Lock-In: Decentralized GPU marketplaces allow users to avoid reliance on a single cloud provider like AWS, fostering a more competitive ecosystem with flexible pricing and service options.

Strategic Considerations for Enterprises

Enterprises evaluating the leap from centralized clouds to decentralized GPU networks should weigh several factors:

- Performance Consistency: Modern DePIN compute networks have improved reliability through redundancy and dynamic workload routing.

- Security and Compliance: Decentralized solutions are rapidly evolving to meet enterprise-grade security standards, with encrypted data transfer and verifiable compute proofs becoming standard features.

- Ecosystem Integration: Many platforms now offer plug-and-play compatibility with existing ML frameworks (e.g., PyTorch, TensorFlow) and orchestration tools.
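One rough way to weigh performance consistency against price is to compute the cost per useful GPU-hour when some fraction of paid hours has to be rerun. The sketch below is a simplified model under stated assumptions: the rework fractions are hypothetical placeholders rather than measured reliability data for any network, the baseline is assumed to need no reruns, and the function names are illustrative.

```python
AWS_V100_HOURLY_USD = 3.06        # baseline quoted in this article
DECENTRALIZED_HOURLY_USD = 0.612  # 80% below the baseline, the best case cited


def effective_hourly_cost(list_price: float, rework_fraction: float) -> float:
    """Cost per useful GPU-hour when a fraction of paid hours must be rerun."""
    if not 0.0 <= rework_fraction < 1.0:
        raise ValueError("rework_fraction must be in [0, 1)")
    # Only (1 - rework_fraction) of each paid hour yields usable results.
    return list_price / (1.0 - rework_fraction)


def break_even_rework_fraction(baseline_price: float, alternative_price: float) -> float:
    """Rework fraction at which the alternative's effective cost equals the baseline.

    Assumes the baseline itself needs no reruns (a simplification).
    """
    if baseline_price <= 0 or alternative_price < 0:
        raise ValueError("prices must be positive")
    return max(0.0, 1.0 - alternative_price / baseline_price)


if __name__ == "__main__":
    for rework in (0.0, 0.10, 0.25):  # hypothetical rerun rates
        cost = effective_hourly_cost(DECENTRALIZED_HOURLY_USD, rework)
        print(f"rework {rework:.0%}: ${cost:.3f} per useful hour")
    limit = break_even_rework_fraction(AWS_V100_HOURLY_USD, DECENTRALIZED_HOURLY_USD)
    print(f"break-even rework fraction vs. baseline: {limit:.0%}")
```

Under this model, a large list-price discount leaves considerable headroom for reruns before the savings disappear, but the model ignores latency, data-transfer costs, and compliance overhead, which each team would need to assess separately.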

The economics are clear: with decentralized GPU networks offering up to 80% lower inference costs versus AWS’s current $3.06 hourly rate for V100s, the incentive to migrate is stronger than ever, especially as demand for scalable AI infrastructure accelerates into 2025.

Looking Ahead: The Future of Crypto-Powered AI Infrastructure

The convergence of blockchain incentives, global hardware liquidity, and open-source innovation signals a new era for AI infrastructure. As more enterprises make the switch, and as token utility in projects such as DecentralGPT matures, the market share of traditional hyperscalers will continue to erode in favor of agile, community-driven networks.

This paradigm shift won’t just lower barriers for today’s startups; it will enable entirely new classes of applications previously considered too expensive or logistically complex under old pricing regimes. For investors, builders, and researchers alike, the message is clear: The future of scalable AI belongs to decentralized GPU networks, and those who embrace them early will capture outsized value as adoption accelerates.