Imagine a world where anyone with a spare GPU can contribute to the next wave of AI innovation – and get paid for it. That’s not a sci-fi dream; it’s the rapidly evolving reality of decentralized GPU networks. As AI workloads explode and demand for compute skyrockets, centralized giants like AWS and Google Cloud are straining to keep pace. Enter DePIN (decentralized physical infrastructure network) compute: a global mesh of distributed AI hardware that’s rewriting the rules for scalable, transparent, and verifiable AI inference.

Why Decentralized GPU Networks Are Changing the Game

The traditional model for running powerful AI models relies on massive, centralized data centers. While these have driven much of today’s progress, they’re also expensive, opaque, and increasingly bottlenecked by supply chain issues (just ask anyone who tried to rent an H100 this year). Decentralized GPU networks flip this script by tapping into underutilized GPUs worldwide – from gaming rigs in Seoul to research clusters in Berlin.

This is more than just a cost play. By distributing workloads across a permissionless compute layer, these networks unlock:

- Scalability: Spin up thousands of GPUs on demand without vendor lock-in or waitlists.

- Transparency: On-chain proof of compute lets anyone verify which model ran where – no more black boxes.

- Resilience: No single point of failure means better uptime and censorship resistance.

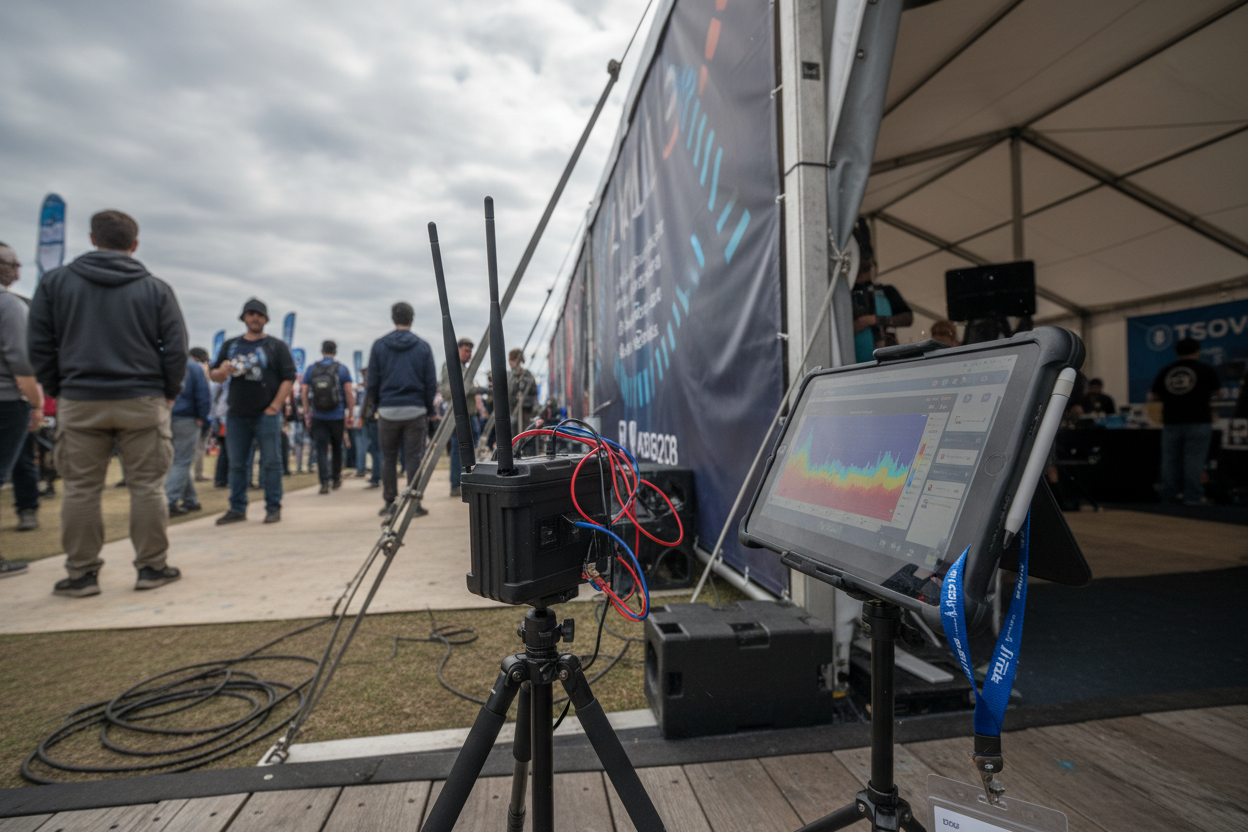

The recent launch of OGPU Network’s task-based billing platform is just one example. By connecting providers, enterprises, and users in real time, it’s turning idle silicon into productive capital. And with NVIDIA Corp (NVDA) priced at $180.28 at the time of writing, a sign of how highly the market values GPU compute, the economic incentive to put every available GPU to work is hard to ignore.

Pillars of Verifiable AI Inference at Scale

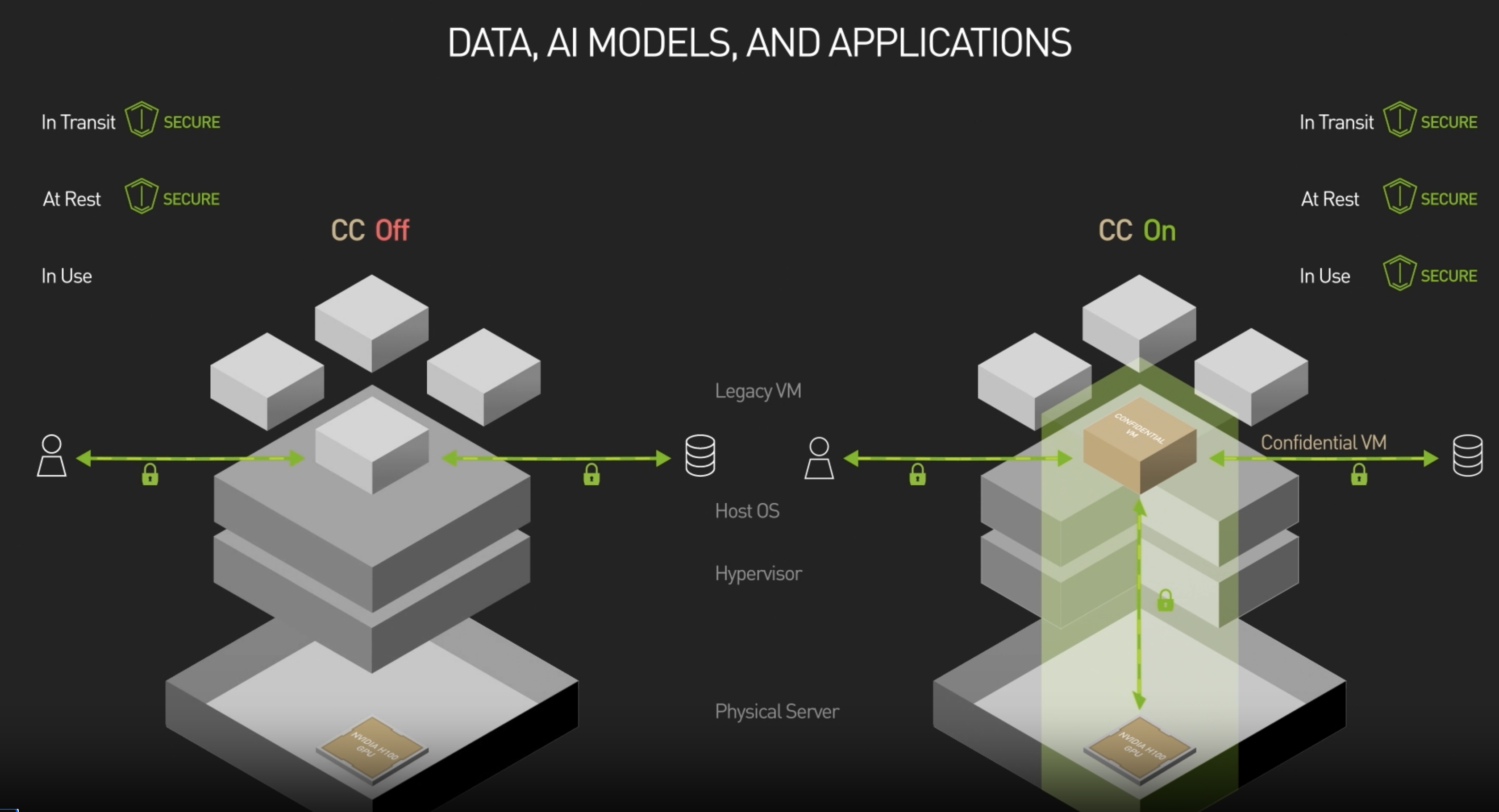

The real magic happens when decentralization meets verifiability. In August 2025, GenLayer made headlines by partnering with LibertAI and Aleph Cloud to create an inference layer that runs inside AMD SEV-SNP secured TEEs (Trusted Execution Environments). This means sensitive data stays private while outputs are validated on-chain – so developers can prove their models did what they claim, without exposing proprietary code or user data.

This isn’t just theoretical. Platforms like Inferium have chosen decentralized clouds like Aethir for exactly this reason: scalable inference that can be audited by anyone. The VeriLLM framework goes even further with its lightweight protocol for verifying LLM outputs at negligible cost – a crucial breakthrough as large language models become core infrastructure across industries.
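To get a feel for why verification can be cheap, consider a generic spot-checking scheme. This is an illustration only, not VeriLLM's actual protocol, and the function names are hypothetical. A verifier re-runs a small random sample of tasks and compares the results with what the provider claimed. If a provider falsifies a fraction f of its outputs, k independent checks catch at least one bad output with probability 1 - (1 - f)^k.

```python
import random


def detection_probability(cheat_fraction: float, checks: int) -> float:
    """Chance that at least one of `checks` independent random checks
    lands on a falsified output, given the fraction that was falsified."""
    return 1.0 - (1.0 - cheat_fraction) ** checks


def spot_check(claimed, recompute, checks, rng):
    """Re-run `checks` randomly chosen tasks and compare with the claims.

    `claimed` is a list of outputs reported by the provider, and
    `recompute(i)` is a trusted re-execution of task i (both hypothetical).
    Returns False as soon as one mismatch is found.
    """
    for _ in range(checks):
        i = rng.randrange(len(claimed))
        if claimed[i] != recompute(i):
            return False
    return True


if __name__ == "__main__":
    rng = random.Random(0)
    honest = [i * 2 for i in range(1000)]
    print(spot_check(honest, lambda i: i * 2, checks=50, rng=rng))
    print(detection_probability(0.10, 50))
```

In this toy model, with 10% of outputs falsified, 50 checks catch the provider more than 99% of the time, no matter how many tasks were run in total. That is the intuition behind "verification at a fraction of the cost of the work itself".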

Top Decentralized GPU Networks for Verifiable AI Inference (2025)

- GenLayer – In August 2025, GenLayer partnered with LibertAI and Aleph Cloud to deliver confidential, verifiable AI inference. Their use of AMD SEV-SNP secured TEEs and blockchain validation ensures privacy, integrity, and auditability for AI workloads.

- Inferium x Aethir – Inferium leverages Aethir’s decentralized GPU cloud to power its verifiable AI inference platform. This partnership brings scalable, transparent, and auditable AI services to developers and enterprises worldwide.

- VeriLLM Framework – Debuted in September 2025, VeriLLM is a lightweight protocol for publicly verifiable decentralized inference of large language models (LLMs), offering robust security and minimal verification costs.

- Phala Network – In September 2024, Phala Network launched the first GPU-TEE benchmark, advancing secure, privacy-preserving AI computation using Trusted Execution Environments for decentralized inference.

- Hyperbolic – Hyperbolic delivers cost-effective, high-performance AI inference by tapping into a global pool of underutilized GPUs, making enterprise-grade AI accessible to more developers and organizations.

- Nosana – Nosana’s decentralized GPU marketplace connects developers to global GPU resources for scalable, affordable AI model training and inference, democratizing access to compute power.

If you want to dig deeper into how these mechanisms build trust and transparency into distributed compute markets, check out our deep dive on how verifiable inference builds trust in decentralized AI compute networks.

The Economic Edge: Lower Costs and Wider Access

No discussion about decentralized GPU networks is complete without talking economics. Hyperbolic’s approach shows how leveraging global idle GPUs slashes costs while maintaining enterprise-grade performance – making high-powered AI accessible even to smaller teams. Nosana’s open marketplace takes this further by letting developers tap into affordable resources worldwide for both training and inference.

This isn’t just about saving money; it’s about democratizing access to cutting-edge AI tools. With solutions like Phala Network benchmarking TEE-enabled GPUs for privacy-preserving applications, we’re seeing the dawn of an era where robust security doesn’t come at the expense of speed or affordability.

As the decentralized GPU revolution accelerates, the benefits are rippling far beyond just cost savings. We’re seeing a virtuous cycle: more contributors mean more available compute, which means developers can scale their projects faster and with greater transparency. The days of waiting weeks for a GPU allocation or being locked into proprietary clouds are quickly fading.

One of the most exciting aspects is on-chain proof of compute. By recording every inference task on a public ledger, projects like GenLayer and VeriLLM let users independently verify that models were run as promised, with no need to take a provider’s word for it. This shift is turning AI from an opaque service into an auditable utility, setting new standards for accountability in everything from fintech to healthcare.
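As a rough mental model of what recording inference tasks on a ledger involves, here's a minimal sketch. It is not GenLayer's or VeriLLM's actual design: `InferenceLedger` and its field names are hypothetical, and an in-memory list stands in for the blockchain. Each entry stores hashes of the prompt and output and links to the previous entry, so any later tampering breaks the chain.

```python
import hashlib
import json
from dataclasses import dataclass, field

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def hash_body(body: dict) -> str:
    # Sorted keys give a stable serialization, so the hash is reproducible.
    return sha256_hex(json.dumps(body, sort_keys=True).encode())


@dataclass
class InferenceLedger:
    """Toy append-only log standing in for a public ledger (hypothetical)."""
    entries: list = field(default_factory=list)

    def record(self, model_id: str, prompt: str, output: str) -> dict:
        body = {
            "model_id": model_id,
            "input_hash": sha256_hex(prompt.encode()),
            "output_hash": sha256_hex(output.encode()),
            "prev_hash": self.entries[-1]["entry_hash"] if self.entries else GENESIS,
        }
        entry = {**body, "entry_hash": hash_body(body)}
        self.entries.append(entry)
        return entry

    def verify_chain(self) -> bool:
        """Check every entry's hash and its link to the previous entry."""
        prev_hash = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "entry_hash"}
            if body["prev_hash"] != prev_hash or hash_body(body) != entry["entry_hash"]:
                return False
            prev_hash = entry["entry_hash"]
        return True

    def matches(self, index: int, prompt: str, output: str) -> bool:
        """Check that a prompt/output pair is the one recorded at `index`."""
        entry = self.entries[index]
        return (entry["input_hash"] == sha256_hex(prompt.encode())
                and entry["output_hash"] == sha256_hex(output.encode()))
```

Keep in mind that a log like this only shows that a record exists and hasn't been altered. Proving the computation itself was done correctly takes additional machinery, such as the TEEs or output verification discussed above.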

Real-World Impact: From Research Labs to On-Chain Applications

The implications go way beyond tech circles. For AI startups, decentralized GPU networks mean launching ambitious products without multimillion-dollar cloud budgets. For enterprises, it means tapping into global pools of compute with built-in redundancy and compliance features. And for researchers, it’s a game-changer: access to scalable resources without bureaucratic red tape or vendor lock-in.

Perhaps most importantly, these networks are laying the groundwork for verifiable AI inference in on-chain applications. Imagine decentralized finance protocols using AI risk models whose outputs can be publicly audited, or healthcare apps running privacy-preserving diagnostics where patients control their data end-to-end. The convergence of DePIN compute infrastructure and blockchain validation is making these visions real.

Real-World Use Cases Powered by Decentralized GPU Networks

- GenLayer x LibertAI & Aleph Cloud: Confidential, verifiable AI inference for enterprises. GenLayer’s partnership with LibertAI and Aleph Cloud delivers privacy-first AI computations secured by AMD SEV-SNP TEEs and blockchain-based validation.

- Inferium & Aethir: Scalable, auditable AI inference in the cloud. Inferium leverages Aethir’s decentralized GPU platform to offer transparent, trustworthy AI services for businesses and developers.

- VeriLLM Protocol: Publicly verifiable LLM inference. VeriLLM enables secure, lightweight, and cost-effective verification of large language model outputs, boosting trust in decentralized AI.

- Phala Network: Privacy-preserving AI with GPU-TEE benchmarks. Phala pioneers secure AI by benchmarking TEE-enabled GPUs, ensuring confidential processing for sensitive data.

- Hyperbolic: Affordable, high-performance AI for all. Hyperbolic taps into global idle GPUs to deliver enterprise-grade inference at a fraction of traditional costs, democratizing AI access.

- Nosana: Global GPU marketplace for AI developers. Nosana’s decentralized marketplace connects developers to scalable, affordable GPU resources for both AI training and inference.

The market momentum is undeniable. With NVIDIA Corp (NVDA) holding steady at $180.28, the market is clearly pricing in sustained demand for GPU compute, and even small-scale GPU owners have strong incentives to join a network and earn yield on hardware that would otherwise gather dust. It’s no longer just about “mining” coins; it’s about fueling the next generation of AI breakthroughs.

What Comes Next: Scaling Trustless AI for Everyone

Looking ahead, expect even more innovation at the intersection of distributed AI hardware and verifiable inference protocols. As protocols like VeriLLM mature and more projects integrate TEE-enabled GPUs (thanks to pioneers like Phala Network), we’ll see trustless coordination become table stakes for any serious AI deployment.

For developers and enterprises ready to dive in, now’s the time to explore how decentralized GPU marketplaces can supercharge your next project, whether you’re training massive LLMs or deploying privacy-first edge applications. If you want to see how these networks compare on price and performance versus traditional clouds, check out our breakdown on how decentralized GPU networks slash AI inference costs by 80% compared to AWS.

The bottom line? Decentralized GPU networks aren’t just a technical curiosity; they’re quickly becoming essential infrastructure for scalable, transparent, and verifiable AI solutions worldwide. Whether you’re an investor eyeing DePIN tokens or a builder looking for your next competitive edge, this movement is one you can’t afford to ignore.