Idle GPUs sitting in your rig represent untapped potential in the exploding DePIN landscape. Gensyn flips that script, letting you monetize spare compute power for AI training tasks while earning tokens in a network that’s already reshaping machine intelligence. With the Gensyn Public Testnet live since March 2025, early node operators are positioning for substantial Gensyn AI node rewards in 2025 as adoption surges.

This isn’t hype; it’s a calculated play on demand for decentralized AI GPU compute. AI models demand massive parallel processing, and centralized clouds charge premiums. Gensyn’s Ethereum rollup testnet distributes that load, verifying computations on-chain for trustless payouts. Node runners contribute to real ML workloads, from model training to inference, all powered by your hardware.

I’ve tracked DePIN trends for years, and Gensyn’s persistent identity layer sets it apart. Unlike fleeting testnets, it builds lasting infrastructure for human-AI collaboration. Expect network effects to amplify DePIN rewards for idle GPUs as enterprises tap in.

Hardware Blueprint for Gensyn Node Success

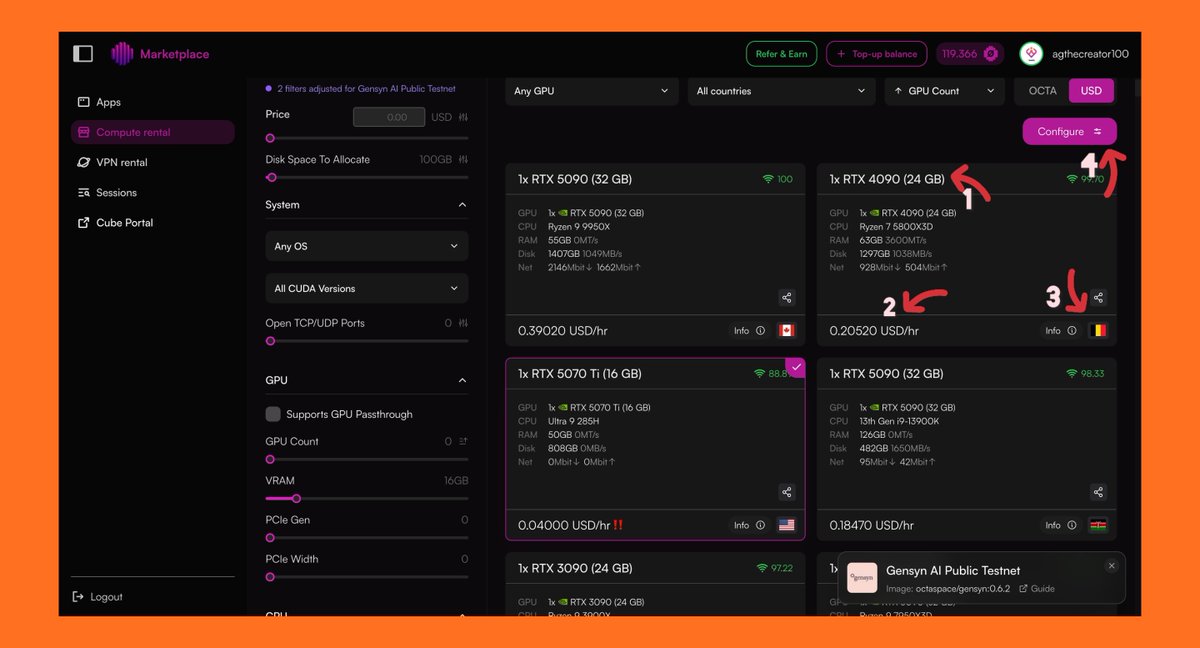

Skimp on specs, and your node idles while others rack up participations. Gensyn demands solid foundations: an arm64 or amd64 CPU, 16GB RAM minimum (push for 20GB+), and NVIDIA GPUs like the RTX 3090, RTX 4090, A100, or H100. Python 3.10+, ample SSD storage, and 100Mbps+ internet seal the deal. 1Gbps uplinks shine for uninterrupted tasks.
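Before committing a machine, it can help to check it against the requirements above. The following is a minimal sketch, not an official Gensyn tool: the function names are hypothetical, the thresholds are the ones quoted in this guide, and the 10% RAM tolerance is an assumption added because operating systems usually report slightly less than the nominal memory size.

```python
import os
import platform
import shutil
import sys

# Thresholds quoted in this guide; adjust if the official docs differ.
MIN_RAM_GB = 16
MIN_PYTHON = (3, 10)
SUPPORTED_ARCHES = {"arm64", "aarch64", "amd64", "x86_64"}
RAM_TOLERANCE = 0.9  # assumption: a "16GB" machine reports a bit less


def total_ram_gb():
    """Return physical RAM in GiB, or None where sysconf is unavailable."""
    try:
        pages = os.sysconf("SC_PHYS_PAGES")
        page_size = os.sysconf("SC_PAGE_SIZE")
    except (AttributeError, ValueError, OSError):
        return None
    return pages * page_size / 1024**3


def preflight(arch, python_version, ram_gb, has_nvidia_smi):
    """Return a list of human-readable problems; an empty list means OK."""
    problems = []
    if arch.lower() not in SUPPORTED_ARCHES:
        problems.append(f"unsupported CPU architecture: {arch}")
    if tuple(python_version[:2]) < MIN_PYTHON:
        problems.append("Python 3.10 or newer is required")
    if ram_gb is None:
        problems.append("could not determine RAM size")
    elif ram_gb < MIN_RAM_GB * RAM_TOLERANCE:
        problems.append(f"only {ram_gb:.1f} GB RAM; {MIN_RAM_GB} GB is the minimum")
    if not has_nvidia_smi:
        problems.append("nvidia-smi not found; the node would run CPU-only")
    return problems


if __name__ == "__main__":
    issues = preflight(
        platform.machine(),
        sys.version_info,
        total_ram_gb(),
        shutil.which("nvidia-smi") is not None,
    )
    print("\n".join(issues) if issues else "All preflight checks passed")
```

The check only confirms that `nvidia-smi` is on the PATH; it does not verify the GPU model, disk space, or bandwidth, which still need a manual look.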

Cloud VPS? Viable for testing, but owned hardware maximizes margins. CPU-only mode slashes costs below $250/month, per community guides, though GPU acceleration unlocks premium jobs. Cross-reference providers via official docs for vetted options.
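To put the margin comparison in numbers, here is a rough sketch of the monthly electricity bill for owned hardware, using the $0.10/kWh rate this guide suggests for net math. The 450 W draw, the 30-day month, and the `reward_value` input are illustrative assumptions; testnet points have no fixed dollar value, so treat the margin function as a placeholder for whatever valuation you choose.

```python
HOURS_PER_MONTH = 24 * 30  # assumption: 30-day month


def monthly_power_cost(watts, price_per_kwh=0.10, uptime=1.0):
    """Electricity cost in dollars for a rig drawing `watts` while online."""
    kwh = watts / 1000 * HOURS_PER_MONTH * uptime
    return kwh * price_per_kwh


def monthly_margin(reward_value, watts, price_per_kwh=0.10, uptime=1.0):
    """Hypothetical reward value minus electricity cost, in dollars."""
    return reward_value - monthly_power_cost(watts, price_per_kwh, uptime)


if __name__ == "__main__":
    # 450 W is an assumed draw for a single high-end GPU rig under load.
    cost = monthly_power_cost(450)
    print(f"Estimated electricity: ${cost:.2f}/month")
```

At 450 W running around the clock, that works out to 324 kWh, or about $32.40 a month, which is the figure to set against the sub-$250/month VPS cost quoted above.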

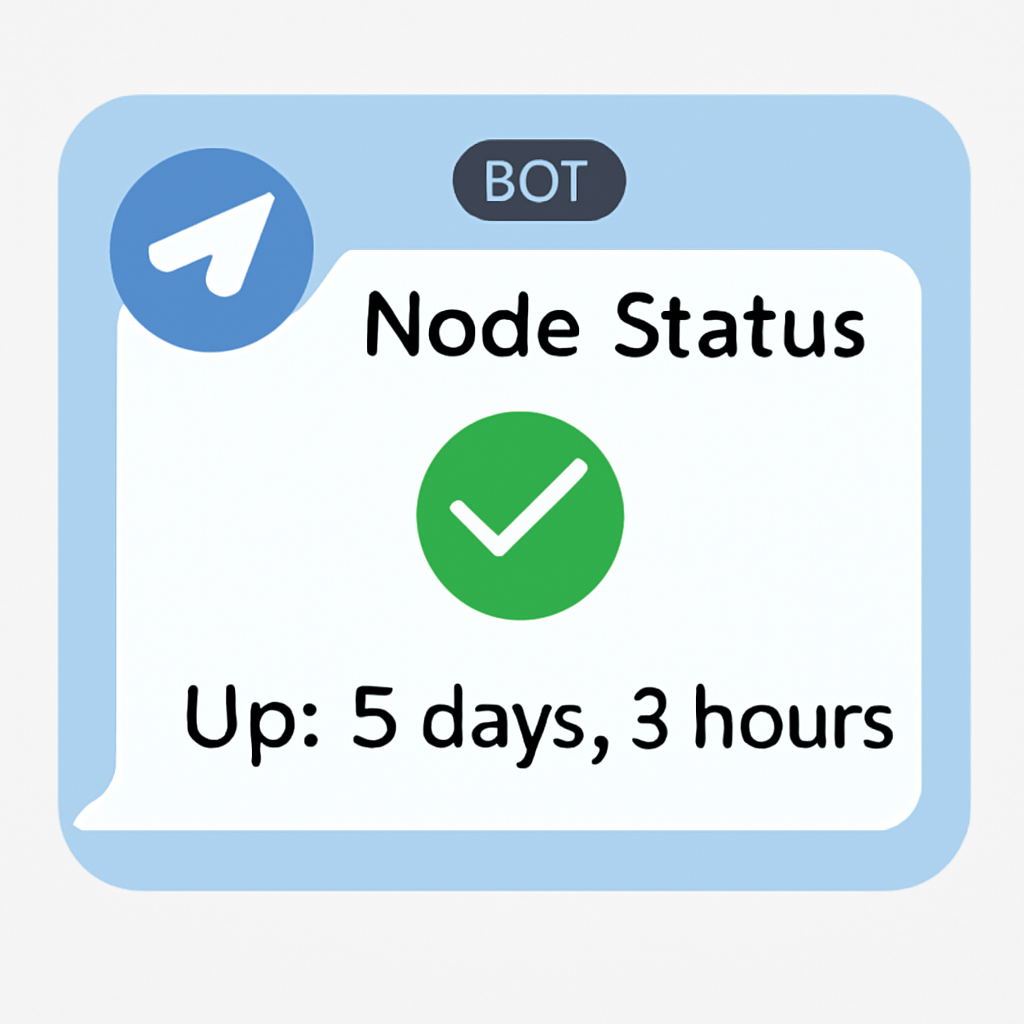

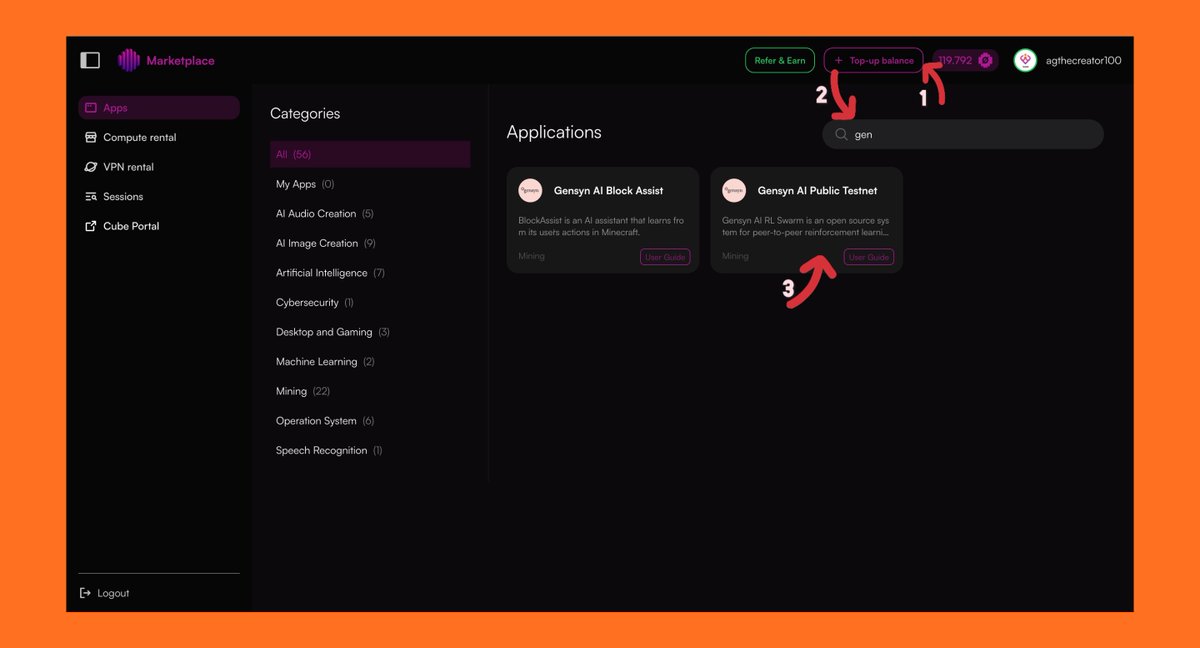

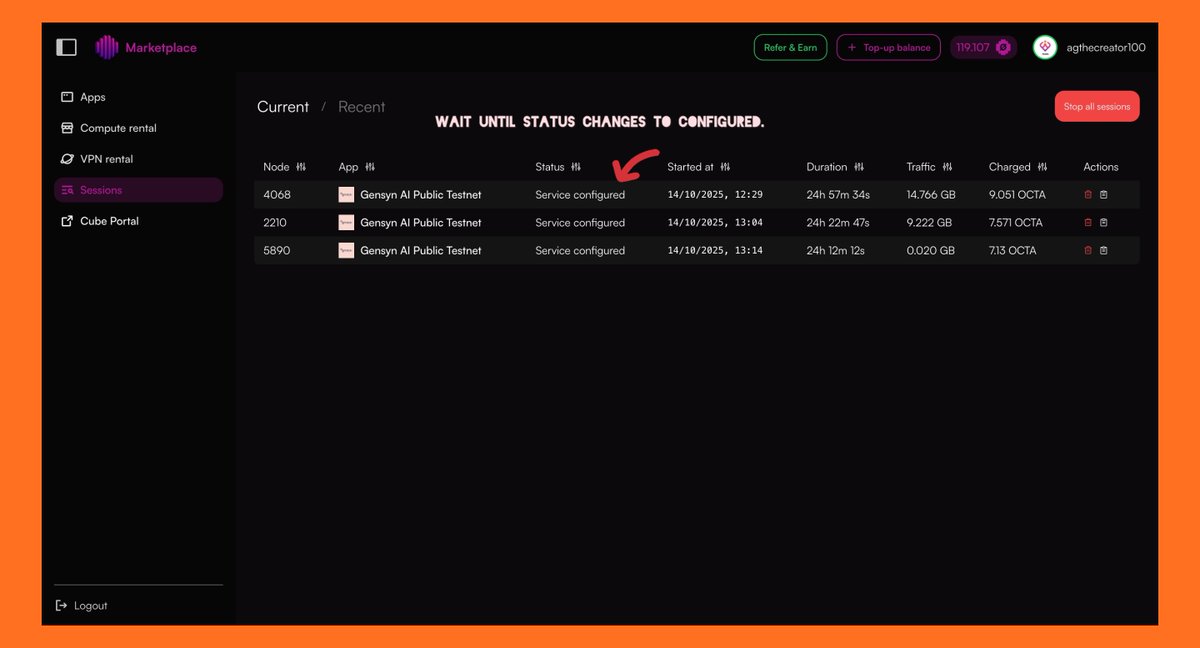

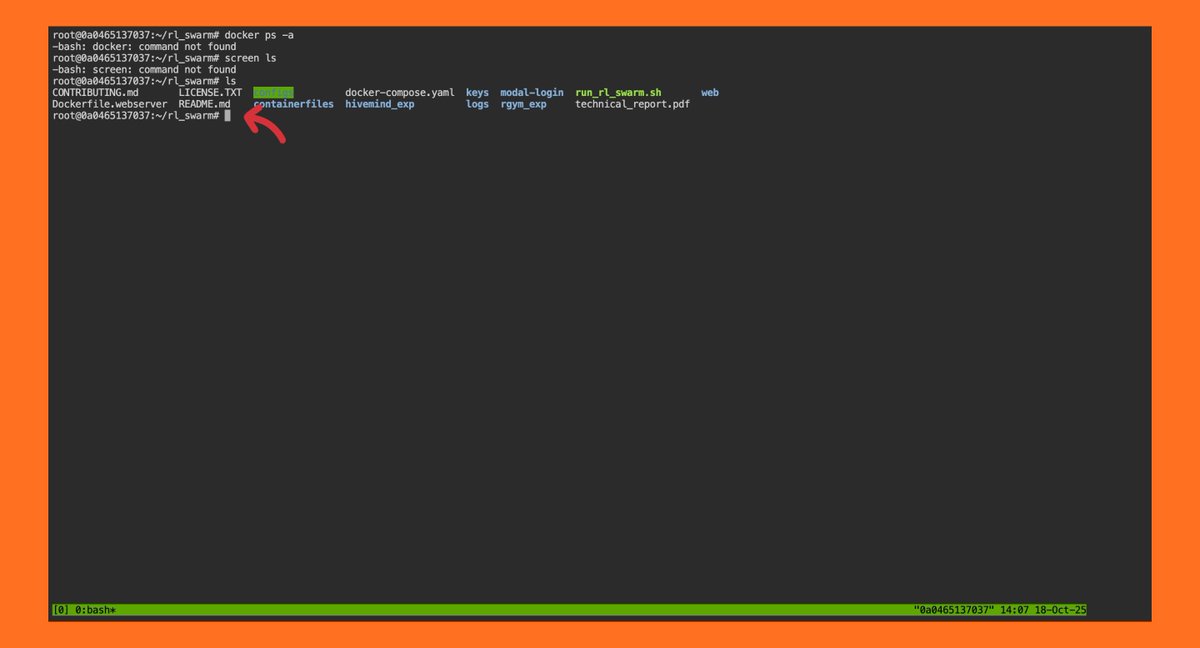

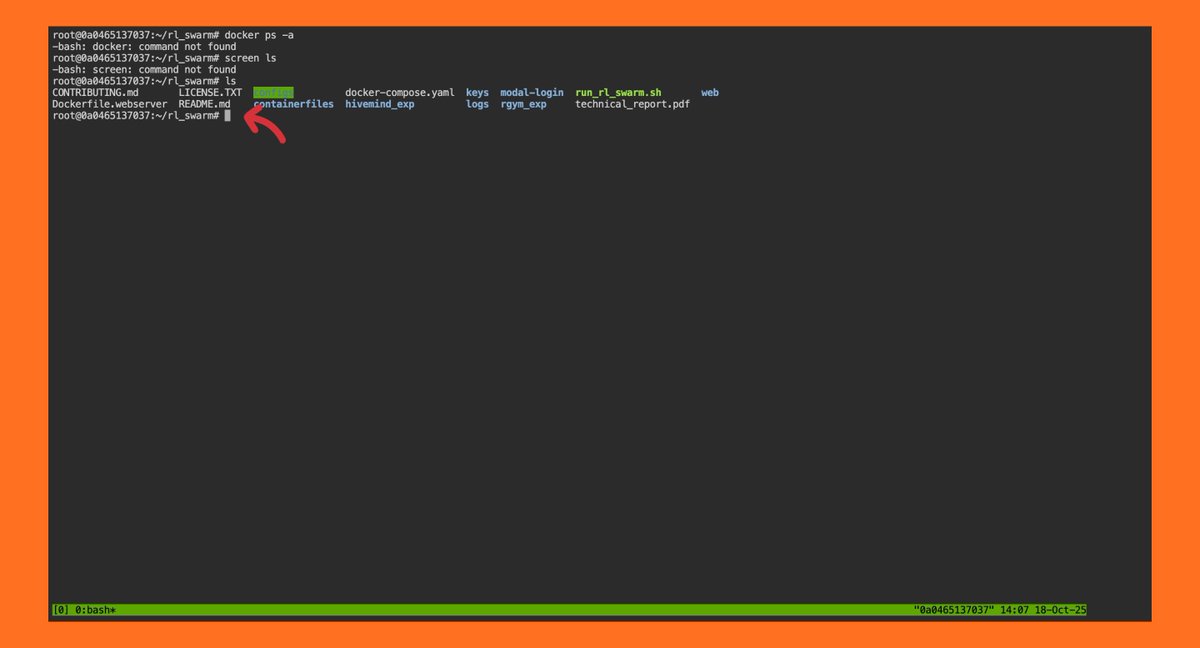

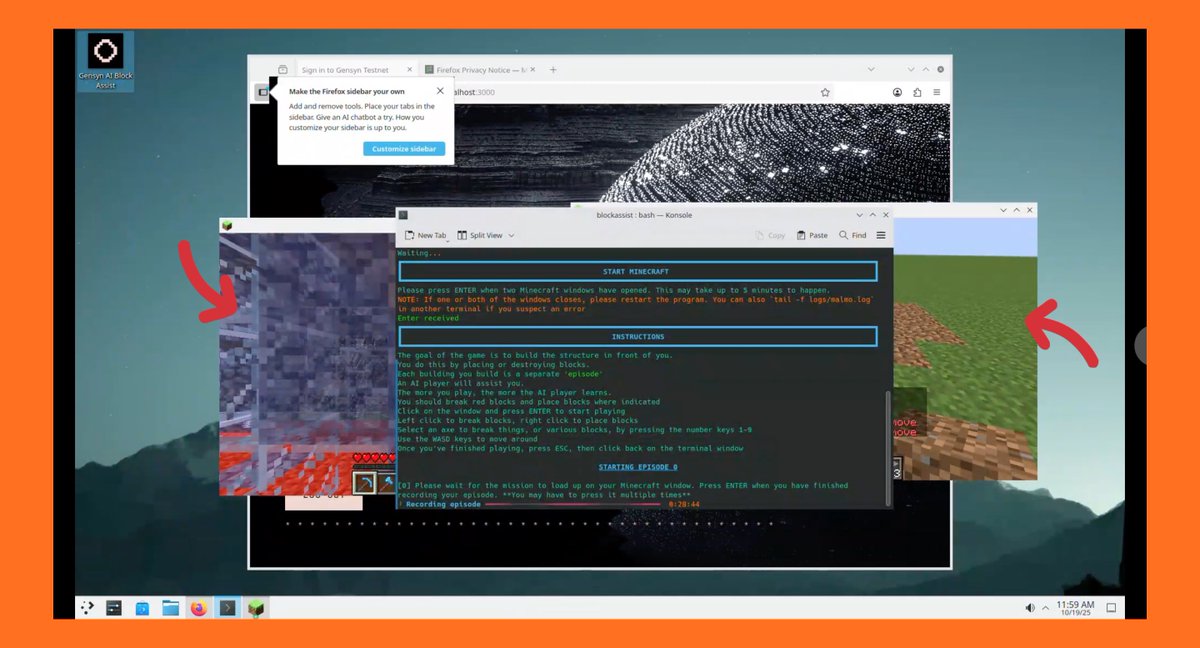

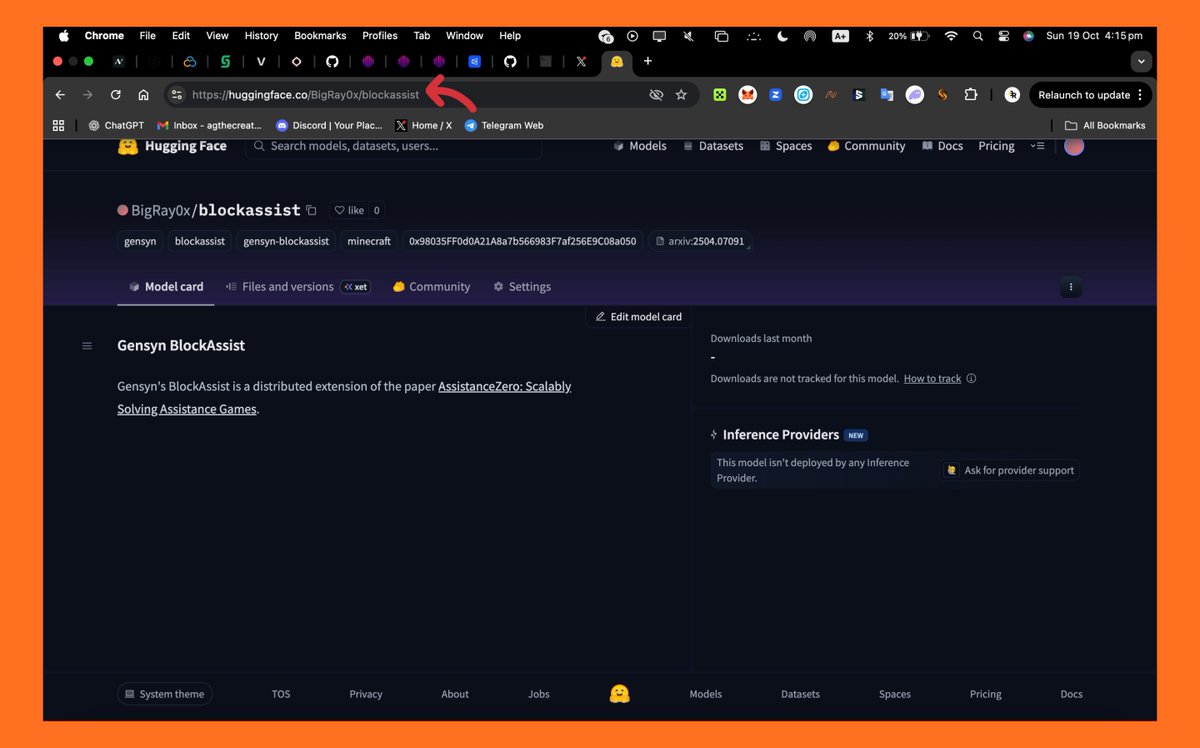

Zero-Friction Gensyn Node Deployment

Setup boils down to one script, democratizing Gensyn node setup. Fire up a terminal on an Ubuntu-compatible system. Grab the installer, grant it execute permissions, install screen for detachment, spawn a session, and execute the script. On-screen prompts handle Hugging Face login; NGROK bridges any tunnel snags. Minutes later, your node ID and name pop up: jot them down religiously.

Pro tip: Back up /root/rl-swarm/swarm.pem immediately. This PEM file is your node’s digital passport; lose it, and you’re rebuilding from scratch. I’ve seen operators forfeit weeks of uptime over this oversight.

Hyperbolic Dashboard Integration and GPU Selection

Layer in the Hyperbolic Dashboard for full control. Register, hit settings, generate a public SSH key, and paste it. Select your GPU tier and match it to your hardware for optimal job matching. This step syncs your node with swarm orchestration, queuing ML jobs based on availability.

Gensyn’s testnet emphasizes local model training, so expect diverse workloads. CPU fallback ensures baseline earnings, but NVIDIA firepower commands the top GPU-sharing tiers.

Monitor via the Telegram bot: forward your node ID for instant status and accrual snapshots. Forecast rewards at GSwarm’s estimator; input uptime and specs for daily projections. High-availability nodes crush it, blending reliability with raw power.

Operators who nail uptime and task completion rates pull ahead in the race for Gensyn AI node rewards in 2025. Downtime kills momentum; aim for 95%+ availability to hit peak participations.

Fine-tune your setup beyond basics. Tweak GPU utilization via Hyperbolic settings, allocating cores precisely to avoid thermal throttling. I’ve run nodes across configs, and undervolting RTX series GPUs extends sessions without sacrificing throughput. Test CPU-only for low-stakes entry, then scale to a full GPU swarm as tasks queue up.

Network bottlenecks crush earnings. Prioritize 1Gbps symmetric connections; jitter under 10ms keeps verifications smooth. Use tools like iperf3 to benchmark before launch. On VPS, cherry-pick providers with NVLink support for multi-GPU beasts.

Reward Mechanics and Earnings Breakdown

Gensyn payouts hinge on verified computations, not just uptime. Each ML job (think fine-tuning LLMs or diffusion models) scores based on accuracy and speed. Top performers snag premium allocations, with testnet points converting to tokens post-TGE. Community estimators peg RTX 4090 rigs at 10-20x CPU yields, but factor electricity at $0.10/kWh for net math.

Real-world alpha: Early testnet runners report 50-200 points daily on H100s, scaling linearly with swarm size. Stake your node ID in the dashboard for bonus multipliers. Track accrual via /check commands; laggy responses signal sync issues, so restart swarm services pronto.

Gensyn Node Reward Tiers

| Hardware | Est. Daily Tasks | Approx. Rewards (based on uptime) |
|---|---|---|
| CPU-only | 5-10 | Low 💰 |
| RTX 3090 | 20-40 | Medium 💰💰 |
| A100 | 50+ | High 💰💰💰 |

Troubleshooting: Bulletproof Your Node

NGROK tunnels flake under load? Switch to Cloudflare Zero Trust for persistent SSH. Hugging Face auth fails? Clear tokens and relogin via incognito. PEM corruption? Restore from backup; no excuses. GPU detection errors scream driver mismatches: stick to CUDA 12.x. Overheating? Implement fan curves and ambient monitoring scripts. I’ve scripted alerts to Slack for temp spikes over 80C, saving rigs from meltdowns.

Dive into Discord’s #node-support for peer fixes; the community’s gold for edge cases.

Security first: Expose only necessary ports and restrict SSH to key-based authentication. Gensyn’s rollup verifies computations on-chain, but a vulnerable endpoint still invites exploits. Run fail2ban and update weekly.

Scale smart. Multi-node swarms via Ansible amplify output, but sync PEMs across instances. In DePIN GPU sharing for AI, Gensyn’s edge lies in verifiable ML, outpacing rivals on proof latency.

Positioning now means outsized gains as mainnet beckons. Idle GPUs fuel the AI gold rush; run your Gensyn node, stack points, and ride the decentralized compute wave.
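For the overheating case, where this guide mentions alerts on temperature spikes over 80C, a monitoring script could look roughly like the sketch below. It is not the author's script: the function names are hypothetical, it relies on the standard `nvidia-smi --query-gpu=temperature.gpu` query, and it only prints warnings, leaving delivery to Slack or any other channel as a separate step.

```python
import subprocess

TEMP_LIMIT_C = 80  # threshold mentioned in this guide


def parse_temps(csv_output):
    """Parse nvidia-smi CSV output: one integer temperature per line."""
    return [int(line) for line in csv_output.splitlines() if line.strip()]


def hot_gpus(temps, limit=TEMP_LIMIT_C):
    """Return (gpu_index, temperature) pairs for GPUs above the limit."""
    return [(i, t) for i, t in enumerate(temps) if t > limit]


def read_gpu_temps():
    """Query the current temperature of every visible NVIDIA GPU."""
    result = subprocess.run(
        [
            "nvidia-smi",
            "--query-gpu=temperature.gpu",
            "--format=csv,noheader,nounits",
        ],
        capture_output=True,
        text=True,
        check=True,
    )
    return parse_temps(result.stdout)


if __name__ == "__main__":
    try:
        temps = read_gpu_temps()
    except (FileNotFoundError, subprocess.CalledProcessError) as exc:
        print(f"could not query nvidia-smi: {exc}")
    else:
        for index, temp in hot_gpus(temps):
            print(f"GPU {index} at {temp} C exceeds {TEMP_LIMIT_C} C")
```

Run it from cron or a systemd timer every minute or so; keeping the parsing and threshold logic separate from the `nvidia-smi` call makes it easy to test on a machine without a GPU.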