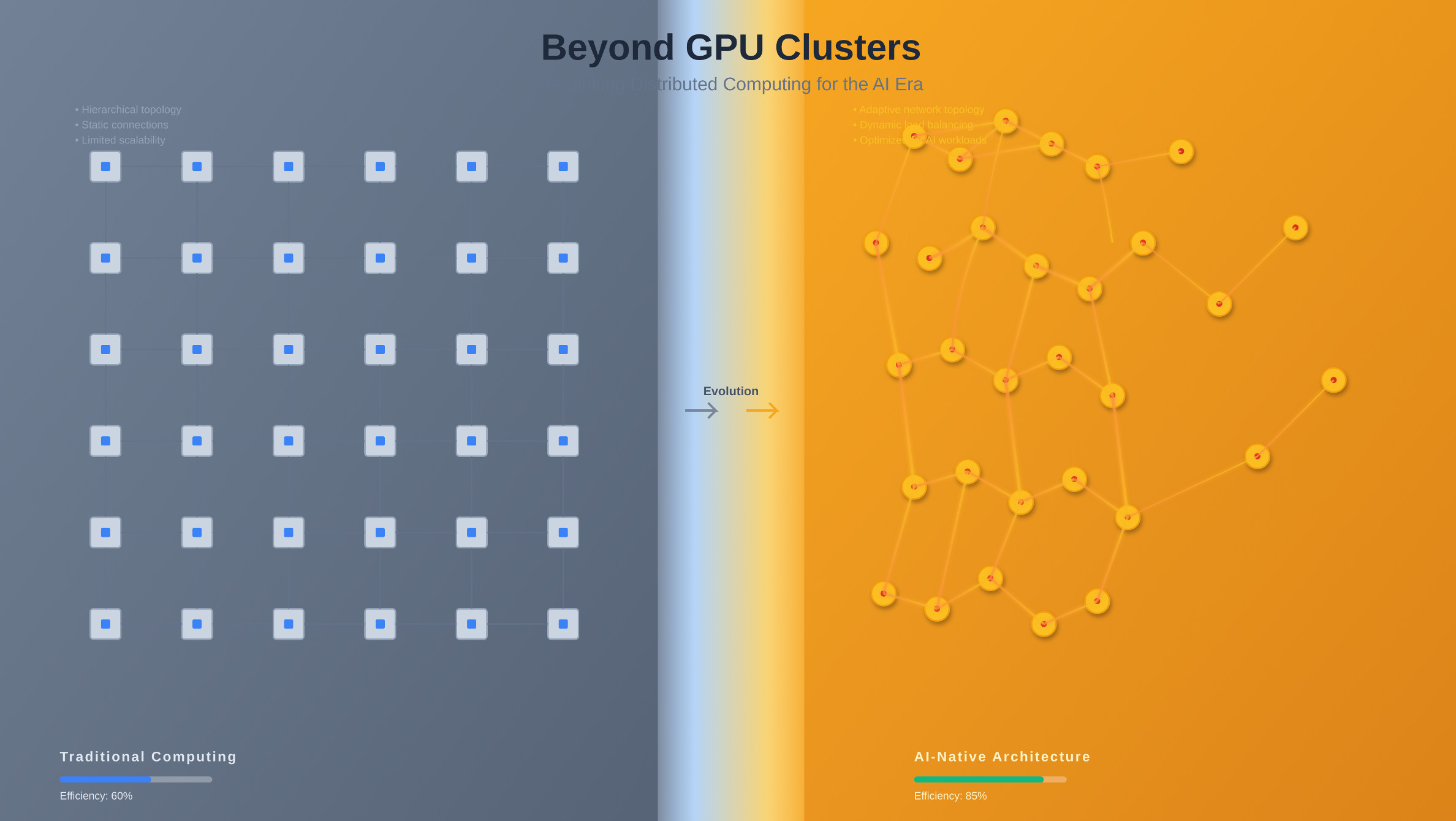

Decentralized AI compute networks are rapidly reshaping the landscape of on-chain model training, offering a compelling alternative to the limitations of centralized AI infrastructure. By distributing computational workloads across a global mesh of independent nodes, these networks aim to make AI development more scalable, secure, and accessible. The convergence of blockchain and AI compute, in the form of DePIN (Decentralized Physical Infrastructure Networks), is not only a technical evolution but also a philosophical shift toward democratized, transparent, and privacy-preserving machine learning.

Breaking Through Centralized Bottlenecks

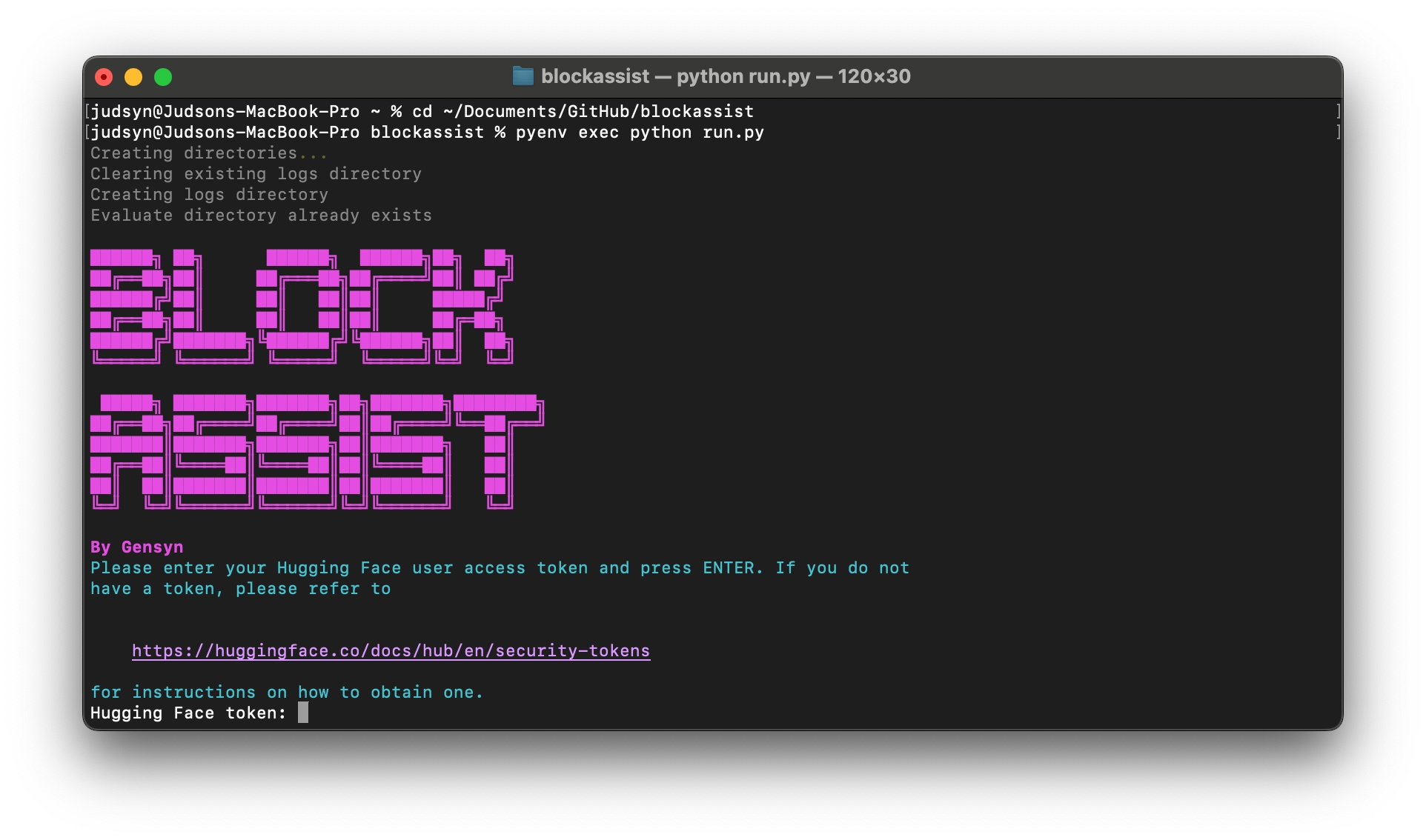

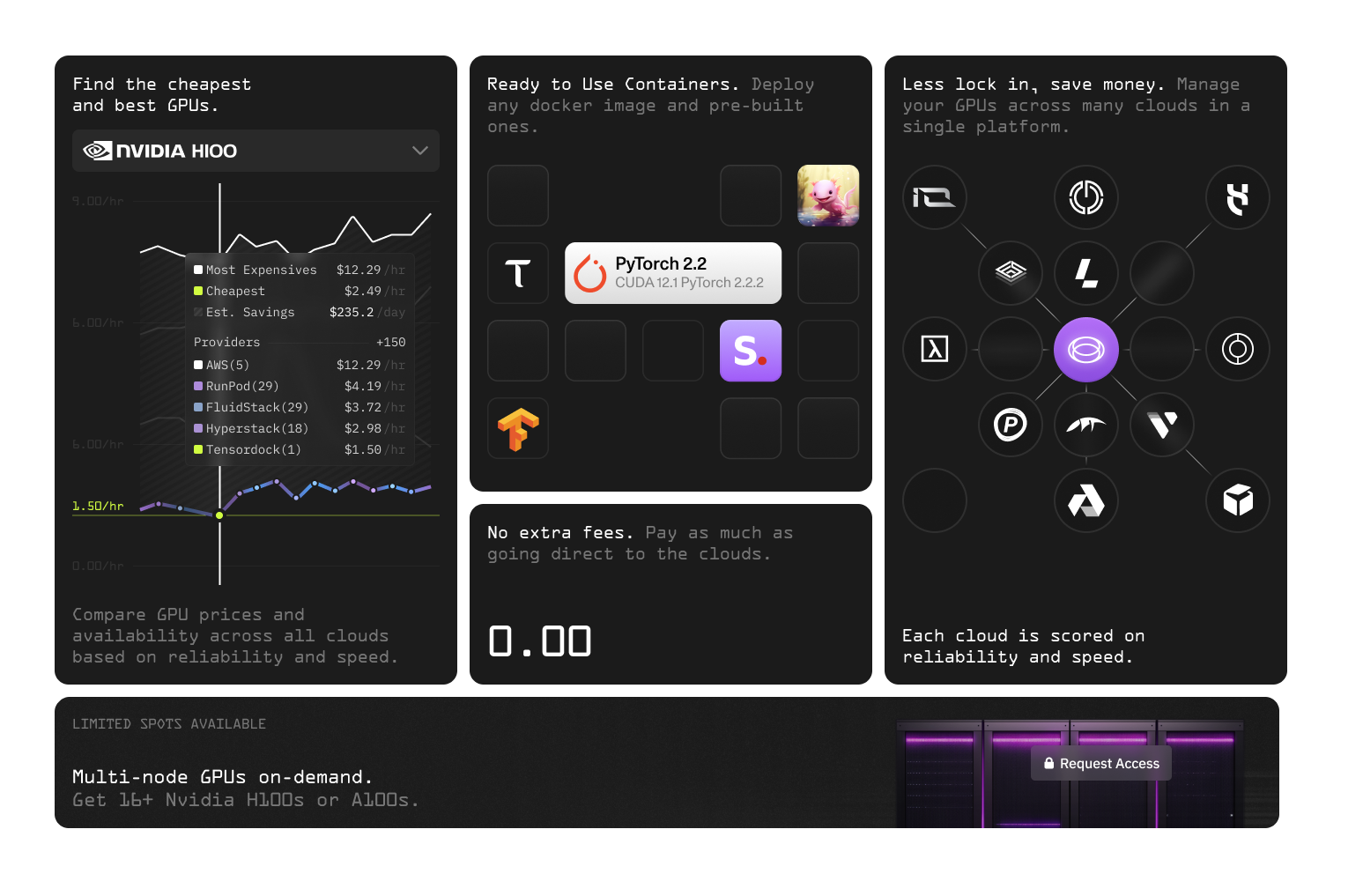

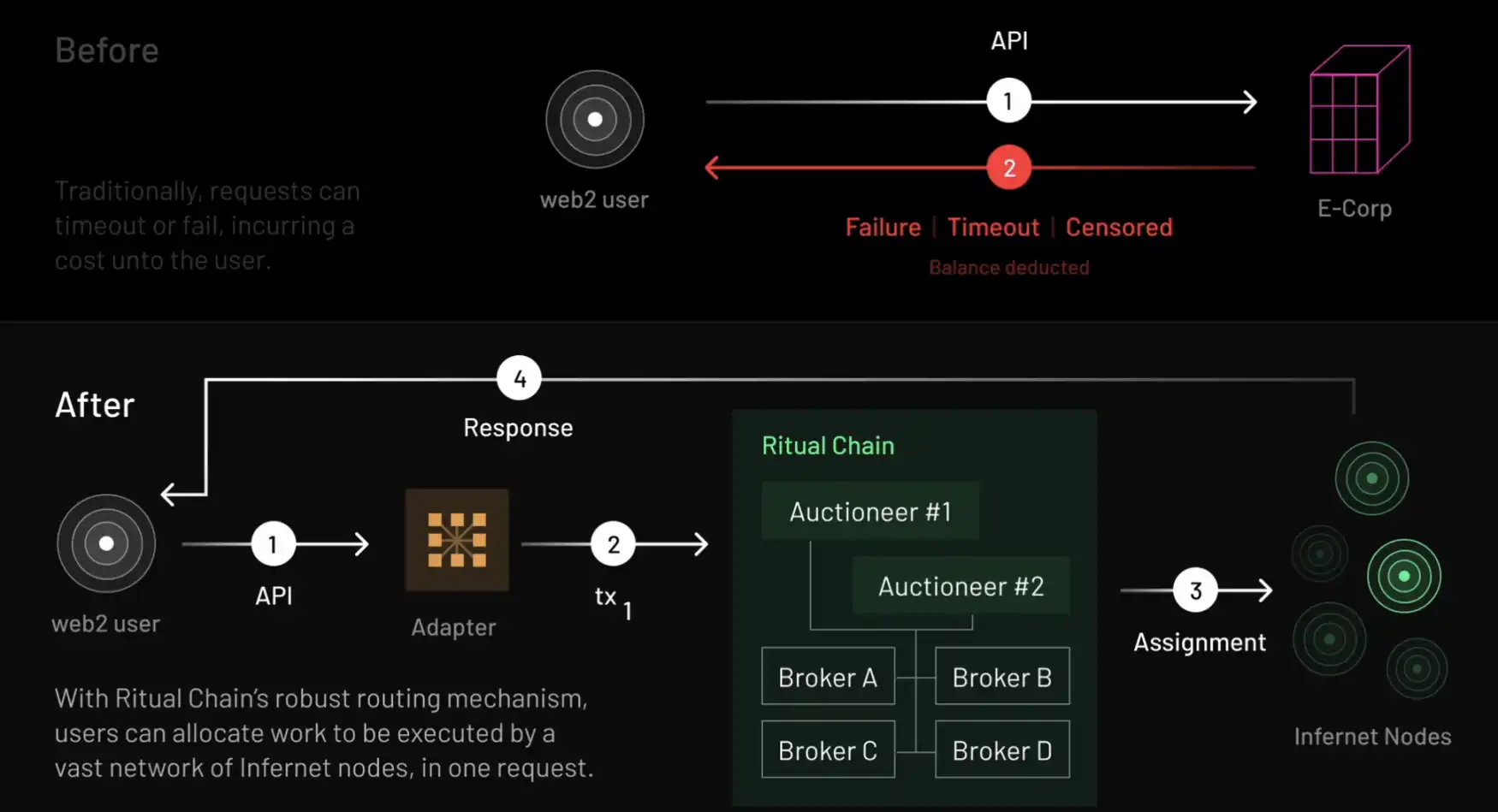

Traditional AI model training has long depended on centralized cloud providers, which control access, pricing, and data custody. This model introduces vulnerabilities around data privacy, censorship risk, and single points of failure. In contrast, decentralized AI compute networks use blockchain protocols to orchestrate secure, verifiable, and permissionless computation. Projects like Gensyn and Akash Network are spearheading this approach, letting developers tap idle GPU resources worldwide and pay for compute in crypto, which can substantially lower costs and barriers to entry.
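Akash Network, for example, matches workloads to providers through a reverse auction: providers bid to host a deployment and the tenant chooses among the bids. The sketch below illustrates only the selection step, under simplifying assumptions. The `Bid` fields, the tokens-per-hour price unit, and the `select_bid` helper are hypothetical and do not reflect Akash's actual deployment format or API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Bid:
    provider: str
    gpus: int              # GPUs offered (hypothetical field)
    price_per_hour: float  # price in tokens per hour (hypothetical unit)


def select_bid(bids, min_gpus, max_price):
    """Reverse auction: keep bids that meet the requirements, pick the cheapest."""
    eligible = [b for b in bids if b.gpus >= min_gpus and b.price_per_hour <= max_price]
    if not eligible:
        return None
    # Ties on price are broken by provider name so the choice is deterministic.
    return min(eligible, key=lambda b: (b.price_per_hour, b.provider))


bids = [
    Bid("provider-a", gpus=8, price_per_hour=12.0),
    Bid("provider-b", gpus=4, price_per_hour=5.0),
    Bid("provider-c", gpus=8, price_per_hour=9.5),
]
winner = select_bid(bids, min_gpus=8, max_price=10.0)
print(winner.provider)  # provider-c
```

Choosing the cheapest eligible bid is only one policy; a tenant could just as well weight provider reputation, region, or uptime history.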

Recent innovations are pushing the boundaries of what’s possible. In July 2025, 0G Labs, in partnership with China Mobile, unveiled the DiLoCoX framework, which enables decentralized training of large language models with more than 100 billion parameters. The team reported a 357x speedup in distributed training compared with a vanilla AllReduce baseline, signaling a new era for blockchain AI infrastructure. For a deep dive on decentralized model training mechanics, see this technical guide.
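DiLoCoX builds on DiLoCo-style low-communication training, in which each worker takes many local optimizer steps between synchronizations, after which the workers' progress is averaged and applied through an "outer" optimizer step. The toy sketch below shows only that core idea on a scalar quadratic; it omits the additional techniques DiLoCoX layers on top, and the function name and hyperparameter values are illustrative assumptions.

```python
def diloco_style_train(targets, rounds=100, inner_steps=10,
                       inner_lr=0.1, outer_lr=0.7, momentum=0.9):
    """Toy DiLoCo-style training of a single scalar parameter.

    Worker i minimizes f_i(w) = 0.5 * (w - targets[i]) ** 2, so the global
    optimum is the mean of the targets.
    """
    w_global = 0.0
    velocity = 0.0
    for _ in range(rounds):
        local_params = []
        for c in targets:
            w = w_global
            for _ in range(inner_steps):  # local steps, no communication
                w -= inner_lr * (w - c)   # gradient of f_i is (w - c)
            local_params.append(w)
        # One communication round: average the locally updated parameters.
        # The "outer gradient" is how far the average moved away from w_global.
        outer_grad = w_global - sum(local_params) / len(local_params)
        # Outer step: SGD with Nesterov momentum applied to the outer gradient.
        velocity = momentum * velocity + outer_grad
        w_global -= outer_lr * (outer_grad + momentum * velocity)
    return w_global


print(round(diloco_style_train([1.0, 2.0, 6.0]), 6))  # 3.0
```

With `inner_steps=10`, workers communicate once every ten local steps instead of after every step, which is where the bandwidth savings of this family of methods come from.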

Key Benefits: Security, Scalability, and Democratization

The advantages of decentralized AI compute networks are multifaceted:

Key Benefits of Decentralized AI Compute Networks

-

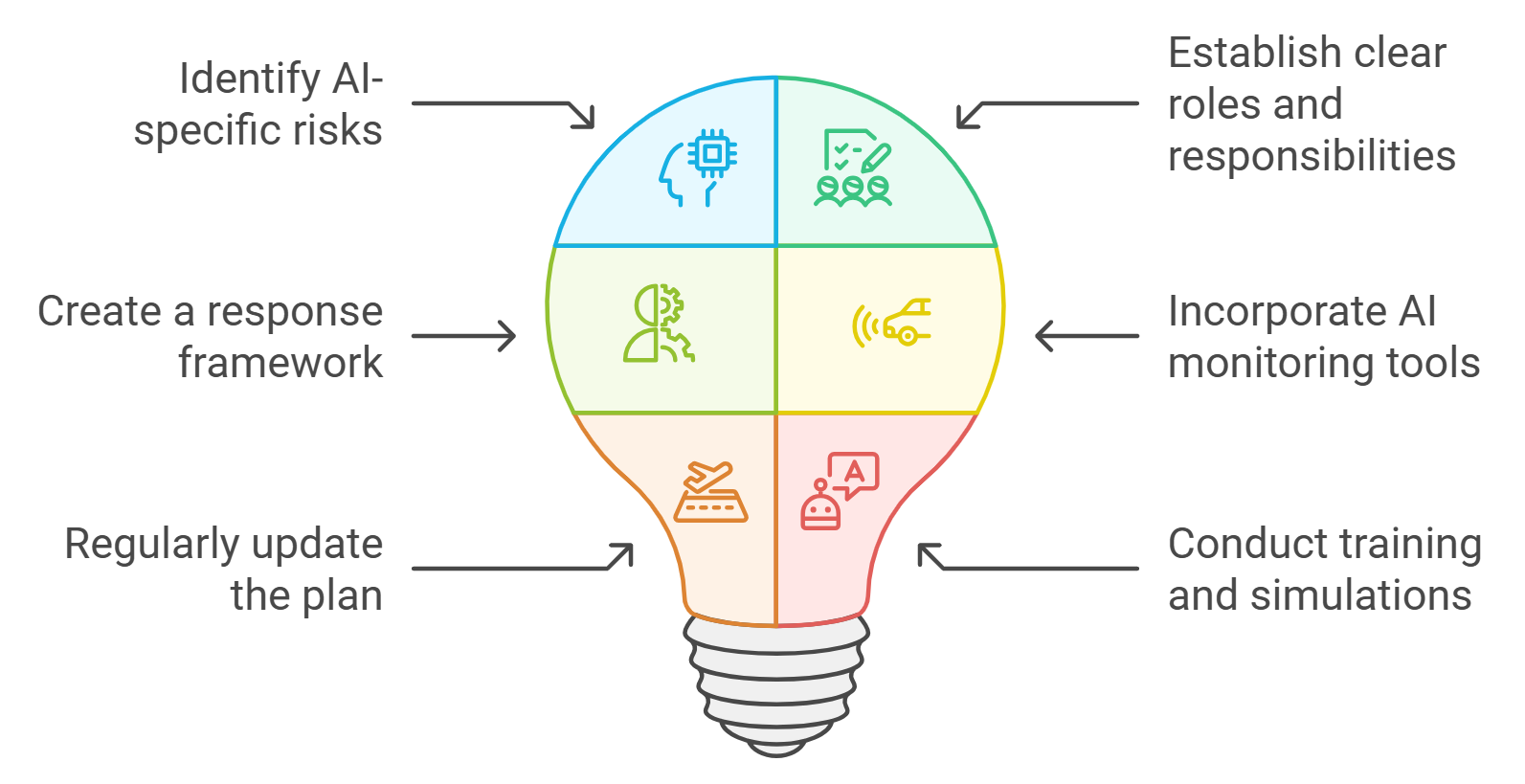

Enhanced Security & Privacy: Decentralized AI compute networks process data locally on individual nodes, ensuring that sensitive information remains private and is less vulnerable to centralized breaches. Technologies like AI-Chain employ Zero-Knowledge Proofs and Fully Homomorphic Encryption to further protect user data.

-

Scalability Through Distributed Computation: By leveraging a global network of nodes equipped with GPUs or specialized hardware, these networks scale AI model training far beyond traditional data centers. Innovations such as 0G Labs’ DiLoCoX framework have demonstrated significant speed improvements in distributed training.

-

Democratized Access to AI Development: Decentralized networks like Gensyn lower barriers for independent researchers and smaller organizations, providing shared access to compute resources and transparent governance, which fosters innovation and inclusivity.

-

Improved Transparency & Accountability: Utilizing blockchain’s immutable ledger, every step in the AI lifecycle—from data collection to deployment—is traceable and auditable, building trust among users and stakeholders.

-

Cost Efficiency & Resource Optimization: By tapping into idle or underutilized GPUs worldwide, decentralized networks reduce the overall cost of AI training and make efficient use of global computational resources.

First, by keeping sensitive data local to individual nodes, these networks can offer strong privacy protections. Technologies such as Zero-Knowledge Proofs (for example, ZK-SNARKs), Fully Homomorphic Encryption (FHE), and Multi-Party Computation (MPC) are being integrated by projects such as AI-Chain to enable privacy-preserving collaborative training. The goal is that raw user data never needs to leave its original environment, mitigating the risks of centralized data aggregation.
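To make the MPC idea concrete, the sketch below uses additive secret sharing, a basic MPC building block for secure aggregation: each node splits its gradient value into random shares, and only the sum across all nodes is ever reconstructed. This is a generic textbook construction, not AI-Chain's implementation; it assumes honest-but-curious parties and no node dropouts, simulates all parties in one process, and the field modulus and fixed-point scale are illustrative choices.

```python
import secrets

PRIME = 2**61 - 1  # Mersenne prime used as the field modulus
SCALE = 10**6      # fixed-point scale for encoding real-valued gradients


def encode(x: float) -> int:
    return round(x * SCALE) % PRIME


def decode(v: int) -> float:
    if v > PRIME // 2:  # upper half of the field represents negative numbers
        v -= PRIME
    return v / SCALE


def share(value: int, n_parties: int) -> list:
    """Split value into n random additive shares that sum to it mod PRIME."""
    shares = [secrets.randbelow(PRIME) for _ in range(n_parties - 1)]
    shares.append((value - sum(shares)) % PRIME)
    return shares


def secure_sum(private_values):
    """Sum field elements via additive secret sharing.

    In a real deployment, share j of every node would be sent to party j;
    here all parties are simulated in one process.
    """
    n = len(private_values)
    all_shares = [share(v, n) for v in private_values]  # row i = node i's shares
    # Party j adds the j-th share from every node; each share alone looks random.
    partials = [sum(row[j] for row in all_shares) % PRIME for j in range(n)]
    # Combining the partial sums reveals only the total.
    return sum(partials) % PRIME


gradients = [0.25, -1.5, 0.75]  # one private gradient value per node
print(decode(secure_sum([encode(g) for g in gradients])))  # -0.5
```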

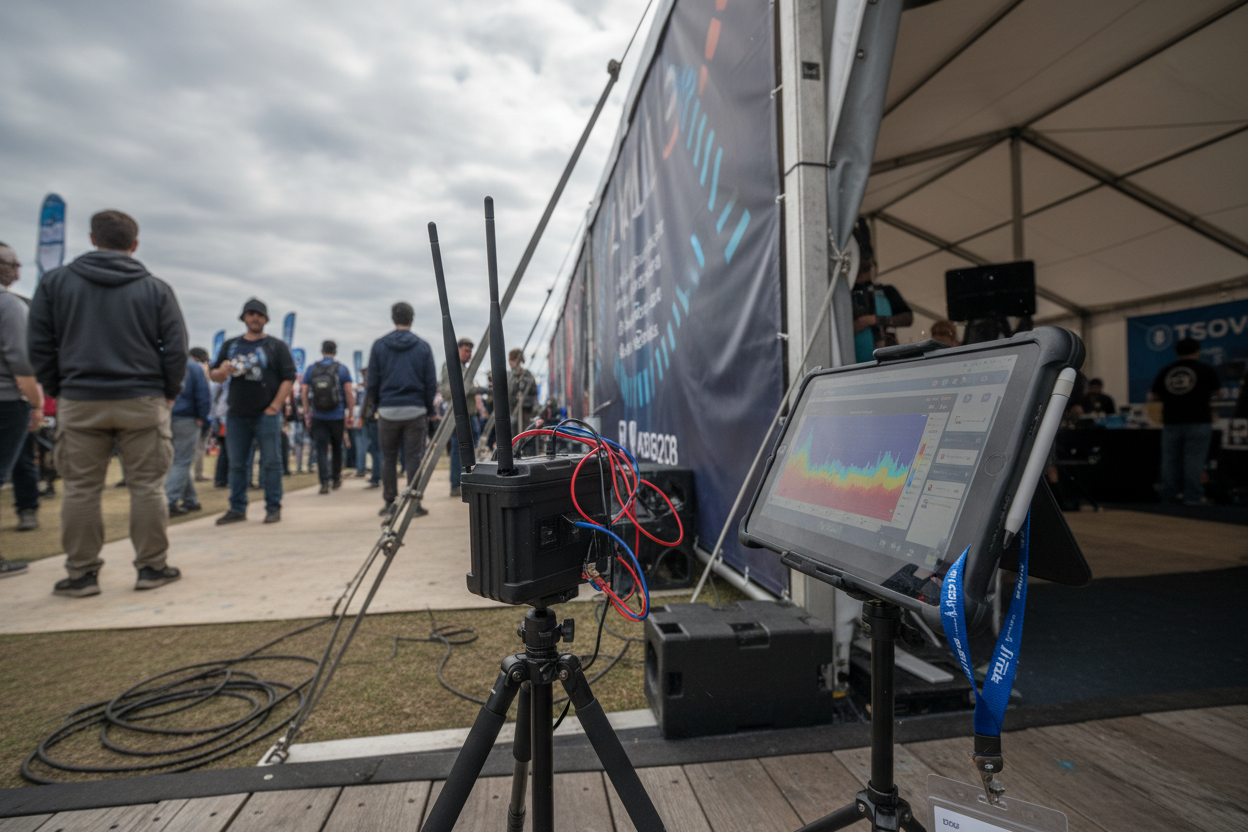

Second, because these networks are distributed, compute tasks can be parallelized across thousands of nodes equipped with GPUs or specialized hardware. This architecture supports scaling to very large models and also makes the infrastructure more resilient, with no single point of failure and greater resistance to censorship.
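One way that resilience shows up in practice is work reassignment: if a node stops responding, its share of the job goes back into the queue for a surviving node. The sketch below is a minimal, hypothetical scheduler illustrating that idea; `run_shards` and the `is_alive` callback (a stand-in for a heartbeat or timeout check) are assumptions for illustration, not any specific protocol's scheduler.

```python
from collections import deque


def run_shards(shards, nodes, is_alive):
    """Round-robin data shards over nodes, requeueing work from failed nodes.

    is_alive(node) stands in for a heartbeat or timeout check (hypothetical).
    Returns a mapping of shard -> node that completed it.
    """
    queue = deque(shards)
    live = list(nodes)
    completed = {}
    turn = 0
    while queue:
        if not live:
            raise RuntimeError("no live nodes left to run remaining shards")
        shard = queue.popleft()
        node = live[turn % len(live)]
        turn += 1
        if not is_alive(node):
            live.remove(node)    # drop the failed node from the pool
            queue.append(shard)  # and put its shard back in the queue
            continue
        completed[shard] = node
    return completed


shards = [f"shard-{i}" for i in range(6)]
result = run_shards(shards, ["n0", "n1", "n2"], is_alive=lambda n: n != "n1")
print(sorted(set(result.values())))  # ['n0', 'n2']
```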

Third, decentralized AI compute broadens access. Independent researchers, startups, and organizations without deep pockets can now reach high-end compute resources and take part in model training. Transparent governance structures and crypto-powered incentives aim to keep participation open and equitable, driving innovation across the AI ecosystem.

Real-World Protocols: From Gensyn to AI-Chain

Several leading protocols illustrate the diversity and maturity of the decentralized AI compute landscape:

- Gensyn: Orchestrates scalable machine learning training across distributed nodes, lowering costs and reducing reliance on centralized providers.

- AI-Chain: Pioneers privacy-preserving training by integrating advanced cryptographic techniques, allowing encrypted computation and verifiable model outputs.

- 0G Labs DiLoCoX: Reported a 357x speedup in decentralized training of large language models, demonstrating the viability of blockchain-based AI infrastructure at scale.

These projects show that decentralized AI compute networks are moving beyond theoretical constructs toward working systems for the next generation of on-chain AI model training. For further exploration of how these networks are transforming blockchain-based machine learning, visit our in-depth analysis.

Despite these advances, the path toward fully decentralized, on-chain AI model training is not without friction. Bandwidth constraints remain a technical hurdle, especially when training models with hundreds of billions of parameters across geographically dispersed nodes. Protocols like DiLoCoX address this by reducing communication overhead and compressing the data exchanged between nodes, but scaling to Internet-wide deployments will demand ongoing innovation.
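Some back-of-the-envelope arithmetic shows why bandwidth dominates. The sketch below assumes 2 bytes per parameter (fp16/bf16) and one uncompressed transfer over a single 1 Gbps link, ignoring collective-communication patterns and overlap. It then shows top-k sparsification with error feedback, a widely used generic compression technique; it is not claimed to be the specific scheme DiLoCoX uses.

```python
def sync_seconds(n_params, bytes_per_param, link_gbps):
    """Time to push one uncompressed copy of the gradients over a single link."""
    bits = n_params * bytes_per_param * 8
    return bits / (link_gbps * 1e9)


def topk_compress(grad, k, residual):
    """Top-k sparsification with error feedback.

    Adds the residual carried over from earlier rounds, sends only the k
    largest-magnitude entries (as index -> value), and keeps everything that
    was not sent as the new residual so it is not lost.
    """
    corrected = [g + r for g, r in zip(grad, residual)]
    order = sorted(range(len(corrected)), key=lambda i: abs(corrected[i]), reverse=True)
    sent = {i: corrected[i] for i in order[:k]}
    new_residual = [0.0 if i in sent else v for i, v in enumerate(corrected)]
    return sent, new_residual


# 100B parameters, 2 bytes each (fp16/bf16), over a 1 Gbps link:
print(sync_seconds(100e9, 2, 1.0))  # 1600.0 seconds, roughly 27 minutes per sync

sent, residual = topk_compress([0.1, -0.9, 0.3, 0.05], k=2, residual=[0.0] * 4)
print(sent)      # {1: -0.9, 2: 0.3}
print(residual)  # [0.1, 0.0, 0.0, 0.05]
```

Sending only 1% of entries cuts the value payload roughly 100x, although the indices add some overhead, and error feedback ensures the dropped entries are eventually applied rather than discarded.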

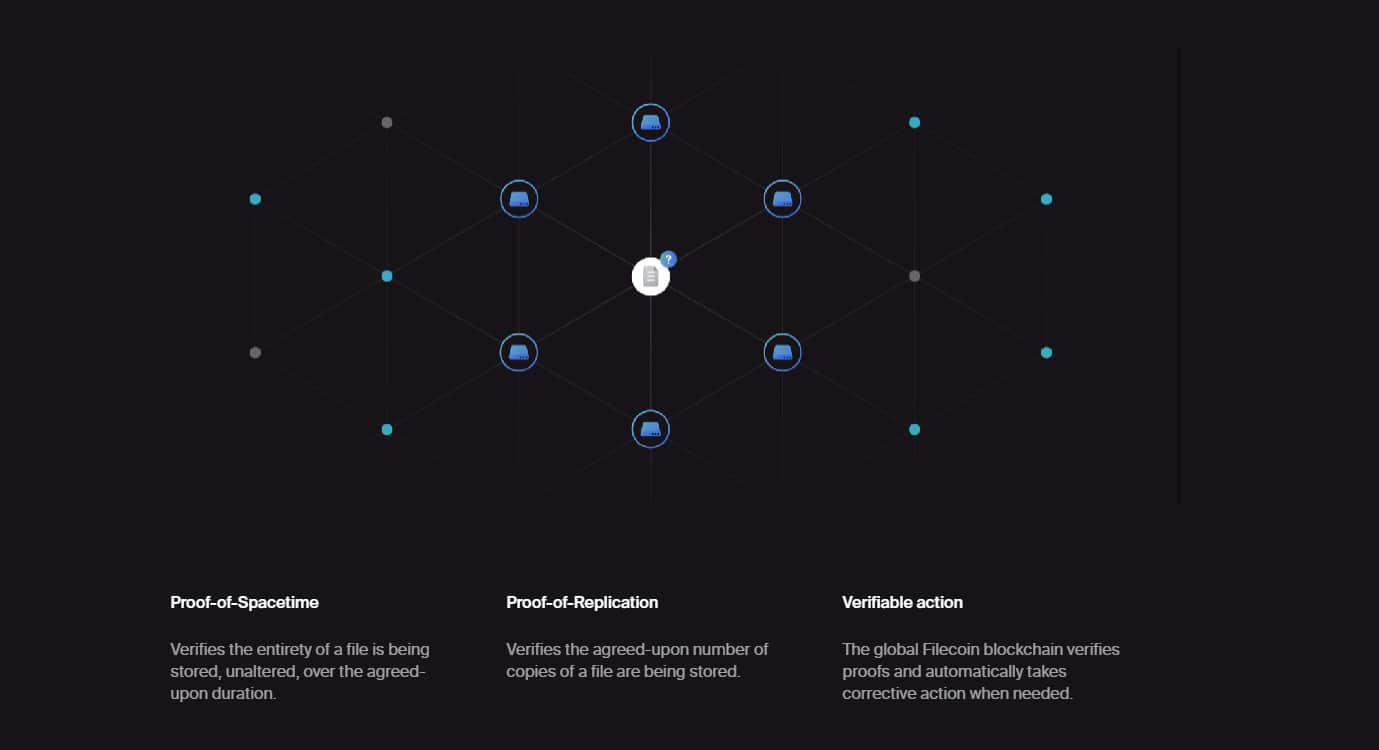

Equally critical is the challenge of trustless result verification. In decentralized settings, ensuring that off-chain compute nodes perform training tasks honestly and return valid results is nontrivial. Solutions such as cryptographic proofs of computation, reputation systems, and consensus-based validation are emerging as foundational primitives for secure AI compute DePIN. These mechanisms are essential for building user trust and ensuring that model training remains transparent and tamper-resistant.
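Consensus-based validation can be as simple as sending the same task to several nodes and accepting the result reported by a majority. The sketch below compares SHA-256 digests of submitted results and updates a hypothetical reputation score. It assumes results are bit-for-bit reproducible across nodes, which GPU training often does not guarantee without extra care; the reward and penalty values are illustrative.

```python
import hashlib
from collections import Counter


def result_digest(result: bytes) -> str:
    """Fingerprint a submitted result (e.g., serialized model weights)."""
    return hashlib.sha256(result).hexdigest()


def validate_by_majority(submissions, reputation, reward=1, penalty=1):
    """Accept the result digest reported by a strict majority of nodes.

    submissions: node id -> raw result bytes for the same task.
    reputation: node id -> score, updated in place.
    Returns the accepted digest, or None if no strict majority exists.
    """
    if not submissions:
        return None
    digests = {node: result_digest(r) for node, r in submissions.items()}
    winner, votes = Counter(digests.values()).most_common(1)[0]
    if votes * 2 <= len(digests):
        return None  # no strict majority: escalate to dispute resolution
    for node, digest in digests.items():
        delta = reward if digest == winner else -penalty
        reputation[node] = reputation.get(node, 0) + delta
    return winner


reputation = {}
accepted = validate_by_majority(
    {"node-a": b"weights-v1", "node-b": b"weights-v1", "node-c": b"tampered"},
    reputation,
)
print(reputation)  # {'node-a': 1, 'node-b': 1, 'node-c': -1}
```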

The Role of Crypto Incentives and Governance

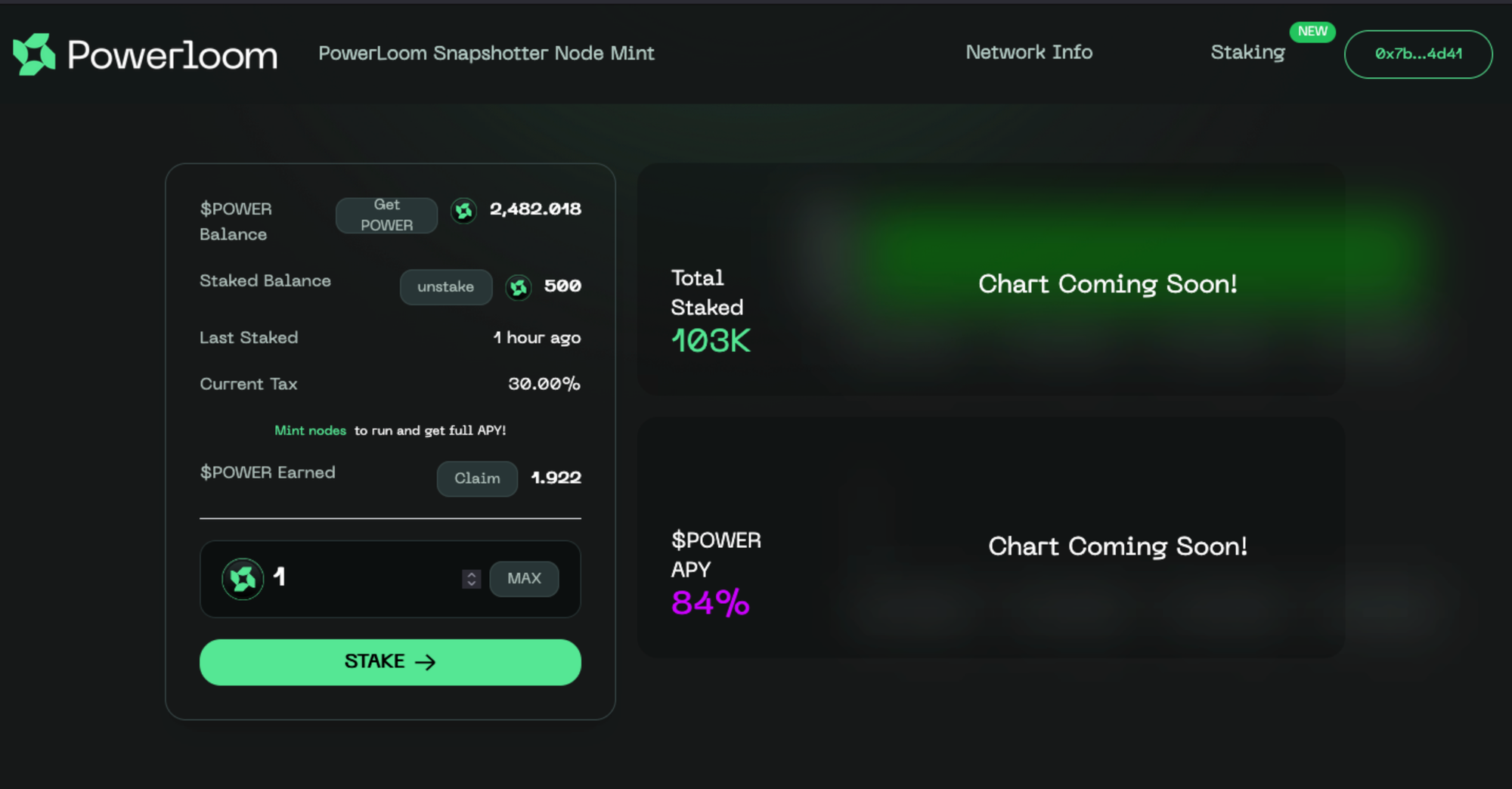

One of the most transformative aspects of decentralized AI compute networks is the integration of crypto-powered incentive structures. By rewarding node operators in native tokens, networks like Akash and Gensyn align economic incentives with network health, compute reliability, and data security. This approach not only lowers the cost of AI development but also fosters a vibrant, permissionless ecosystem where anyone can contribute resources and earn rewards.
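A simple way to tie rewards to useful contribution is to split a fixed per-epoch token emission pro rata by verified compute. The sketch below assumes a hypothetical emission schedule and "verified compute units"; it is not Akash's or Gensyn's actual tokenomics.

```python
def distribute_rewards(epoch_emission, verified_work):
    """Split a fixed per-epoch emission pro rata by verified compute units.

    Amounts are integer token base units; rounding down can leave a small
    remainder, which this sketch simply leaves unallocated.
    """
    total = sum(verified_work.values())
    if total == 0:
        return {node: 0 for node in verified_work}
    return {node: epoch_emission * units // total
            for node, units in verified_work.items()}


print(distribute_rewards(1000, {"gpu-node-1": 3, "gpu-node-2": 1, "idle-node": 0}))
# {'gpu-node-1': 750, 'gpu-node-2': 250, 'idle-node': 0}
```

Integer arithmetic keeps payouts exact and never over-allocates the emission, which matters when amounts are settled on-chain.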

Transparent, on-chain governance further empowers the community to dictate network upgrades, protocol parameters, and dispute resolution. This decentralized approach stands in stark contrast to the opaque policies of traditional cloud providers, giving stakeholders direct influence over the evolution of AI infrastructure.
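One common pattern for on-chain governance is token-weighted voting with a quorum and an approval threshold. The sketch below tallies a single proposal under that rule; the `tally` helper and its default quorum and threshold values are hypothetical, and production governance modules add safeguards that this sketch omits.

```python
def tally(votes, balances, total_supply, quorum=0.2, threshold=0.5):
    """Token-weighted vote on a single proposal.

    votes: address -> "yes" or "no"; each vote is weighted by the voter's balance.
    A proposal needs turnout of at least quorum * total_supply, and more than
    threshold of the participating weight voting yes.
    """
    yes = sum(balances[a] for a, v in votes.items() if v == "yes")
    no = sum(balances[a] for a, v in votes.items() if v == "no")
    turnout = yes + no
    if turnout < quorum * total_supply:
        return "no_quorum"
    return "passed" if yes > threshold * turnout else "rejected"


balances = {"alice": 500, "bob": 300, "carol": 200}
print(tally({"alice": "yes", "bob": "no"}, balances, total_supply=1000))  # passed
```

Because weight follows balances, large holders carry proportionally more influence; that trade-off is a known design tension in token-based governance.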

Top Crypto-Powered Incentives in Decentralized AI Compute

- Token Rewards for Compute Providers: Platforms like Akash Network and Gensyn incentivize node operators by offering native crypto tokens in exchange for supplying GPU or CPU power to train AI models. This rewards individuals and organizations for sharing idle hardware, fueling network growth.

- Data Contribution and Privacy Bounties: AI-Chain utilizes crypto bounties to reward users who contribute valuable datasets for training, with privacy preserved through Zero-Knowledge Proofs and encryption. This encourages high-quality, privacy-respecting data sharing.

- Governance Participation Rewards: Decentralized AI networks like Prime Intellect and GaiaNet offer token-based voting and proposal systems, rewarding active community members for shaping network policies and development priorities.

- Proof-of-Useful-Work Validation: Protocols such as Gensyn implement cryptographic proofs to verify that compute providers have performed legitimate AI training tasks, distributing crypto rewards only for verifiable, useful work. This ensures network reliability and deters fraudulent activity.
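Verifying useful work does not necessarily require re-running an entire job. The sketch below shows a generic probabilistic spot-check: the provider publishes hash commitments to checkpoints, and a verifier re-executes a random sample of training segments and compares them with the commitments. It is a simplified illustration, not Gensyn's actual verification protocol; it assumes deterministic, reproducible segments, and `train_segment`, `produce_checkpoints`, and the toy state update are hypothetical stand-ins.

```python
import hashlib
import random

MODULUS = 1_000_003  # arbitrary modulus for the toy state update


def train_segment(state, steps):
    """Deterministic stand-in for one segment of training (hypothetical)."""
    for _ in range(steps):
        state = (state * 31 + 7) % MODULUS
    return state


def commit(state):
    """Hash commitment to a checkpoint, as a provider might publish it."""
    return hashlib.sha256(str(state).encode()).hexdigest()


def produce_checkpoints(init_state, n_segments, steps, cheat_at=None):
    """Provider side: run every segment, optionally faking one of them."""
    states = [init_state]
    for i in range(n_segments):
        s = train_segment(states[-1], steps)
        if i == cheat_at:
            s += 1  # simulate a provider returning a wrong result for segment i
        states.append(s)
    return states


def spot_check(commitments, reveal, steps, n_samples, rng):
    """Verifier side: re-execute randomly sampled segments.

    reveal(i) returns the provider's claimed state at checkpoint i.
    Returns (True, None) if all sampled segments match, else (False, segment).
    """
    for i in rng.sample(range(len(commitments) - 1), n_samples):
        start = reveal(i)
        if commit(start) != commitments[i]:
            return False, i  # revealed state does not match its commitment
        if commit(train_segment(start, steps)) != commitments[i + 1]:
            return False, i  # recomputation disagrees with the claimed result
    return True, None


honest = produce_checkpoints(1, n_segments=10, steps=5)
print(spot_check([commit(s) for s in honest], lambda i: honest[i], 5, 3, random.Random(0)))
# (True, None)
```

If a provider fakes one of N segments and the verifier samples k of them, the fault is caught with probability k/N per check, so the penalty for being caught has to outweigh the gain from cheating at that detection rate.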

Looking Ahead: Toward Scalable, Ethical AI

As we move into 2026, the convergence of blockchain and AI is laying the groundwork for a more inclusive and ethically grounded AI ecosystem. Decentralized compute networks are not only making large-scale model training more accessible but also introducing new standards for privacy, transparency, and accountability. By anchoring each stage of the AI lifecycle on-chain, from data provenance to model deployment, these networks can give developers and end users stronger, independently verifiable assurances.
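In practice, anchoring a lifecycle step on-chain usually means publishing a compact commitment, such as a hash or Merkle root, rather than the data itself. The sketch below computes a Merkle root over dataset shards; the leaf and node prefixes and the odd-level handling are choices made for this sketch, and actually recording the root on a chain is not shown.

```python
import hashlib


def _h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def merkle_root(chunks):
    """Merkle root over data chunks, e.g. dataset shards or checkpoint files.

    Leaves and internal nodes use different prefixes (0x00 / 0x01) so a leaf
    hash cannot be confused with an internal node. On odd-sized levels the
    last hash is paired with itself.
    """
    if not chunks:
        raise ValueError("need at least one chunk")
    level = [_h(b"\x00" + c) for c in chunks]
    while len(level) > 1:
        if len(level) % 2 == 1:
            level.append(level[-1])
        level = [_h(b"\x01" + level[i] + level[i + 1])
                 for i in range(0, len(level), 2)]
    return level[0]


shards = [b"shard-0", b"shard-1", b"shard-2"]
root = merkle_root(shards)
# The 32-byte root is the compact commitment that could be recorded on-chain.
print(root.hex())
```

Anyone holding the shards can recompute the root and compare it with the published value; changing a single byte in any shard produces a different root.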

The open-source ethos underpinning these protocols accelerates collective progress. Projects like Internet Computer (ICP) and 0G Labs are demonstrating that it is possible to run complex AI models as smart contracts, with verifiable integrity and censorship resistance. As more enterprises and researchers migrate workloads to decentralized infrastructure, we can expect an explosion of innovation in areas such as federated learning, autonomous on-chain agents, and privacy-preserving analytics.

For those looking to dive deeper into the mechanics and emerging best practices of decentralized model training, our technical review at How Decentralized GPU Networks Power Scalable AI Model Training offers further insights.

Ultimately, decentralized AI compute networks are shifting the paradigm from centralized control to community-driven innovation. As technical barriers fall and network effects compound, these networks could become the backbone of next-generation blockchain AI infrastructure, enabling secure, scalable, and democratized on-chain model training for all.