The explosive growth of artificial intelligence has ignited a global arms race for GPU compute. Yet, as demand surges, traditional cloud providers have struggled to keep pricing in check and infrastructure open. A new paradigm is emerging: decentralized GPU networks, built atop DePIN (Decentralized Physical Infrastructure Networks) and EdgeAI frameworks. These networks aggregate idle GPUs from data centers and individuals worldwide, cutting AI compute costs by up to 70% while unlocking a degree of liquidity and scalability that centralized clouds have not offered.

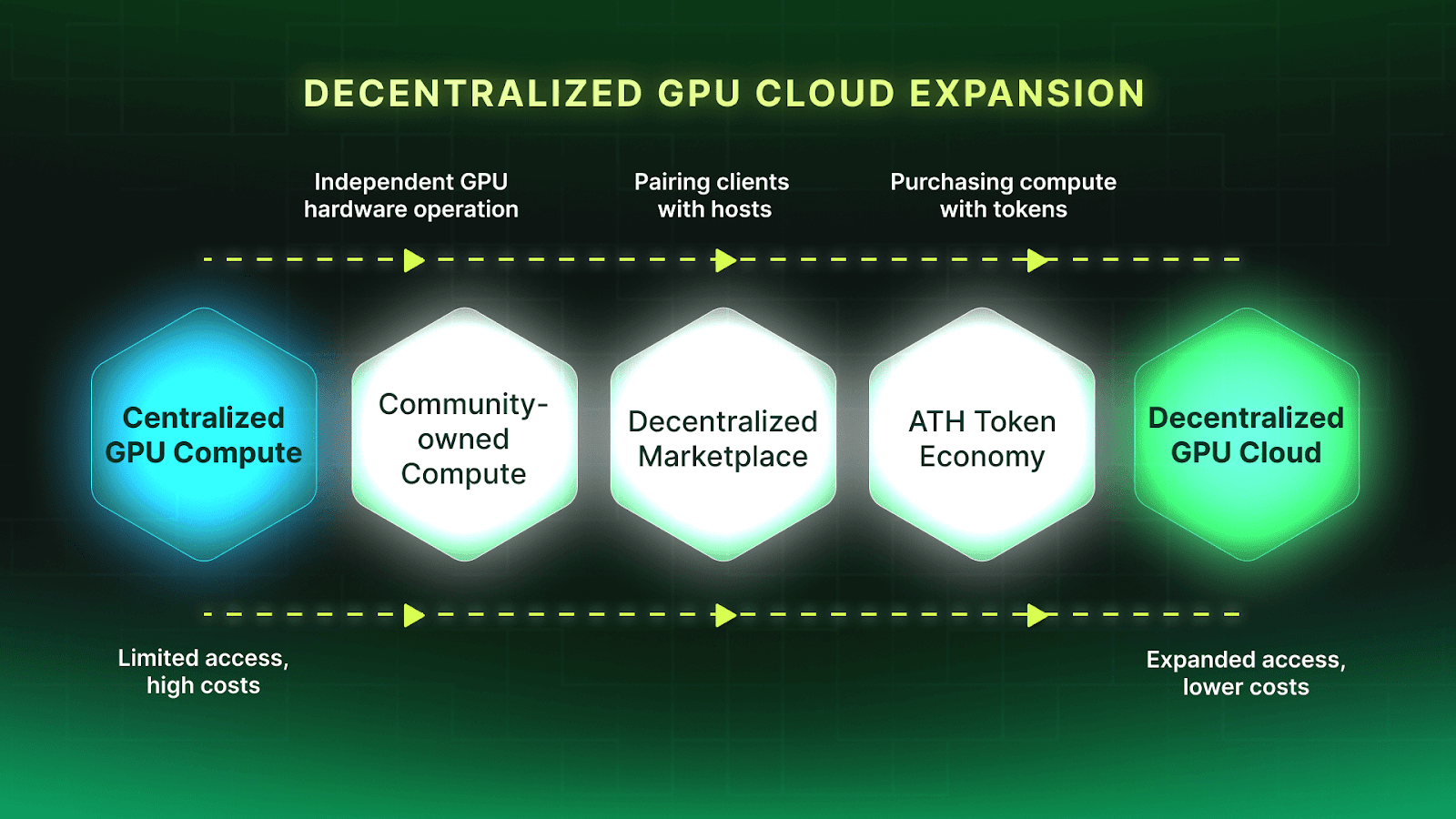

From Centralized Clouds to Decentralized GPU Networks

Legacy cloud giants have long dictated the economics of AI compute. Their vertically integrated stacks create bottlenecks and drive up prices, especially during periods of high demand. For context, as of November 6, 2025, shares of GPU market leaders NVIDIA Corp and AMD were trading at $189.37 and $242.27 respectively, reflecting the immense value placed on GPU hardware in today’s AI economy.

Decentralized GPU networks flip this model on its head. Platforms such as io.net, Vertical Cloud, and Argentum AI tap into underutilized GPUs across continents, forming an on-demand marketplace for AI computation. According to recent data, io.net delivers AI compute at 90% less per TFLOP than centralized alternatives, a drop large enough to let both startups and enterprises scale without prohibitive costs. Vertical Cloud users report up to 45% lower costs without sacrificing performance or accessibility.

This shift isn’t just about economics; it’s about breaking down barriers to innovation by making high-performance compute accessible globally. For a deeper dive into how these cost structures disrupt legacy cloud monopolies, see this analysis.

The Mechanics: Token Incentives and Compute Liquidity

The engine behind decentralized GPU networks is a sophisticated blend of blockchain incentives and tokenized resource markets. Providers stake their GPUs in a network and receive crypto rewards proportional to their contributions, a model that incentivizes participation while ensuring reliability for buyers.
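To make "rewards proportional to their contributions" concrete, here is a minimal sketch that splits one epoch's reward pool pro rata, so provider i receives R · c_i / Σc_j. The function name `distribute_rewards`, the use of GPU-hours as the contribution metric, and the token amounts are illustrative assumptions, not any specific network's reward formula; real protocols may also weight by GPU class, uptime, or verified work.

```python
from typing import Dict


def distribute_rewards(contributions: Dict[str, float], reward_pool: float) -> Dict[str, float]:
    """Split one epoch's reward pool pro rata by each provider's contributed GPU-hours.

    Hypothetical model: real networks may also weight by GPU class, uptime, or verified work.
    """
    total = sum(contributions.values())
    if total <= 0:
        # No compute was delivered this epoch, so no provider earns anything.
        return {provider: 0.0 for provider in contributions}
    return {provider: reward_pool * hours / total for provider, hours in contributions.items()}


# Illustrative epoch: three providers sharing a pool of 1,000 tokens.
epoch = {"datacenter-a": 600.0, "home-rig-b": 300.0, "laptop-c": 100.0}
print(distribute_rewards(epoch, 1000.0))
# → {'datacenter-a': 600.0, 'home-rig-b': 300.0, 'laptop-c': 100.0}
```

Because each share is a fraction of the same total, the payouts always sum to the pool (up to floating-point rounding), which is what keeps the incentive "proportional" regardless of how many providers join.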

This tokenized approach creates real-time liquidity for computational resources. Users can easily buy or lease GPU power as needed, while providers maximize the utility (and ROI) of their hardware investments, whether they’re running NVIDIA’s latest cards or older models that would otherwise sit idle.
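One way to picture this liquidity is a buyer's request being filled from many providers' listed capacity, cheapest first, much like an order book. The sketch below assumes a hypothetical `Offer` record (GPU-hours listed plus an asking price per GPU-hour) and a greedy `fill_request` routine; actual marketplaces may match on GPU type, region, or reputation as well as price.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Offer:
    provider: str
    gpu_hours: float       # capacity the provider has listed
    price_per_hour: float  # asking price per GPU-hour (hypothetical token units)


def fill_request(offers: List[Offer], gpu_hours_needed: float) -> Tuple[List[Tuple[str, float]], float]:
    """Fill a buyer's request from the cheapest offers first; return (allocations, total_cost)."""
    allocations: List[Tuple[str, float]] = []
    total_cost = 0.0
    remaining = gpu_hours_needed
    for offer in sorted(offers, key=lambda o: o.price_per_hour):
        if remaining <= 0:
            break
        take = min(remaining, offer.gpu_hours)
        allocations.append((offer.provider, take))
        total_cost += take * offer.price_per_hour
        remaining -= take
    if remaining > 0:
        raise ValueError(f"insufficient capacity: {remaining} GPU-hours unfilled")
    return allocations, total_cost


offers = [
    Offer("a100-datacenter", 8.0, 1.50),
    Offer("rtx3090-home", 4.0, 0.40),
    Offer("rtx4090-home", 6.0, 0.60),
]
allocations, cost = fill_request(offers, 10.0)
print(allocations, round(cost, 2))
# → [('rtx3090-home', 4.0), ('rtx4090-home', 6.0)] 5.2
```

In this toy market the older consumer cards are filled first simply because they are listed cheaper, which is the mechanism by which otherwise idle hardware earns a return.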

Notably, platforms like OODA AI empower individuals and small businesses to monetize idle GPUs with minimal friction, further democratizing access to the AI economy.

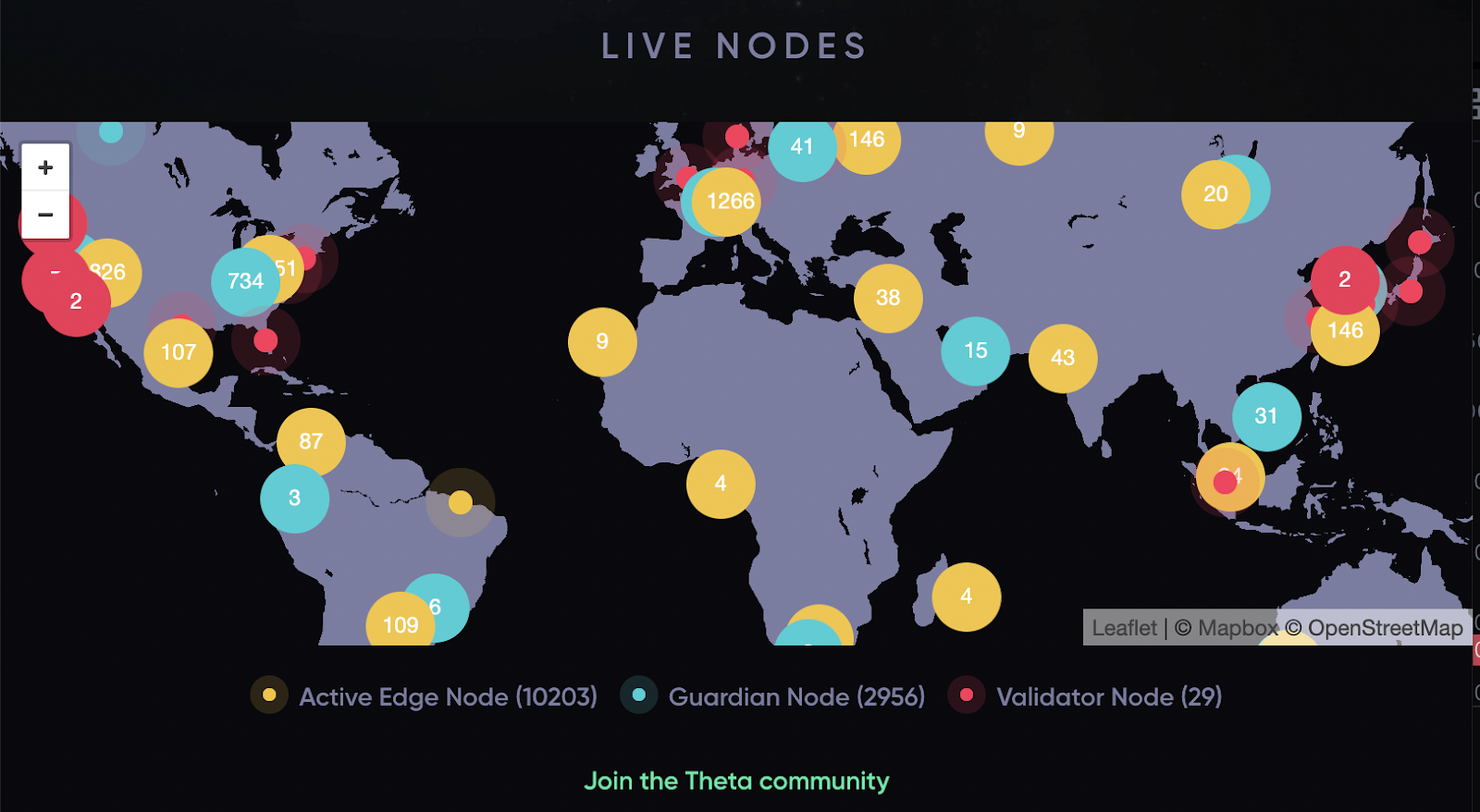

EdgeAI Meets DePIN: Real-World Impact at the Network’s Edge

The integration of EdgeAI with DePIN is redefining where, and how, AI workloads get processed. Instead of routing all data through distant hyperscale clouds, EdgeAI processes information right where it’s generated: at IoT endpoints, mobile devices, or local servers.

This architecture offers a trifecta of benefits: reduced latency (with sub-50ms inference now achievable), enhanced data privacy (since sensitive information need not leave the edge), and significant bandwidth savings. DePIN protocols secure these transactions on-chain while orchestrating the flow of tokens between buyers and sellers in real time.
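The latency and privacy trade-off can be sketched as a routing rule: among nodes whose network round trip plus inference time fits the 50 ms budget, prefer an edge node (so raw data stays local), and only otherwise fall back to the fastest remote node. The `Node` fields, the node names, and the timings below are hypothetical; a real orchestrator would measure these values rather than hard-code them.

```python
from dataclasses import dataclass
from typing import List, Optional

LATENCY_BUDGET_MS = 50.0  # the sub-50 ms inference target cited above


@dataclass
class Node:
    name: str
    network_rtt_ms: float  # round trip from the data source to this node
    inference_ms: float    # model execution time on this node's hardware
    is_edge: bool          # True if raw data stays on the local device/site


def total_latency(node: Node) -> float:
    return node.network_rtt_ms + node.inference_ms


def pick_node(nodes: List[Node], budget_ms: float = LATENCY_BUDGET_MS) -> Optional[Node]:
    """Return a node that meets the latency budget, preferring edge nodes for privacy."""
    eligible = [n for n in nodes if total_latency(n) <= budget_ms]
    if not eligible:
        return None  # nothing meets the budget; the caller decides how to degrade
    # Sort key: edge nodes first (False < True), then lowest end-to-end latency.
    return min(eligible, key=lambda n: (not n.is_edge, total_latency(n)))


nodes = [
    Node("cloud-region", network_rtt_ms=80.0, inference_ms=10.0, is_edge=False),
    Node("metro-gpu", network_rtt_ms=12.0, inference_ms=15.0, is_edge=False),
    Node("onsite-gateway", network_rtt_ms=2.0, inference_ms=35.0, is_edge=True),
]
print(pick_node(nodes).name)
# → onsite-gateway
```

Note that the distant cloud region is excluded by network round trip alone, even though its raw inference time is the shortest; that is the latency argument for processing at the edge.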

For example, Kava.io reports that DePIN-powered GPU providers already deliver up to 70% cost savings for customers in production environments, a figure corroborated by multiple independent benchmarks across 2025.

Pushing Past Capacity Constraints: The New Scalability Frontier

The beauty of decentralized networks lies in aggregation, not just within major data centers but across thousands of smaller contributors worldwide. This model eliminates traditional capacity constraints faced by centralized clouds during peak periods or hardware shortages.

The result? Consistent availability for even the most demanding AI workloads, at predictable prices untethered from legacy vendor lock-in models. As more organizations explore these alternatives, real-world case studies are emerging that showcase dramatic improvements in project timelines and ROI.

Beyond raw cost savings, decentralized GPU networks are catalyzing a new era of AI compute accessibility. By enabling anyone with surplus GPU resources to participate, these networks create a resilient, global mesh that adapts dynamically to demand spikes and hardware cycles. This is especially impactful for AI startups and research teams that have historically been priced out of large-scale model training or high-frequency inference tasks.

Top Advantages of Decentralized GPU Networks

- Massive Cost Reduction: Platforms like io.net and Vertical Cloud offer AI compute at up to 70–90% lower costs than centralized cloud providers, enabling affordable access to high-performance GPU clusters.
- Global Accessibility & Scalability: Decentralized networks aggregate GPUs from data centers and individuals worldwide, ensuring on-demand access and eliminating capacity bottlenecks for AI workloads. Platforms like OODA AI let anyone monetize idle GPUs, democratizing participation.
- Edge Processing & Data Privacy: Solutions like EdgeAI and EMC (Edge Matrix Chain) process data directly at the edge, reducing latency and enhancing privacy by keeping sensitive data closer to its source.

But the benefits extend further. Tokenized compute marketplaces foster transparent pricing and fair competition among providers. Instead of opaque pricing tiers set by cloud giants, users gain access to real-time rates driven by actual supply and demand. This liquidity also allows for micro-transactions, leasing compute power by the minute or even second, which is ideal for bursty workloads or rapid prototyping cycles.
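Why per-minute or per-second leasing matters for bursty workloads comes down to rounding: usage is typically billed in whole increments, so coarse increments charge for idle time. This sketch compares hourly and per-second granularity for a short burst; the `billed_cost` helper and the rate of 2.0 tokens per GPU-hour are illustrative assumptions, not any platform's published pricing.

```python
import math


def billed_cost(runtime_s: float, rate_per_hour: float, granularity_s: int) -> float:
    """Cost of a job when usage is rounded up to whole billing increments of granularity_s seconds."""
    increments = math.ceil(runtime_s / granularity_s)
    return increments * granularity_s / 3600 * rate_per_hour


# A hypothetical 90-second burst at an illustrative rate of 2.0 tokens per GPU-hour.
hourly = billed_cost(90, 2.0, granularity_s=3600)
per_second = billed_cost(90, 2.0, granularity_s=1)
print(round(hourly, 4), round(per_second, 4))
# → 2.0 0.05
```

For long jobs the two converge; the gap only matters when runs are short and frequent, which is exactly the prototyping pattern described above.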

The Role of Major Players and Current Market Data

As of November 6, 2025, the importance of GPUs in AI infrastructure is underscored by chipmakers’ share prices: NVIDIA Corp (NVDA) at $189.37, AMD at $242.27, Intel at $37.41, Taiwan Semiconductor (TSM) at $290.40, and Qualcomm at $174.06. The decentralized model leverages both state-of-the-art cards and legacy hardware, letting contributors with older GPUs monetize assets that would otherwise simply depreciate.

Centralized vs Decentralized GPU Compute Costs in 2025

| Compute Provider Type | Example Platform(s) | Average Cost per TFLOP | Cost Savings vs Centralized | Scalability & Accessibility | Payment Method | Data Privacy |
|---|---|---|---|---|---|---|
| Centralized Cloud | AWS, Google Cloud, Azure | $2.00 per TFLOP | — | Limited by provider capacity | Bank account, credit card | Provider-controlled |
| Decentralized GPU Network | io.net, Vertical Cloud, OODA AI | $0.20 per TFLOP (io.net) | Up to 90% cheaper (io.net), 45% cheaper (Vertical Cloud) | Global, on-demand, scalable | On-chain wallet, crypto | User-controlled, enhanced privacy |
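The savings column follows directly from the cost column: (2.00 − 0.20) / 2.00 = 90%. The short sketch below reproduces that arithmetic and also shows what Vertical Cloud's 45% saving would imply per TFLOP if it were measured against the same $2.00 baseline; that second figure is a derived illustration under an assumed common baseline, since the table gives no per-TFLOP cost for Vertical Cloud.

```python
def savings_pct(centralized_cost: float, decentralized_cost: float) -> float:
    """Percentage saved by paying decentralized_cost instead of centralized_cost."""
    return (centralized_cost - decentralized_cost) / centralized_cost * 100


def implied_cost(centralized_cost: float, savings_percent: float) -> float:
    """Cost implied by a quoted percentage saving against the same baseline (assumption)."""
    return centralized_cost * (1 - savings_percent / 100)


print(round(savings_pct(2.00, 0.20), 2))  # io.net row vs centralized row
# → 90.0
print(round(implied_cost(2.00, 45), 2))   # 45% applied to the same $2.00 baseline
# → 1.1
```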

This economic inclusivity not only widens participation but also supports circular hardware economies, reducing e-waste while maximizing ROI for device owners worldwide.

Practical Impact: Real-World AI Compute Savings

The numbers speak volumes. Enterprises deploying on decentralized GPU clouds like io.net or Vertical Cloud routinely report cost reductions of up to 70% compared to traditional providers, a figure validated by independent audits and industry benchmarks throughout 2025. These savings are not theoretical; they translate directly into faster go-to-market timelines, expanded R&D budgets, and broader experimentation across industries from healthcare AI to autonomous vehicles.

EdgeAI’s integration with DePIN further amplifies these advantages by enabling sub-50ms inference times on distributed edge nodes, a game-changer for applications demanding real-time responsiveness such as robotics or smart city infrastructure.

Navigating Risks and Looking Ahead

No technological revolution comes without challenges. Decentralized networks must continually address issues around quality assurance, node reliability, and data privacy compliance, especially as regulatory scrutiny intensifies globally. However, advances in cryptographic proofs (like zero-knowledge attestations) and automated staking penalties are rapidly maturing these ecosystems.
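Automated staking penalties work by putting provider collateral at risk: if a node misses its service level, part of its stake is slashed. The sketch below uses a flat 5% slash when measured uptime falls below 99%; the `ProviderStake` record, the threshold, and the slash rate are hypothetical parameters chosen for illustration, and real protocols typically verify uptime through attestations and may scale penalties by severity.

```python
from dataclasses import dataclass


@dataclass
class ProviderStake:
    provider: str
    stake: float  # tokens locked as collateral


def apply_uptime_penalty(p: ProviderStake, observed_uptime: float,
                         required_uptime: float = 0.99, slash_rate: float = 0.05) -> float:
    """Slash slash_rate of the stake if uptime falls below required_uptime; return the amount slashed."""
    if not 0.0 <= observed_uptime <= 1.0:
        raise ValueError("observed_uptime must be a fraction between 0 and 1")
    if observed_uptime >= required_uptime:
        return 0.0  # SLA met: stake untouched
    penalty = p.stake * slash_rate
    p.stake -= penalty
    return penalty


node = ProviderStake("home-rig-b", stake=1000.0)
print(apply_uptime_penalty(node, observed_uptime=0.95), node.stake)
# → 50.0 950.0
```

The design choice is that unreliability costs the provider directly, which aligns node behavior with buyer expectations without a central operator policing each machine.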

The trajectory is clear: as tokenized incentive models align network reliability with user demand, and as more organizations seek alternatives to cloud lock-in, decentralized GPU networks will become a foundational layer in the next generation of AI infrastructure.

If you’re considering how EdgeAI-DePIN can transform your own AI strategy or want more technical details on real-world deployments, explore our guides on scalable DePIN-powered compute or see how decentralized inference costs stack up against AWS.