AI is at a crossroads. As demand for large-scale model training and inference explodes, the cost and scarcity of GPU compute threaten to bottleneck innovation. Enter decentralized GPU networks like EdgenTech, which are redefining the economics of AI compute by unlocking idle resources and creating open, competitive marketplaces. This structural shift is more than just a technical upgrade – it’s a macroeconomic transformation that is already slashing costs, democratizing access, and accelerating the pace of AI adoption across sectors.

The Centralized Bottleneck: Why Traditional Cloud Pricing Is Broken

For years, hyperscale cloud providers have dictated the price and availability of GPU compute. Their dominance has created a rigid marketplace where prices are inflated by scarcity premiums, vendor lock-in, and opaque allocation policies. In 2025, renting high-performance GPUs from major clouds can cost anywhere from $2 to $10 per hour, depending on demand spikes and regional shortages. For AI startups and research labs operating on thin margins, these costs are prohibitive.

But the real inefficiency lies beneath the surface: millions of consumer-grade GPUs worldwide remain idle or underutilized. The centralized model simply can’t aggregate or monetize this latent capacity efficiently. As a result, both supply and pricing have remained artificially constrained – until now.

DePIN Networks: The Rise of Decentralized AI Compute Marketplaces

Decentralized Physical Infrastructure Networks (DePINs) like EdgenTech are overturning this paradigm by pooling GPU resources from thousands of independent operators. These networks leverage blockchain for transparent coordination and token-based incentives to reward contributors for making their hardware available to the network.

The impact on cost is nothing short of dramatic. According to recent data:

- exaBITS has onboarded over 65,000 GPUs globally, enabling clients to reduce compute costs by up to 71% while increasing performance by 130%.

- 0G Compute Network connects idle GPU owners with AI developers at prices up to 90% cheaper than traditional cloud providers.

- Spot pricing models in DePIN markets routinely offer rates 30-50% below on-demand cloud prices, with no long-term commitments or lock-in.
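
To make these percentages concrete, here is a minimal sketch that applies the quoted discounts to a single job. The 1,000 GPU-hour workload and the $4/hour on-demand rate are assumptions chosen for illustration (the rate sits inside the $2 to $10 range cited earlier), and the 71% and 90% figures are "up to" ceilings rather than typical results.

```python
def job_cost(gpu_hours: float, on_demand_rate: float, discount: float = 0.0) -> float:
    """Cost in USD of a job billed at the on-demand hourly rate less a fractional discount."""
    if not 0.0 <= discount < 1.0:
        raise ValueError("discount must be in [0, 1)")
    return round(gpu_hours * on_demand_rate * (1.0 - discount), 2)


# Assumed workload: 1,000 GPU-hours at $4/hour on demand (illustrative numbers only).
GPU_HOURS = 1_000
ON_DEMAND_RATE = 4.00

baseline = job_cost(GPU_HOURS, ON_DEMAND_RATE)
scenarios = [
    ("spot, low end", 0.30),
    ("spot, high end", 0.50),
    ("exaBITS, up to", 0.71),
    ("0G, up to", 0.90),
]
for label, discount in scenarios:
    cost = job_cost(GPU_HOURS, ON_DEMAND_RATE, discount)
    print(f"{label:>15}: ${cost:,.2f} (saves ${baseline - cost:,.2f})")
```

Under these assumptions the same job falls from $4,000 on demand to between $2,800 and $400, depending on which discount actually applies.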

This isn’t theoretical; it’s happening now as DePIN-powered platforms enable enterprises to run real-world workloads at a fraction of legacy costs. By decentralizing supply chains and removing intermediaries, these networks convert what was once wasted capacity into an engine for scalable AI innovation.

The Mechanics Behind Cost Reduction: Token Incentives and Open Market Dynamics

The secret sauce behind these savings lies in a combination of economic incentives and technological transparency. GPU owners stake tokens (such as NOS or EdgenTech’s native asset) to participate in the network; in return they earn rewards proportional to their uptime and compute contribution. This staking mechanism not only secures the network but also aligns incentives for quality service delivery.
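
As a rough illustration of "rewards proportional to uptime and compute contribution," the sketch below splits a fixed reward pool across providers. The weighting rule (uptime multiplied by compute units), the `Provider` type, and the `distribute_rewards` function are all hypothetical; real networks define their own formulas, and this is not any specific protocol's implementation.

```python
from typing import Dict, NamedTuple


class Provider(NamedTuple):
    uptime: float         # fraction of the epoch spent online, 0.0 to 1.0
    compute_units: float  # work completed during the epoch (hypothetical unit)


def distribute_rewards(epoch_reward: float, providers: Dict[str, Provider]) -> Dict[str, float]:
    """Split epoch_reward in proportion to uptime * compute_units (an assumed weighting)."""
    weights = {name: p.uptime * p.compute_units for name, p in providers.items()}
    total = sum(weights.values())
    if total == 0:
        # Nobody contributed this epoch, so nothing is paid out.
        return {name: 0.0 for name in providers}
    return {name: epoch_reward * w / total for name, w in weights.items()}


payouts = distribute_rewards(
    150.0,
    {
        "always_on": Provider(uptime=1.0, compute_units=100.0),
        "half_time": Provider(uptime=0.5, compute_units=100.0),
    },
)
print(payouts)
```

With equal compute but half the uptime, the second provider earns half as much as the first, which is the incentive alignment the staking model relies on.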

The open marketplace structure means that pricing is dynamic – set by real-time supply-demand signals rather than arbitrary vendor schedules. If one region sees excess capacity, prices drop accordingly; if demand spikes elsewhere, new providers are incentivized to join until equilibrium is restored.
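
One simple way to picture this price discovery is a uniform-price market: fill demand from the cheapest offers upward and let the last accepted offer set the price. The sketch below assumes that model; the `clearing_price` function and the ask data are hypothetical, and actual DePIN marketplaces may match orders quite differently.

```python
from typing import List, Optional, Tuple

Ask = Tuple[float, int]  # (price per GPU-hour in USD, GPUs offered)


def clearing_price(asks: List[Ask], demand_gpus: int) -> Optional[float]:
    """Return the price of the last ask needed to cover demand, or None if supply falls short."""
    remaining = demand_gpus
    for price, gpus in sorted(asks):  # cheapest offers are filled first
        remaining -= gpus
        if remaining <= 0:
            return price
    return None


asks = [(1.50, 10), (0.80, 5), (1.10, 10)]

normal = clearing_price(asks, demand_gpus=12)                   # served by the two cheapest asks
spike = clearing_price(asks, demand_gpus=20)                    # demand reaches the priciest ask
glut = clearing_price(asks + [(0.60, 20)], demand_gpus=12)      # a new low-cost provider joins
print(normal, spike, glut)
```

In this toy market, a demand spike pulls the price up toward the most expensive offer, while a new low-cost provider pushes it down, mirroring the equilibrium behavior described above.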

This fluidity stands in stark contrast to legacy clouds’ rigid tiers and unpredictable surcharges. It also creates opportunities for cross-DePIN integration (e.g., bridging between Solana-based compute networks or tapping into Web3-native payment rails), opening new avenues for arbitrage and efficiency gains across the ecosystem.

Pioneering Use Cases: From Research Labs to Enterprise AI Deployments

The implications extend far beyond mere cost savings. Decentralized GPU networks are powering breakthroughs in scientific computing, generative art, large language model inference, video rendering, gaming infrastructure, and more. Early adopters range from university research teams running distributed experiments on borrowed hardware to Fortune 500 enterprises optimizing production-scale inference pipelines without breaking their budgets.

This wave of democratization is particularly vital for emerging markets where access to enterprise-grade GPUs was previously unattainable due to capital constraints or regulatory barriers. Now anyone with spare hardware can participate in – and profit from – the global AI economy.

As these decentralized GPU networks mature, their impact is rippling across the AI value chain. By lowering the barriers to entry, platforms like EdgenTech are enabling a new generation of developers, startups, and institutions to experiment and deploy advanced AI models without the existential risk of runaway infrastructure costs. This shift isn’t just about cost savings; it’s about unleashing innovation at a global scale.

Real-World AI Projects Powered by Decentralized GPU Networks

-

exaBITS: Global AI Compute MarketplaceexaBITS has onboarded over 65,000 GPUs worldwide, enabling organizations to cut AI compute costs by up to 71% and boost performance by 130%. Its decentralized marketplace unlocks millions of consumer GPUs for scalable AI workloads.

-

0G Compute Network: Affordable AI Model Training0G connects idle GPU owners with AI developers, offering a decentralized compute marketplace that’s up to 90% cheaper than traditional cloud providers. This network democratizes access to AI resources for startups and researchers.

-

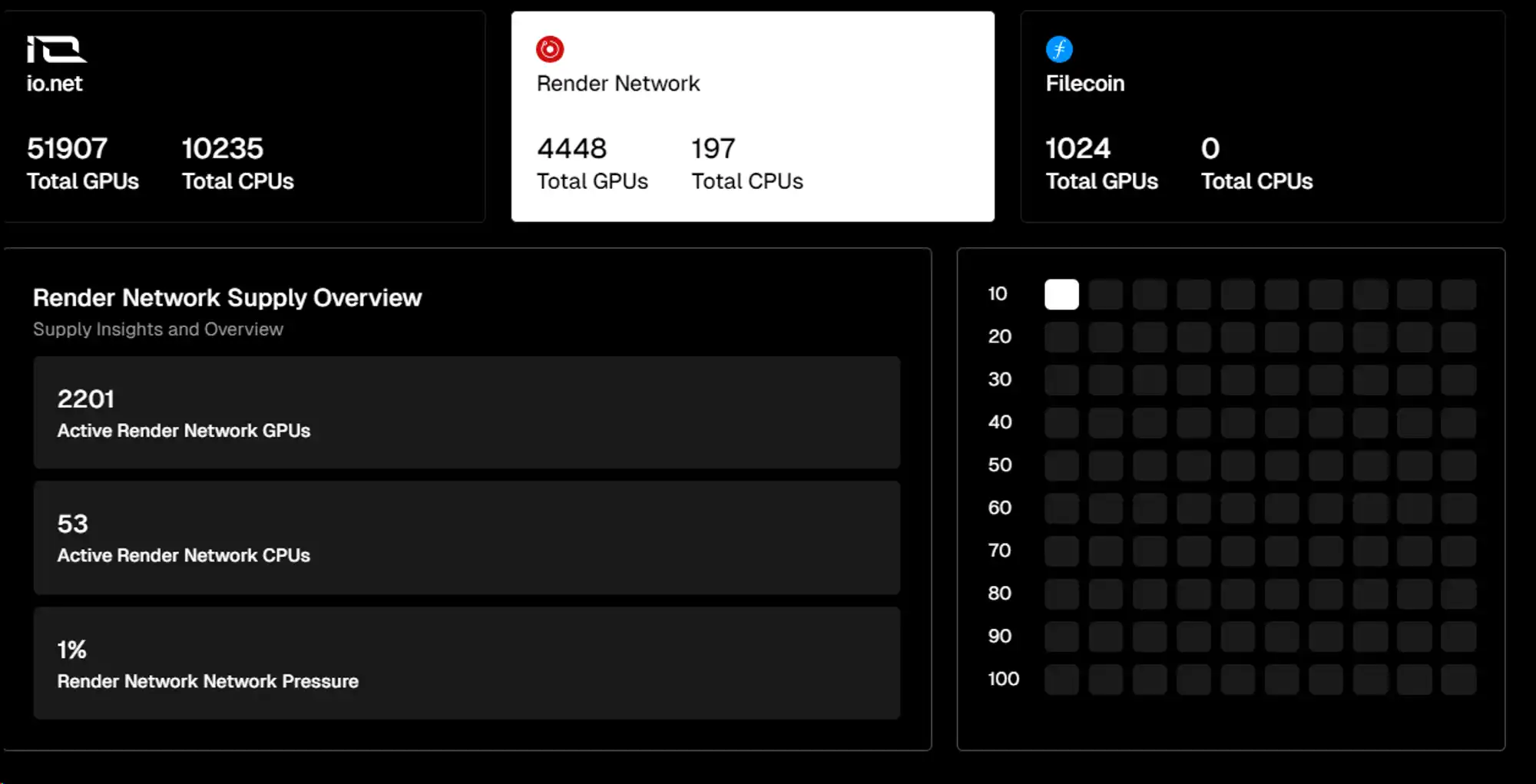

Render Network: Distributed Rendering for AI & GraphicsRender Network leverages a global pool of decentralized GPUs for AI model training, 3D rendering, and graphics workloads. Its distributed architecture enables cost-effective, high-performance compute for creative and scientific projects.

-

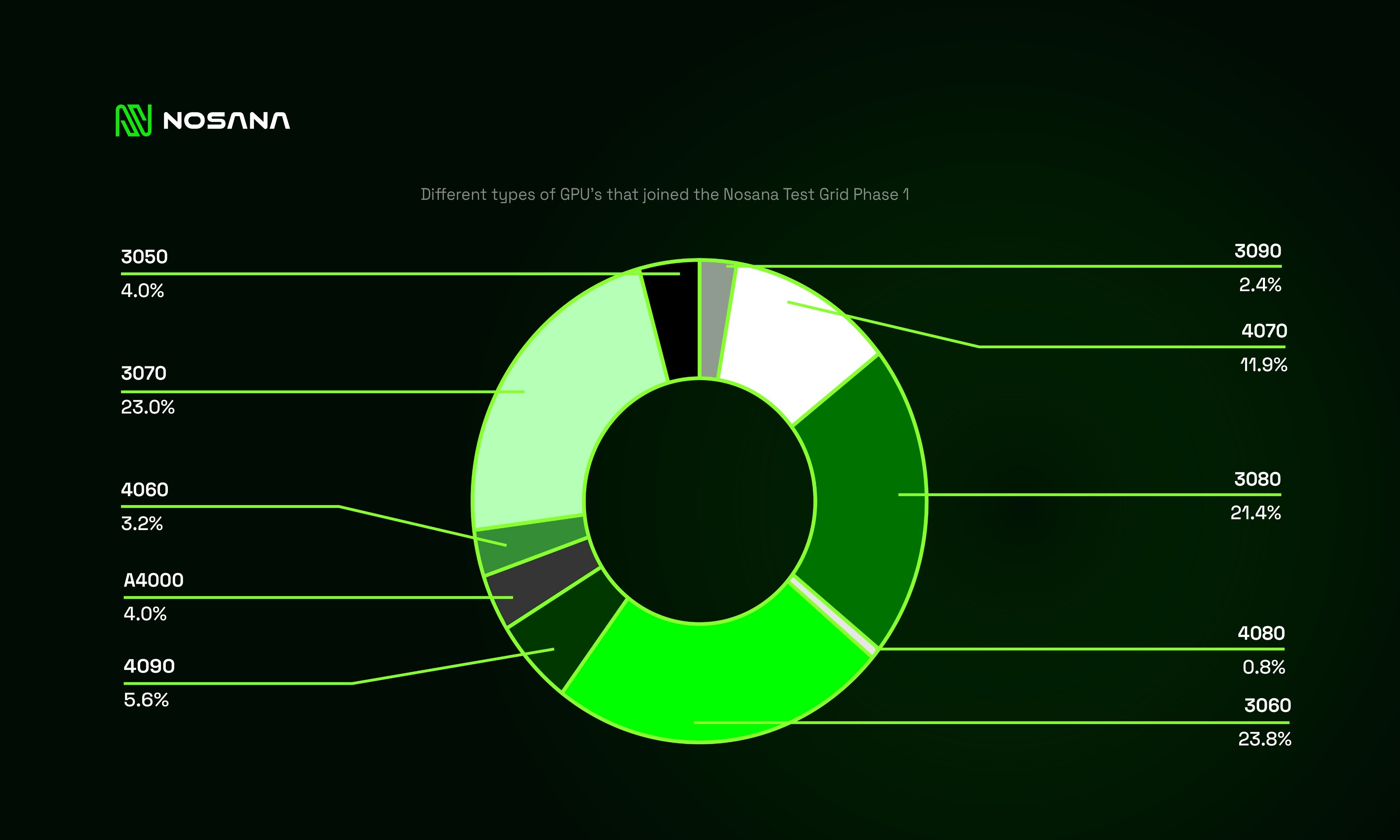

Nosana: Decentralized GPU Compute GridNosana is building a decentralized GPU grid with 29.7 million NOS tokens staked (valued at $92.4 million). The platform supports AI inference and training tasks, making compute more accessible and affordable for developers.

-

Akash Network: Open-Source Cloud for AI WorkloadsAkash Network provides a decentralized, open-source cloud marketplace where users can access affordable GPU compute for AI, ML, and data science applications. Its permissionless model reduces barriers for AI innovation.

Tokenized incentives are catalyzing this transformation. By rewarding both GPU providers and users with network tokens, DePIN projects create a virtuous cycle: more supply lowers prices, which attracts more demand, further boosting network effects. Staking mechanisms also enhance reliability and service quality: bad actors are penalized while high performers are rewarded. This contrasts sharply with traditional cloud contracts, where users have little recourse against spotty service or price gouging.

The transparency inherent in blockchain-based coordination is another game changer. Every transaction, from job assignment to payment, is auditable on-chain, reducing disputes and fostering trust among pseudonymous participants. This is particularly crucial for enterprise clients running sensitive workloads who require verifiable performance guarantees.
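
The auditability property can be illustrated with a hash-chained log, in which each record commits to the one before it so that any later edit is detectable. This is a simplified, single-machine sketch: the record fields and function names are hypothetical, and a real blockchain adds consensus, signatures, and replication on top of this basic idea.

```python
import hashlib
import json
from typing import Any, Dict, List

GENESIS = "0" * 64  # placeholder hash that precedes the first record


def _digest(prev_hash: str, payload: Dict[str, Any]) -> str:
    """SHA-256 over the previous hash plus a canonical JSON encoding of the payload."""
    body = json.dumps({"prev": prev_hash, "payload": payload}, sort_keys=True)
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


def append_record(log: List[Dict[str, Any]], payload: Dict[str, Any]) -> None:
    """Append a record whose hash commits to the entire history before it."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({"prev": prev_hash, "payload": payload, "hash": _digest(prev_hash, payload)})


def verify(log: List[Dict[str, Any]]) -> bool:
    """Recompute every link; any edited or reordered record breaks the chain."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash or entry["hash"] != _digest(prev_hash, entry["payload"]):
            return False
        prev_hash = entry["hash"]
    return True


log: List[Dict[str, Any]] = []
append_record(log, {"event": "job_assigned", "job": "j-1", "provider": "p-7"})
append_record(log, {"event": "payment", "job": "j-1", "amount": 12.5})
print(verify(log))  # the untouched log verifies
```

Changing any stored payload after the fact, such as the payment amount, makes `verify` return `False`, which is what lets pseudonymous parties audit a shared history without trusting one another.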

Challenges Ahead: Scaling Trust and Interoperability

No paradigm shift comes without friction points. Decentralized GPU networks must still overcome hurdles around standardization, security, and regulatory compliance. Ensuring that consumer-grade GPUs meet enterprise requirements for uptime and data integrity requires robust validation protocols, often enforced through cryptographic proofs or hardware attestation.

Interoperability between DePINs is another frontier. As specialized networks proliferate (some optimized for Solana-native workloads, others for Web3 gaming or scientific research), the ability to move jobs seamlessly across platforms will be key to maximizing global efficiency. Cross-network bridges and open APIs are already under active development but will require ongoing collaboration between stakeholders.

Looking Forward: The New Economics of AI Compute

The macro trend is clear: decentralized GPU networks are not just an alternative; they’re rapidly becoming the preferred solution for scalable, affordable AI compute in 2025 and beyond. As token incentives mature and interoperability improves, expect even steeper reductions in cost per inference or training run, especially compared to the static pricing models of legacy clouds.

This evolution isn’t just technical; it’s fundamentally economic. By transforming idle hardware into liquid assets tradable on open markets, DePINs are rewriting the rules of digital infrastructure provisioning. The result? More equitable access to compute power, accelerated AI innovation cycles, and a broader distribution of economic rewards across the ecosystem.
