The landscape of AI compute has undergone a seismic shift in 2025. As demand for large-scale AI inference surges, the bottleneck is no longer just about model innovation, but about the liquidity and availability of GPU resources on a global scale. Decentralized AI compute networks are now at the heart of this transformation, redefining how GPU power is sourced, priced, and utilized for real-time inference workloads. The implications for developers, enterprises, and investors are profound: liquid GPU marketplaces, dynamic pricing models, and cross-network bridges are enabling scalable and affordable access to compute like never before.

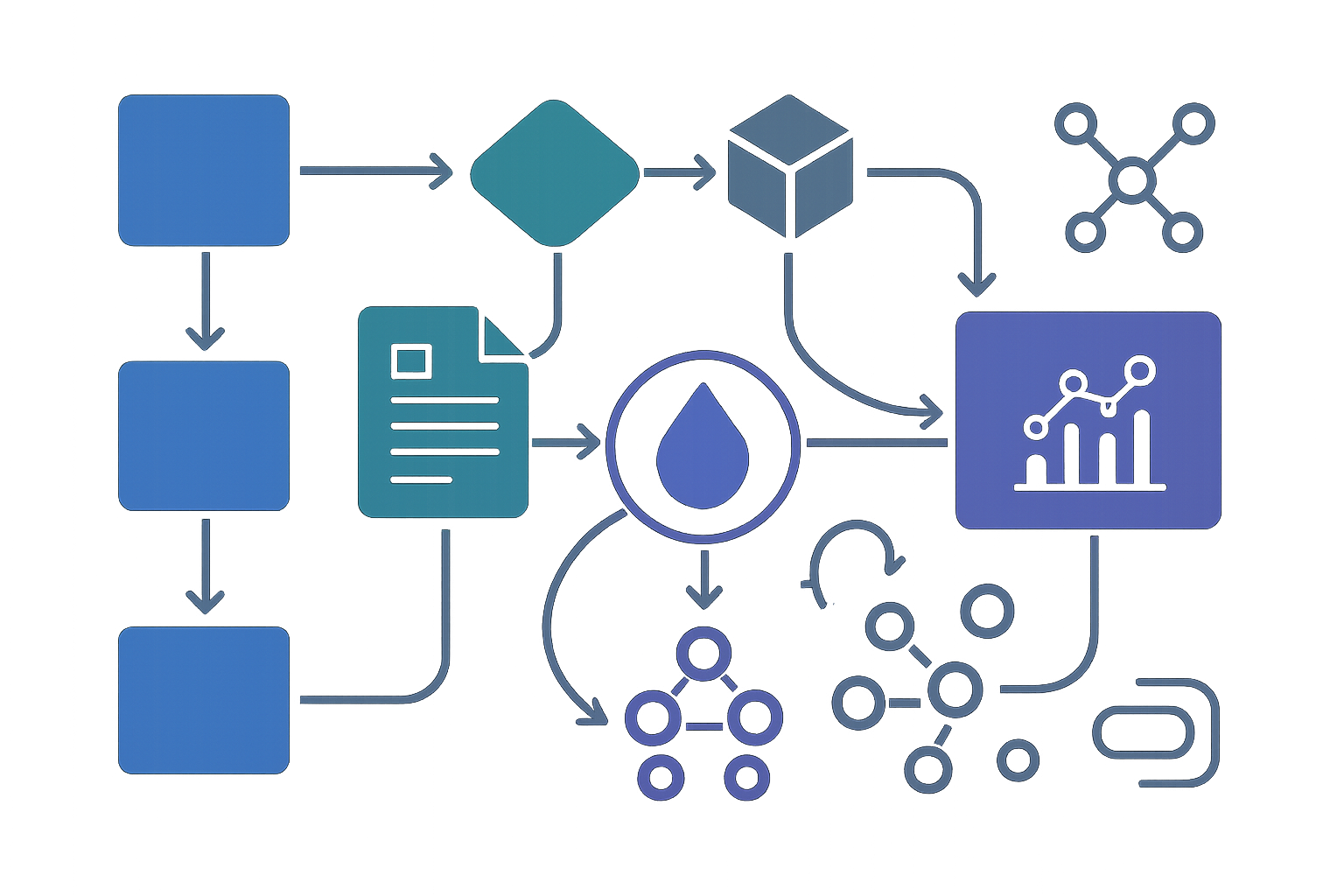

Decentralized GPU Marketplaces: Unlocking Global Liquidity

Platforms such as Render (RNDR) and Rynus have rapidly evolved from niche rendering engines to robust decentralized GPU marketplaces. These platforms allow anyone with idle GPUs – whether tucked away in gaming rigs or enterprise data centers – to rent out their compute power to AI developers worldwide. The result is a global pool of on-demand hardware that slashes costs for inference tasks compared to traditional cloud providers.

This model is fundamentally changing the economics of AI compute. By tokenizing access to hardware and leveraging crypto-powered payment rails, these networks achieve near-instant settlement and incentivize continuous supply. The impact is already being felt in verticals like generative media, video inference, and creative workflows where cost sensitivity and burst demand are critical factors. For a deeper dive into how these dynamics play out in practice, see how decentralized GPU marketplaces are powering the next generation of AI compute networks.

“A liquid GPU market helps smooth the spikes. The network wins if jobs are reliable, affordable, and quick.” – TokenTax 2025 Report

Innovations in Real-Time AI Inference Architecture

The arms race for efficient inference isn’t just about more GPUs; it’s about smarter architectures that maximize throughput under tight memory and bandwidth constraints. NVIDIA’s Rubin CPX GPU represents a leap forward here: with 128GB of GDDR7 memory paired with 30 petaFLOPS of NVFP4 compute, it’s engineered specifically to handle long-context inference for models like GPT-5 and Grok 3.

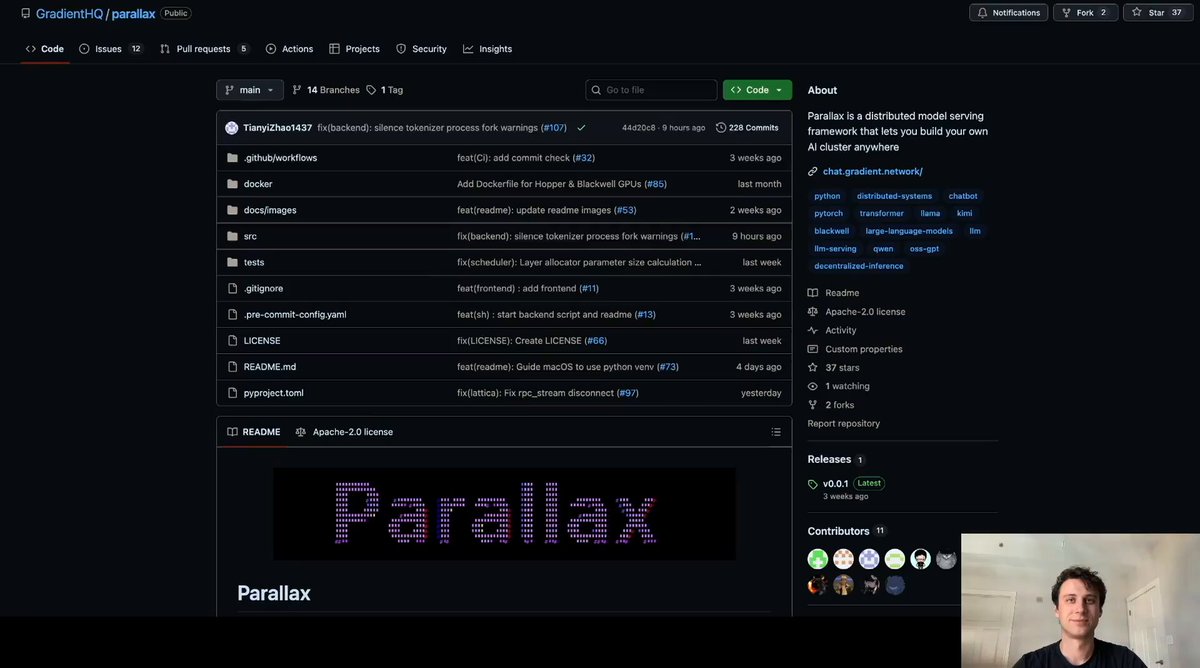

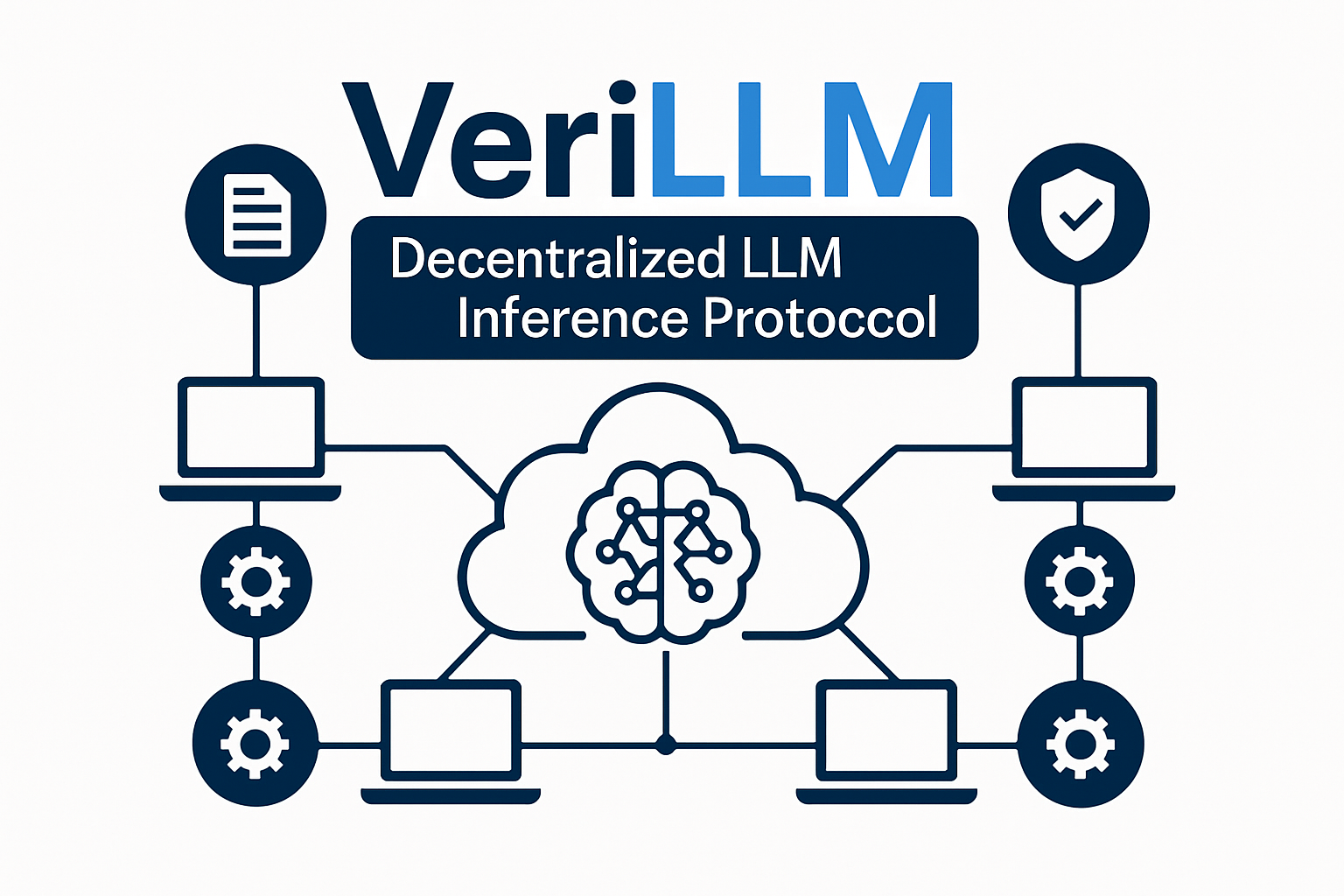

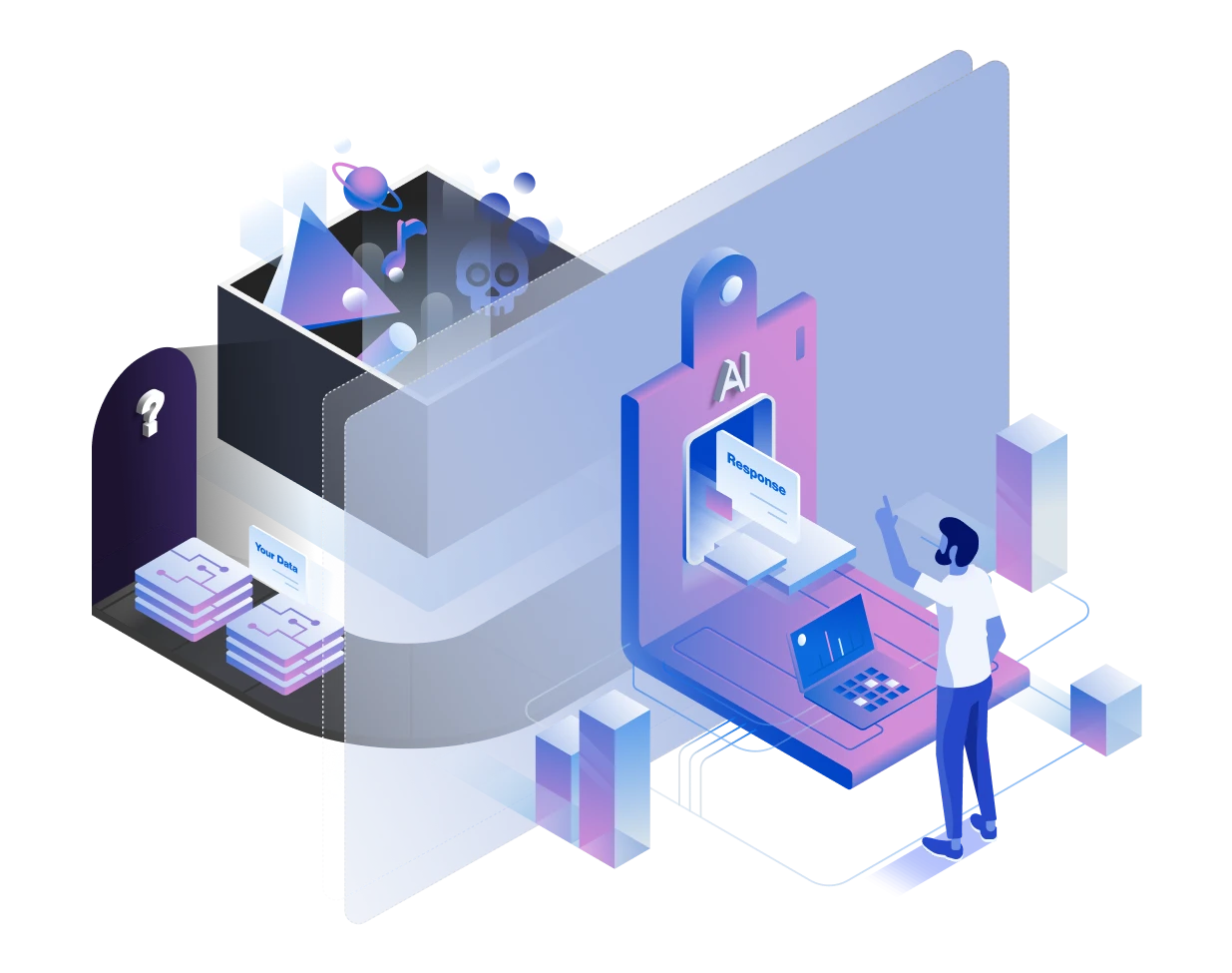

But hardware alone doesn’t solve the distributed scheduling challenge. Enter frameworks like Parallax and VeriLLM. Parallax orchestrates heterogeneous GPUs across geographies through a two-phase scheduler that optimizes both latency and cost efficiency. Meanwhile, VeriLLM introduces cryptographic verifiability into LLM inference workflows, meaning users can check results even when computation happens on untrusted nodes.

This blend of hardware innovation with decentralized orchestration is enabling real-time AI applications at scales previously reserved for hyperscalers.
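To make the two-phase idea concrete, here is a minimal toy sketch in Python. It is not Parallax’s actual algorithm: the `Node` fields, the memory-based filter, and the weighted latency/price score are all assumptions chosen purely for illustration. Phase one discards nodes that cannot fit the job; phase two ranks the remaining nodes by a blend of latency and price.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Node:
    """A hypothetical GPU node advertised on a decentralized network."""
    name: str
    free_mem_gb: float
    latency_ms: float      # assumed > 0
    price_per_hour: float  # assumed > 0


def feasible(nodes: List[Node], required_mem_gb: float) -> List[Node]:
    """Phase 1: keep only nodes with enough free memory for the job."""
    return [n for n in nodes if n.free_mem_gb >= required_mem_gb]


def pick(nodes: List[Node], latency_weight: float = 0.5) -> Node:
    """Phase 2: choose the node with the lowest weighted latency/price score.

    Both metrics are normalized by the maximum among the candidates so that
    neither dominates simply because of its unit.
    """
    max_latency = max(n.latency_ms for n in nodes)
    max_price = max(n.price_per_hour for n in nodes)

    def score(n: Node) -> float:
        return (latency_weight * n.latency_ms / max_latency
                + (1 - latency_weight) * n.price_per_hour / max_price)

    return min(nodes, key=score)


def schedule(nodes: List[Node], required_mem_gb: float,
             latency_weight: float = 0.5) -> Optional[Node]:
    """Run both phases; return None when no node can host the job."""
    candidates = feasible(nodes, required_mem_gb)
    if not candidates:
        return None
    return pick(candidates, latency_weight)


# Illustrative (made-up) nodes
NODES = [
    Node("gaming-rig", free_mem_gb=24, latency_ms=40, price_per_hour=0.5),
    Node("dc-large", free_mem_gb=80, latency_ms=120, price_per_hour=1.2),
    Node("dc-mid", free_mem_gb=48, latency_ms=60, price_per_hour=0.7),
]
```

Raising `latency_weight` toward 1 favors nearby nodes, while lowering it toward 0 favors cheap ones. A production scheduler would also need to account for bandwidth, reliability, and how model layers are split across devices, none of which this sketch attempts.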

The Rise of DeFi-Enabled Compute Liquidity Layers

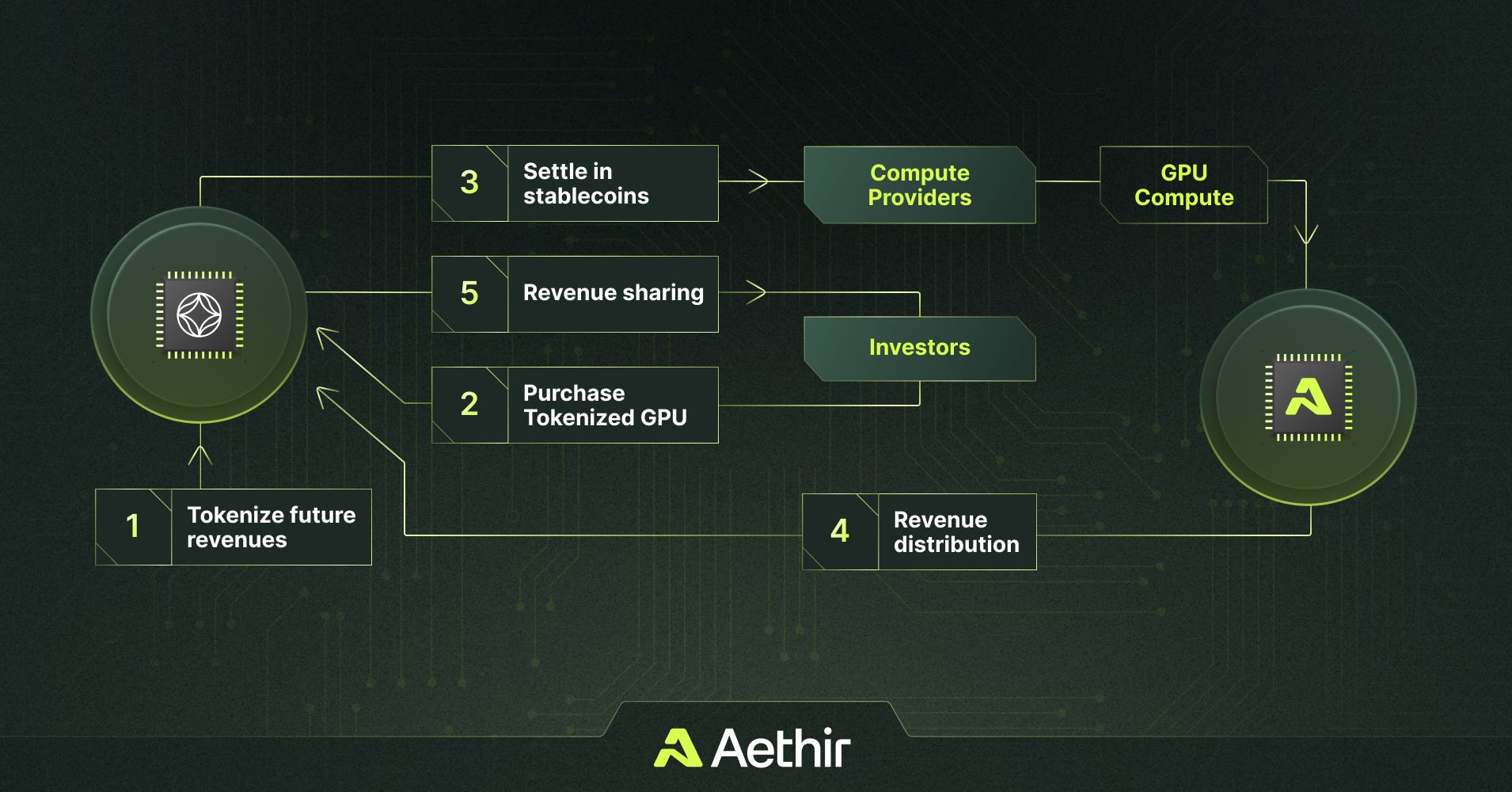

One of 2025’s most intriguing developments is the integration of DeFi protocols directly into the fabric of decentralized AI infrastructure. Protocols like USD.AI bridge stablecoin capital pools with physical GPU assets by tokenizing hardware as NFTs; these NFTs represent claims on real-world equipment deployed in data centers optimized for AI workloads.

This approach not only unlocks new funding channels for scaling up global compute supply but also creates a secondary market where liquidity can flow dynamically between financial investors and infrastructure operators. It’s an elegant solution to smoothing out supply shocks during periods of peak demand while democratizing access to high-performance GPUs outside legacy cloud monopolies.

The convergence between DeFi capital markets and decentralized compute layers points toward an era where liquidity isn’t just measured in tokens or dollars – it’s measured in teraflops available on tap anywhere in the world.

As these decentralized AI compute networks mature, we’re seeing the emergence of cross-network bridges and dynamic pricing engines that further enhance efficiency and resilience. Instead of being locked into a single provider or cloud silo, developers can now tap into the best-priced GPU resources across multiple networks in real time. This interoperability is crucial for mission-critical inference workloads where latency, cost, and uptime are paramount.

Dynamic pricing models, driven by transparent on-chain auctions and real-time supply-demand signals, mean that GPU rates can adjust instantly to market conditions. This not only incentivizes more hardware owners to contribute idle resources but also gives AI builders unprecedented control over their compute budgets. For those interested in the nuts and bolts of these pricing mechanisms, our deep dive on real-time GPU markets in DePIN unpacks the latest innovations.
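As a rough illustration of how a supply-demand signal could move a GPU rate, the sketch below nudges the hourly price up when demand exceeds supply and down when capacity sits idle. The function name, the `sensitivity` parameter, and the floor and cap values are hypothetical, and the sketch does not model the on-chain auction side at all; real networks will use their own rules.

```python
def next_price(price: float, demand: float, supply: float,
               sensitivity: float = 0.1,
               floor: float = 0.05, cap: float = 10.0) -> float:
    """Return the next hourly GPU price given current demand and supply.

    demand and supply are in the same unit (for example, GPU-hours requested
    vs. GPU-hours offered). A utilization of 1.0 leaves the price unchanged;
    higher utilization raises it, lower utilization reduces it.
    The result is clamped to [floor, cap] (both values are assumptions).
    """
    if supply <= 0:
        # No capacity on offer: quote the maximum allowed price.
        return cap
    utilization = demand / supply
    adjusted = price * (1 + sensitivity * (utilization - 1))
    return max(floor, min(cap, adjusted))


def simulate(price: float, rounds: list) -> list:
    """Apply next_price over a sequence of (demand, supply) observations."""
    history = []
    for demand, supply in rounds:
        price = next_price(price, demand, supply)
        history.append(price)
    return history
```

Calling `simulate(1.0, [(150, 100), (150, 100), (50, 100)])` would show the rate climbing during two rounds of excess demand and easing once demand falls. A small `sensitivity` keeps rates from swinging wildly between rounds, which matters for builders trying to budget compute.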

“Decentralized compute is getting real. The idea of decentralized GPU networks where users rent compute on demand and hardware owners earn is fundamentally changing the game.” – CoinDesk 2025 Feature

Enterprise Adoption and New Use Cases

The ripple effects are already visible across sectors: from fintech firms running sensitive LLM inference workflows with cryptographic verifiability, to creative studios leveraging burst GPU capacity for generative video, to AI startups deploying edge inference at scale without up-front capital expenditure. Even major enterprises are piloting hybrid approaches, combining internal clusters with decentralized networks, to achieve both cost savings and operational flexibility.

The open nature of these networks also fosters innovation at the application layer. Projects like Neurolov are pioneering browser-based Web3 AI compute, while Livepeer’s marketplace powers live and on-demand AI video at global scale. As more developers build atop these composable primitives, expect a new wave of permissionless AI services, from privacy-preserving inference to community-curated model marketplaces, reshaping what’s possible with decentralized infrastructure.

Challenges Ahead: Security, Verification and Network Effects

No transformation comes without friction. The shift to decentralized AI compute surfaces new challenges around workload verification, data privacy, and network reliability. Protocols like VeriLLM offer promising cryptographic proof schemes for LLM inference integrity, but mainstream adoption will require robust standards for trustless execution across heterogeneous hardware pools.

Meanwhile, network effects will continue to favor platforms that deliver seamless onboarding for both supply (GPU owners) and demand (AI builders). Liquidity begets liquidity: as more participants join a network, price discovery improves and job completion times shrink, creating a flywheel effect that entrenches market leaders.

Top Decentralized AI Compute Protocols Transforming GPU Liquidity in 2025

- Render Network (RNDR): A leading decentralized GPU marketplace, Render enables users to rent out idle GPU power for AI training, inference, and creative tasks. By aggregating global GPU resources, Render lowers costs and increases accessibility for AI developers, driving efficiency in real-time AI workloads.

- Rynus: Rynus operates a rapidly growing decentralized GPU network, providing affordable, on-demand compute for AI applications. Its open marketplace model transforms surplus hardware into a distributed engine for AI inference, supporting a wide range of machine learning and creative workflows.

- Parallax: Parallax pioneers decentralized AI inference with a two-phase scheduler that efficiently utilizes heterogeneous GPU pools. This framework optimizes both latency and throughput, making large-scale, real-time AI inference more accessible and cost-effective.

- VeriLLM: VeriLLM introduces a publicly verifiable protocol for decentralized LLM (large language model) inference. By ensuring security and efficiency with minimal verification costs, VeriLLM makes trustworthy, distributed AI inference possible for enterprise and public applications.

- USD.AI: Bridging DeFi and AI, USD.AI tokenizes GPU hardware as NFTs and channels on-chain liquidity into loans for AI data centers equipped with NVIDIA GPUs. This innovative protocol enhances GPU liquidity, fueling the expansion of decentralized AI infrastructure.

- Livepeer (LPT): Originally focused on decentralized video streaming, Livepeer has evolved into a global compute marketplace for AI video. Its permissionless platform powers live and on-demand media compute, leveraging decentralized GPU resources for scalable AI-powered video processing.

- Internet Computer (ICP): With native on-chain AI app hosting and a market cap exceeding $800M, Internet Computer provides a scalable backbone for decentralized AI applications. Its infrastructure supports real-time inference and seamless integration with other decentralized protocols.

Strategic Outlook: What Comes Next?

The next phase will see further integration between DePIN infrastructure layers (compute, storage, bandwidth) and cross-chain interoperability solutions. As tokenized hardware becomes a core asset class within DeFi portfolios, expect new financial products tailored specifically for managing exposure to real-world compute risk.

For investors and builders alike, the message is clear: decentralized AI compute networks have moved from theory to production, unlocking a programmable liquidity layer for global GPU resources. The winners in this new era will be those who master not just model design or hardware procurement, but also the art of orchestrating distributed infrastructure at scale.

If you’re ready to explore how these trends can shape your next project or portfolio allocation, check out our guides on scalable DePIN-powered AI compute and cost advantages vs AWS. The age of liquid GPUs is here, and it’s just getting started.